Issue with backups listings and saving backup config in 6.2

-

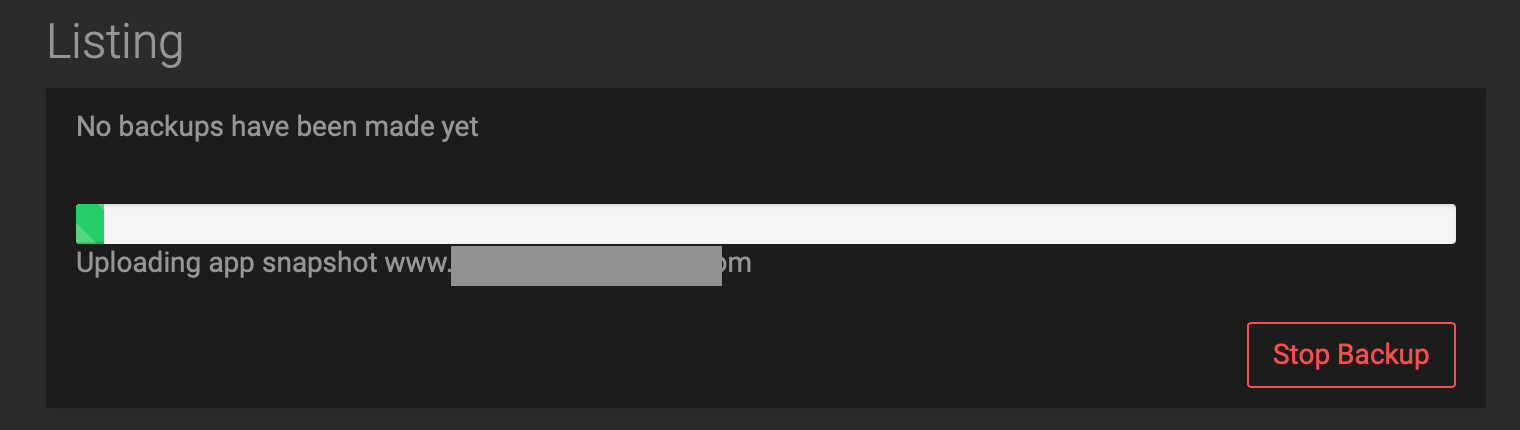

I can also add that the backup I ran earlier this morning was successful, still using the OVH Object Storage backend. If it's truly on version 6.2 as it appears to be, then I guess that rules out the `storage` endpoint as an issue, right? The logs also indicated a missing Region earlier from the failed backup prior.

I'm not 100% sold on this being caused by the change from `s3` to `storage` endpoints yet (even though mine still shows `s3` despite being on 6.2). haha. I think there's something bigger afoot here, especially if we're seeing Region missing too after the upgrade.

Sorry for making things confusing, just trying to update as I learn more, lol. I don't mean to be moving the goal posts.

-

@d19dotca OK, sorry, I can confirm you are not imagining things. If I use OVH in the dropdown, it does not work. If I use it in "S3 compatible", it works (i.e. testing both with the `storage` subdomain). It should be easy to figure out now what is different.

-

@girish lol, okay glad to know I'm not crazy. I am still wondering how it changed from `storage` to `s3`, as I didn't change the preset at all; it still shows OVH Object Storage, not the s3-compatible type one (though I realize they appear to use the same code in the backend).

-

@d19dotca Found it! Phew.

The `storage` subdomain only supports the path style API, whereas the `s3` subdomain supports the subdomain style API. The path style API is already deprecated (though they have backtracked a bit on it) - https://aws.amazon.com/blogs/aws/amazon-s3-path-deprecation-plan-the-rest-of-the-story/ . So, the `s3` subdomain is the way to go with its vhost based API access.

Now, for the Cloudron UI, all the named providers use vhost style. This is why `storage` does not work. The 'Compat' option (and minio) uses the path style for compat reasons.

-

@d19dotca It seems that apart from trying to figure out why `storage` magically changed to `s3`, we are good. I suspect there may be a UI bug. I'm just clicking around the UI to see if it's something obvious to reproduce.

-

@girish Actually sorry one more thing I'm wondering about... if we believe the `storage` and `s3` thing in the GUI to just be a possible GUI issue, wouldn't that mean it's in fact using `storage` then, since that's what the 6.2 code points it to and the database points to it too? And if it's indeed using the `storage` endpoint then my last backup worked, so why would that work if `storage` isn't the right endpoint? lol Sorry just trying to wrap my head around it and make sure we've resolved it.

-

@girish Actually sorry one more thing I'm wondering about... if we believe the

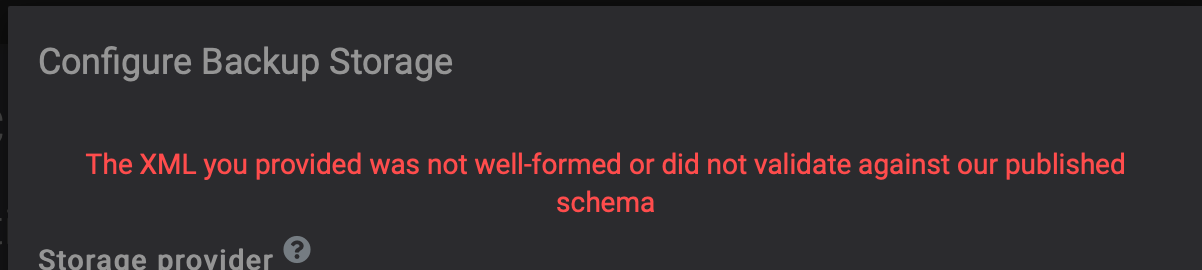

storageands3thing in the GUI to just be a possible GUI issue, wouldn't that mean it's in fact usingstoragethen since that's what the 6.2 code points it to and the database points to it too? And if it's indeed using thestorageendpoint then my last backup worked, so why would that work ifstorageisn't the right endpoint? lol Sorry just trying to wrap my head around it and make sure we've resolved it.@d19dotca Sure. So the db in 6.2 says

storageand also path style disabled. This means that when a user goes to Backups view, he will seestoragebut backups will fail. Also, if you go to Backups -> Configure and Click Save making no changes, you will get that XML error.wouldn't that mean it's in fact using storage then since that's what the 6.2 code points it to and the database points to it too

Correct

And if it's indeed using the storage endpoint then my last backup worked, so why would that work if storage isn't the right endpoint?

This, I am not so sure (and I guess cause of much confusion for us). Atleast, from my tries here, I couldn't get it work with

storageendpoint with 6.2. I don't see how it can work with path style disabled. I guess one way to know this for sure is to go to the EventLog and see if any backup succeeded after the 6.2 update but before the backup config was changed? Sadly, I think we don't log backup config in the event log. -

@d19dotca Also, looking at your post here - https://forum.cloudron.io/post/27080 . Somehow you managed to get s3 in endpoint URL. I think this is why the backup succeeded. Also, to clarify, what is in the db and what is in the UI are in "sync".

-

@girish said in Issue with backups listings and saving backup config in 6.2:

Somehow you managed to get s3 in endpoint URL. I think this is why the backup succeeded. Also, to clarify, what is in the db and what is in the UI are in "sync".

That's the thing, I didn't do that, at least not intentionally. haha. So I was about to go change to the generic s3-compatible type, but before I did that that's when I noticed the Region was empty, so I just left it as it was but set the Region and saved, then tried the backup again to see if it was successful and it was. Is it possible that saving it somehow overwrote the

storageendpoint back tos3? Maybe another part of the code needs to be modified?For the database, it showed the

storageendpoint used earlier this morning, and when I run the same command now it shows thes3endpoint instead. So I think maybe part of the confusion earlier was that when I saved the changes (where all I did was edit the Region from null to BHS), it overwrote it back tos3fromstorage. Do you think that's possibly what happened, if you look at the code side of it?Here's what the DB looks like now, but compare to this the link above where I pasted it this morning before I saved the change to fill in the Region field...

ubuntu@cloudron:~$ mysql -uroot -ppassword -e "SELECT * FROM box.settings WHERE name='backup_config'" mysql: [Warning] Using a password on the command line interface can be insecure. +---------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ | name | value | +---------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ | backup_config | {"provider":"ovh-objectstorage","format":"tgz","memoryLimit":4294967296,"schedulePattern":"00 00 7,19 * * *","retentionPolicy":{"keepWithinSecs":172800},"bucket":"cloudron-backups","prefix":"","accessKeyId":"<accesskey>","secretAccessKey":"<secretkey>","endpoint":"https://s3.bhs.cloud.ovh.net","region":"bhs","signatureVersion":"v4","uploadPartSize":1073741824,"encryption":null} | +---------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ -
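For anyone comparing `backup_config` dumps like the one above, it may help to pull out only the fields under discussion (provider, endpoint subdomain, region). This is a minimal Python sketch, not anything Cloudron ships: the `summarize` helper is hypothetical, and the `value` string is the row above trimmed to the relevant keys.

```python
import json
from urllib.parse import urlsplit

# Value column of the backup_config row, trimmed to the keys that matter here.
value = (
    '{"provider":"ovh-objectstorage","bucket":"cloudron-backups",'
    '"endpoint":"https://s3.bhs.cloud.ovh.net","region":"bhs",'
    '"signatureVersion":"v4"}'
)


def summarize(raw: str) -> dict:
    """Hypothetical helper: extract provider, endpoint subdomain and region."""
    config = json.loads(raw)
    host = urlsplit(config["endpoint"]).hostname or ""
    return {
        "provider": config.get("provider"),
        # First DNS label of the endpoint host, e.g. "s3" or "storage".
        "endpoint_subdomain": host.split(".")[0],
        # An empty or null region is reported as None.
        "region": config.get("region") or None,
    }


summary = summarize(value)
print(summary)
```

Running the same helper on a dump taken before and after saving the backup settings would show at a glance whether the endpoint subdomain or the region changed.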

I can confirm too in a recent test: if I opt to use the s3-compatible method and put in the `https://storage.bhs.cloud.ovh.net/` endpoint, my backups succeed, and that also jives with what I wrote last year. So I still wonder if the issue truly is with the `storage` endpoint. Or is that explained by the path style differences you spoke about earlier? I'm not familiar with that part at all, so not sure if that explains why it works fine when using the s3-compatible method instead of the dedicated OVH Object Storage method for the `storage` endpoint URL. That page you linked to definitely shows the `s3` endpoint URL, but knowing OVH, their docs tend to be outdated / not actively maintained, so I wonder if it should still show the `storage` endpoint at all, since that's the endpoint URL shown in the GUI everywhere in the OVH Control Panel and the OpenStack backend. Just throwing it out there. I think you have a better handle on this now than I do, so I'll leave it to you. haha. But if I can help troubleshoot at all further, I'll be happy to help. For now, I've moved to the s3-compatible one and used the `s3` endpoint as you suggested.

-

@d19dotca said in Issue with backups listings and saving backup config in 6.2:

> I'm not familiar with that part at all, so not sure if that explains why it works fine when using the s3-compatible method instead of the dedicated OVH Object Storage method for the storage endpoint URL

I can explain at a high level. Essentially, given an endpoint, say s3.objectstorage.com, it's a question of how to access the objects of a bucket `foo`. There are two "styles" of accessing the S3 REST API: one is https://foo.s3.objectstorage.com (the vhost style) and the other is https://s3.objectstorage.com/foo (path style). The former, as you can see, requires a subdomain to be set up per bucket. The latter is deprecated for various reasons, including security.

When you choose 'OVH', we set the path style to false. When you choose 'S3 compat', we set the path style to true. This is why `storage` works with S3 compat - it only supports the legacy (path) API style.

I think OVH does not display s3 in the UI because those are openstack endpoints. In fact, getting S3 API access (as you know) in OVH is super geeky. You have to download this openrc.sh and then use a CLI tool to generate access keys etc. It's really a second class citizen / afterthought. (The S3 API is actually just an optional addon in openstack. Lots of people implemented openstack 5-6 years ago but they are all in various states of dead afaik. Only huge/massive companies use it now since it's a behemoth.)

> For now, I've moved to the s3-compatible one and used the s3 endpoint as you suggested

I pushed a 6.2.1. You can update to that and use the OVH backend, which will use the `s3` subdomain.

In any case, if you opened a ticket with OVH, let me know what they say.
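As a rough illustration of the two addressing styles described above, here is a minimal Python sketch that builds the request URL for one object in each style. It is not Cloudron's actual code: the `object_url` helper, the `backup.tgz` key, and the `s3-compat` and `minio` preset names are made up for the example (only `ovh-objectstorage` appears in the thread).

```python
from urllib.parse import urlsplit, urlunsplit


def object_url(endpoint: str, bucket: str, key: str, path_style: bool) -> str:
    """Hypothetical helper: URL an S3 client would request for one object."""
    parts = urlsplit(endpoint)
    if path_style:
        # Path style: the bucket is the first path segment.
        return urlunsplit((parts.scheme, parts.netloc, f"/{bucket}/{key}", "", ""))
    # Vhost style: the bucket becomes a subdomain of the endpoint host.
    return urlunsplit((parts.scheme, f"{bucket}.{parts.netloc}", f"/{key}", "", ""))


# Assumed preset table mirroring the thread: named providers use vhost style,
# the generic 'S3 compat' option (and minio) uses path style.
PRESET_PATH_STYLE = {"ovh-objectstorage": False, "s3-compat": True, "minio": True}

for preset, path_style in PRESET_PATH_STYLE.items():
    url = object_url("https://s3.objectstorage.com", "foo", "backup.tgz", path_style)
    print(f"{preset}: {url}")
```

An endpoint that only answers path style requests will fail for any preset whose flag is false, which matches the behaviour seen with the `storage` subdomain under the OVH preset.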

-

@girish Ah that helps explain it then, thanks Girish!

I really appreciate the time and educational aspect to this. Thanks again!

-

@d19dotca To add to this, this is why minio has path style set to true: it would be a pain for selfhosters to create a subdomain (DNS and certs) for every bucket they create.

Edit: just looked this up now. In minio, one can set `MINIO_DOMAIN` to enable vhost style per https://docs.min.io/docs/minio-server-configuration-guide.html . I have to test if this works with Cloudron's domain alias feature.