Serge - LLaMa made easy 🦙 - self-hosted AI Chat

-

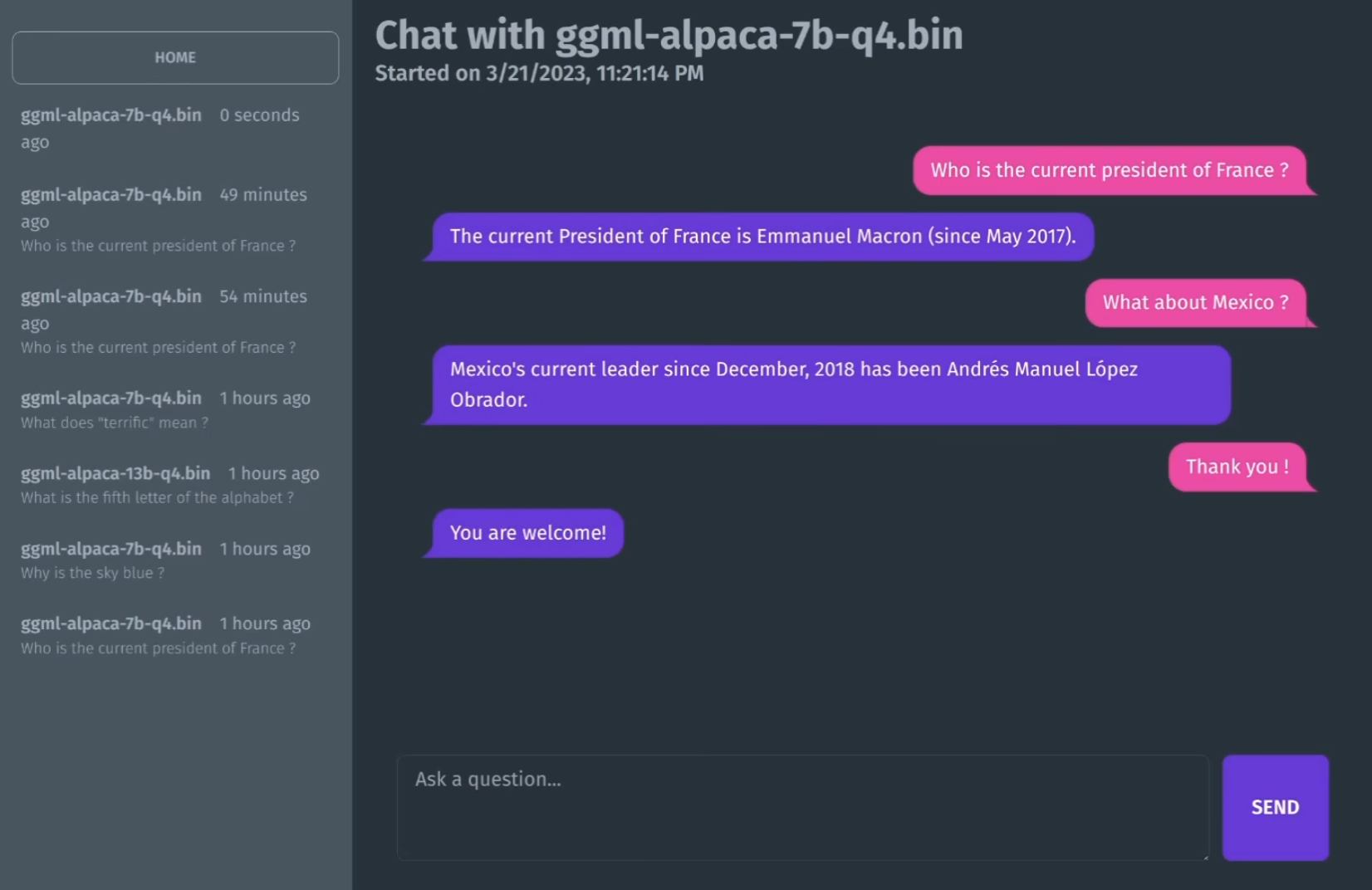

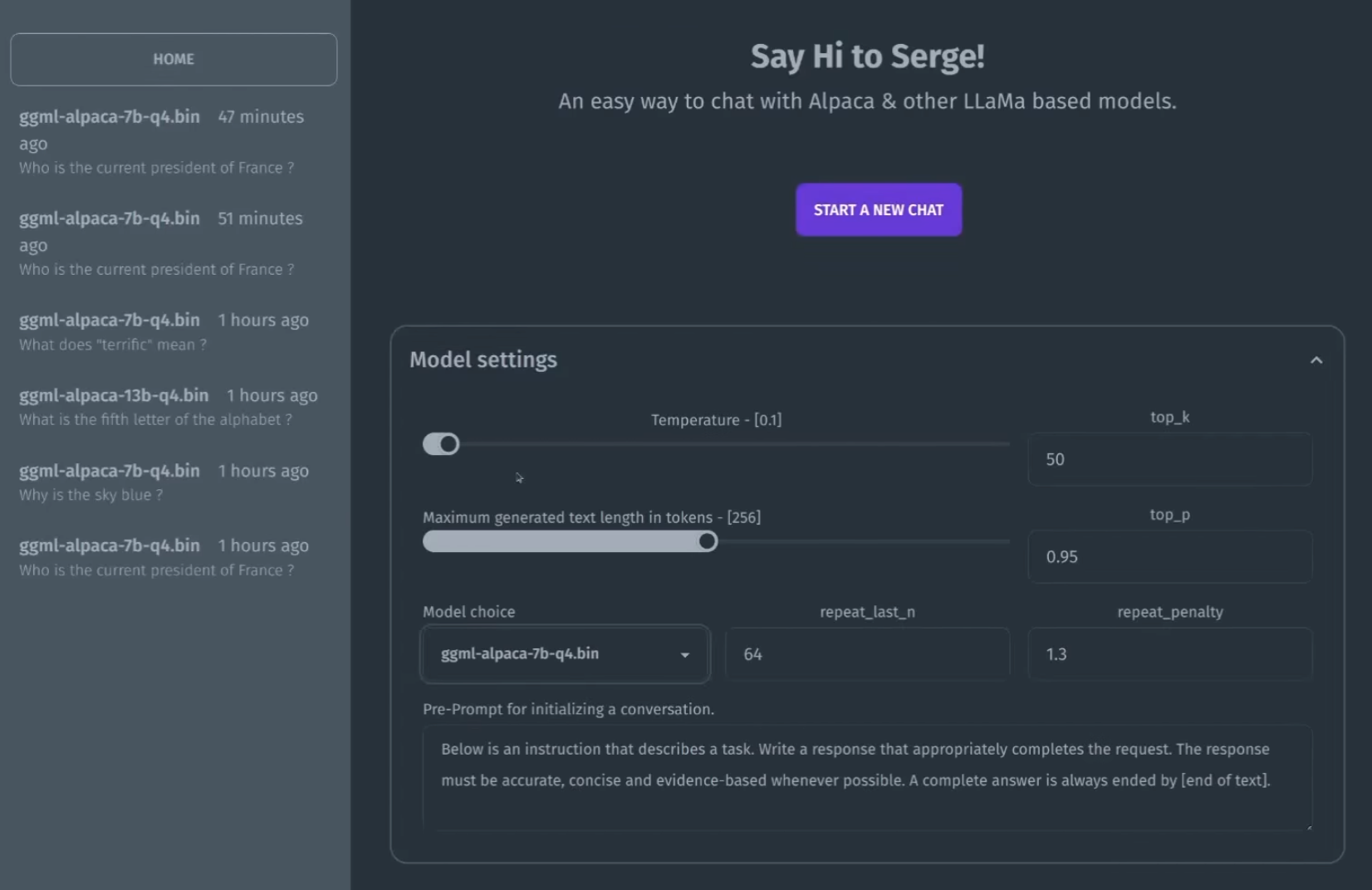

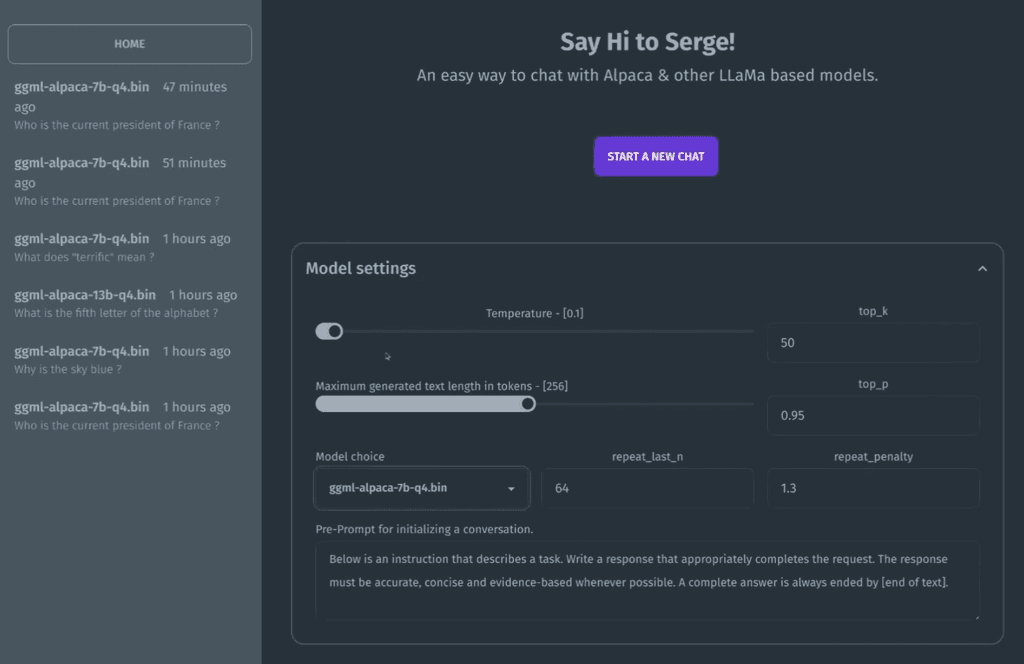

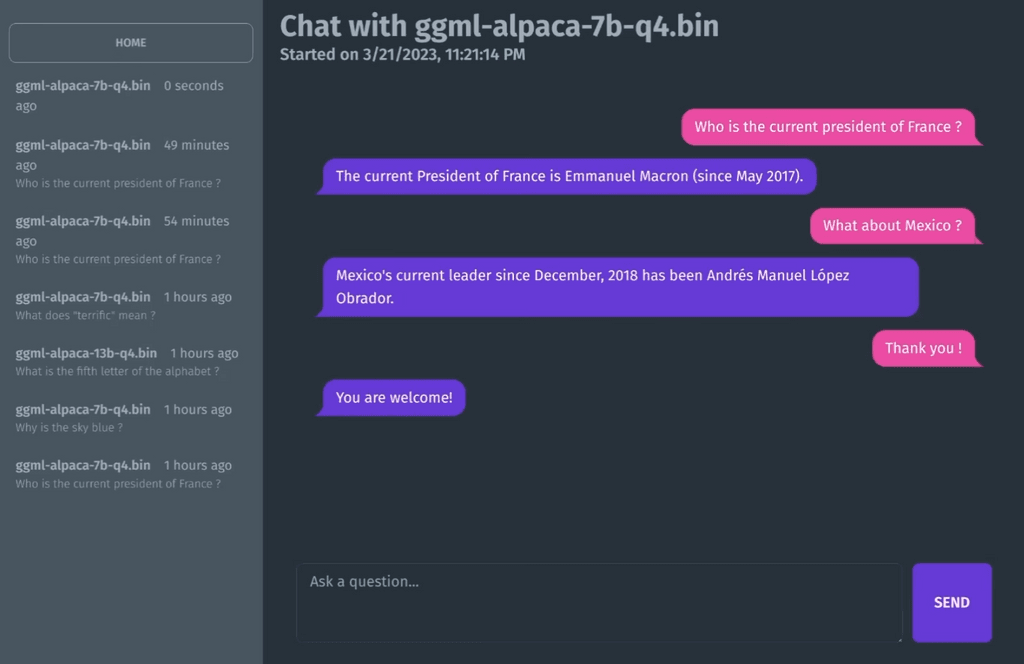

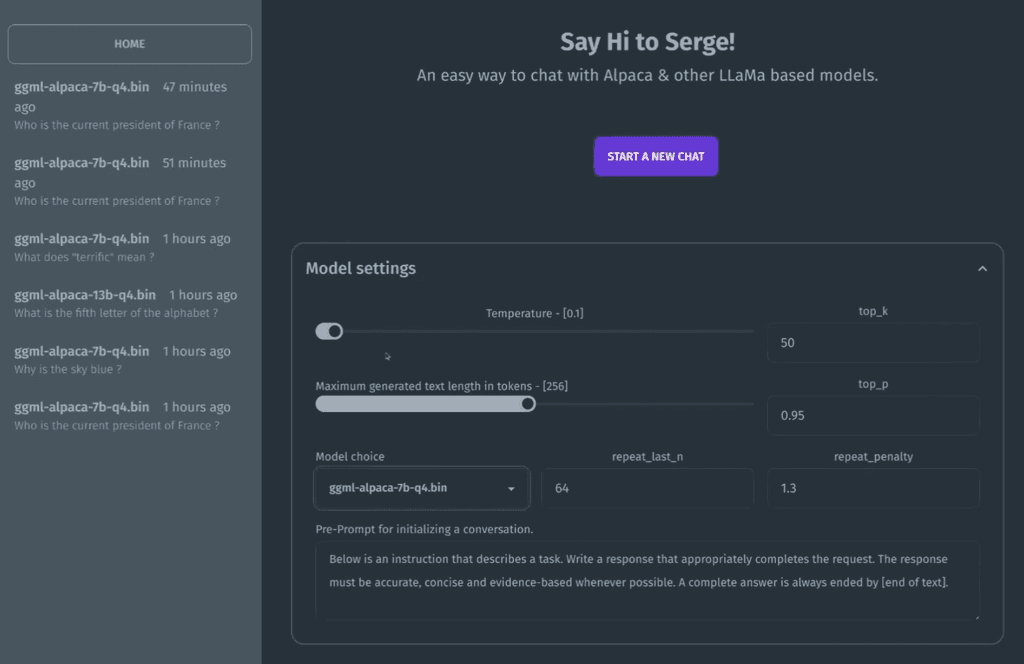

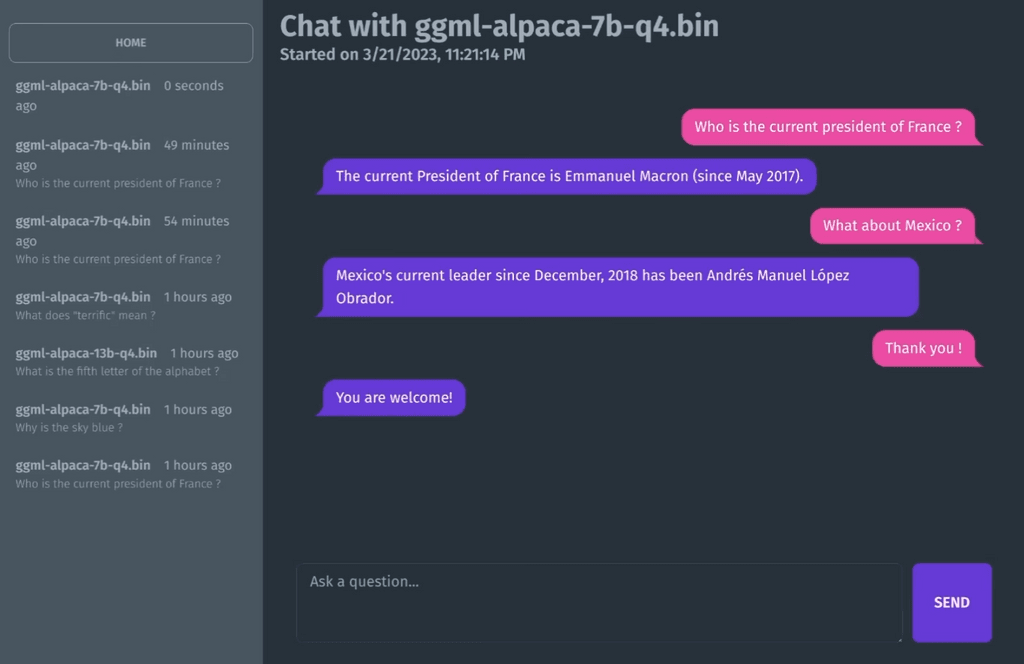

chat interface based on llama.cpp for running Alpaca models. Entirely self-hosted, no API keys needed. Fits on 4GB of RAM and runs on the CPU.

SvelteKit frontend

MongoDB for storing chat history & parameters

FastAPI + beanie for the API, wrapping calls to llama.cpp

-

@marcusquinn Have you been able to get the ggml-alpaca-30B-q4_0 model to work? For me the best it can do is eggtime or give me a "loading" message.

@JOduMonT I think you might like this project.

@LoudLemur Not tried yet. Just spotted it on my Reddit travels, skimming past a post on it i the self-hosting sub-Reddit.

-

L LoudLemur referenced this topic on

-

@LoudLemur Not tried yet. Just spotted it on my Reddit travels, skimming past a post on it i the self-hosting sub-Reddit.

@marcusquinn said in Serge - LLaMa made easy 🦙 - self-hosted AI Chat:

@LoudLemur Not tried yet. Just spotted it on my Reddit travels, skimming past a post on it i the self-hosting sub-Reddit.

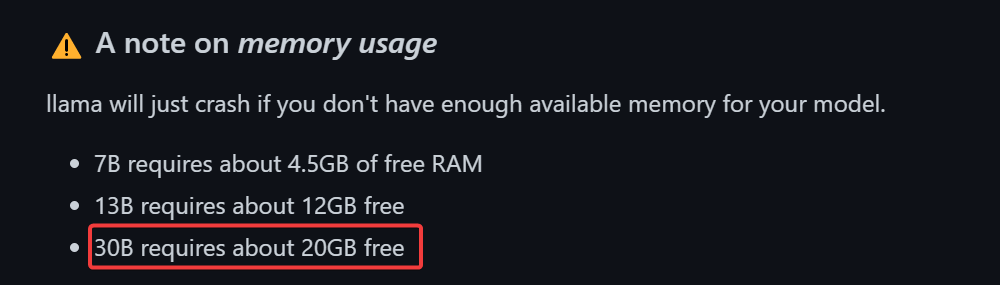

It was a RAM issue. If you don't have a lot of RAM, it is best not to multi-task with it.

-

chat interface based on llama.cpp for running Alpaca models. Entirely self-hosted, no API keys needed. Fits on 4GB of RAM and runs on the CPU.

SvelteKit frontend

MongoDB for storing chat history & parameters

FastAPI + beanie for the API, wrapping calls to llama.cpp

@marcusquinn There is now a medical Alpaca too:

https://teddit.net/r/LocalLLaMA/comments/12c4hyx/introducing_medalpaca_language_models_for_medical/

We could ask it health related questions, or perhaps it could ask us questions...

-

@lars1134 said in Serge - LLaMa made easy 🦙 - self-hosted AI Chat:

Is there any interest in getting this in the app store?

There is also this, but I couldn't see the code for the GUI:

https://lmstudio.ai/ -

@lars1134 said in Serge - LLaMa made easy 🦙 - self-hosted AI Chat:

Is there any interest in getting this in the app store?

There is also this, but I couldn't see the code for the GUI:

https://lmstudio.ai/It looks like that only runs locally, but I like the program.

I liked serge as I have quite a bit of ram left unused at one of my servers.

-

This might be a good one for @Kubernetes ?

-

This might be a good one for @Kubernetes ?

@timconsidine I already played with it on my local machines. I still miss quality for other languages than english. In addition it is very RAM and Disk consuming. So I think it is better to run it dedicated instead of running in shared mode with other applications.

-

I'm on huggingface and the library is huge! My laptop has 32GB RAM and an empty 500GB secondary SSD. Which model would be a good GPT-3.5 alternative?

-

I'm on huggingface and the library is huge! My laptop has 32GB RAM and an empty 500GB secondary SSD. Which model would be a good GPT-3.5 alternative?

@humptydumpty said in Serge - LLaMa made easy 🦙 - self-hosted AI Chat:

I'm on huggingface and the library is huge! My laptop has 32GB RAM and an empty 500GB secondary SSD. Which model would be a good GPT-3.5 alternative?

This model uses the recently released Llama2, is uncensored as far as things go and works well and even better with longer prompts:

-

@Kubernetes Thank you for looking into it. I understand the limitations. I run a few servers that have more than 10 EPYC cores and 50GB RAM left unused - those servers have a lot more available resources than our local clients. But I understand that not too many have a similar situation.

-

@Kubernetes Thank you for looking into it. I understand the limitations. I run a few servers that have more than 10 EPYC cores and 50GB RAM left unused - those servers have a lot more available resources than our local clients. But I understand that not too many have a similar situation.

-

@lars1134 the tests I've run in a LAMP app allows these to run in less than 5GB RAM (depending on model) using the right combo of sw all on CPU only.

@robi said in Serge - LLaMa made easy 🦙 - self-hosted AI Chat:

using the right combo of sw all on CPU only.

The GGML versions of the models are designed to offload the work onto the CPU and RAM and, if there is a GPU available, to use that too. Q6 versions offer more power than e.g. Q4, but are a bit slower.

-

From Claude AI:

"

Here is a quick comparison of FP16, GPTQ and GGML model versions:FP16 (Half precision float 16):

Uses 16-bit floats instead of 32-bit floats to represent weights and activations in a neural network.

Reduces model size and memory usage by half compared to FP32 models.

May lower model accuracy slightly compared to FP32, but often accuracy is very close.

Supported on most modern GPUs and TPUs for efficient training and inference.GPTQ (Quantization aware training):

Quantizes weights and/or activations to low bitwidths like 8-bit during training.

Further compresses model size over FP16.

Accuracy is often very close to FP32 model.

Requires quantization aware training techniques.

Currently primarily supported on TPUs.GGML (Mixture of experts):

Partitions a single large model into smaller expert models.

Reduces compute requirements for inference since only one expert is used per sample.

Can maintain accuracy of original large model.

Increases model size due to overhead of gating model and expert models.

Requires changes to model architecture and training procedure.

In summary, FP16 is a straightforward way to reduce model size with minimal accuracy loss. GPTQ can further compress models through quantization-aware training. GGML can provide inference speedups through model parallelism while maintaining accuracy. The best choice depends on hardware constraints, accuracy requirements and inference latency needs." -

@lars1134 the tests I've run in a LAMP app allows these to run in less than 5GB RAM (depending on model) using the right combo of sw all on CPU only.

@robi said in Serge - LLaMa made easy 🦙 - self-hosted AI Chat:

@lars1134 the tests I've run in a LAMP app allows these to run in less than 5GB RAM (depending on model) using the right combo of sw all on CPU only.

Awesome, I will definitely look into that today and tomorrow. Thank you for the inspiration

. I will let you know how things went for me.

. I will let you know how things went for me.