Import 5gb sql file into MYSQL database

-

Hello,

I'm currently in the process of migrating Traccar to Cloudron and have encountered a significant challenge. I have a MySQL database file that is around 5GB in size, and despite numerous attempts, I cannot seem to upload it to the tmp folder successfully. The folder remains empty after each attempt, which is puzzling since I've had no issues with smaller databases from other applications.

Are there any specific settings or steps that I should follow to bypass this hurdle? Any guidance or recommendations would be greatly appreciated as I'm keen on completing this migration successfully.

Thank you in advance for your assistance and advice.

-

You can always directly upload it to the root server and move it into the

/app/data/folder.

Just rsync the file to the root server and move it to/home/yellowtent/appdata/{ID}/data/ -

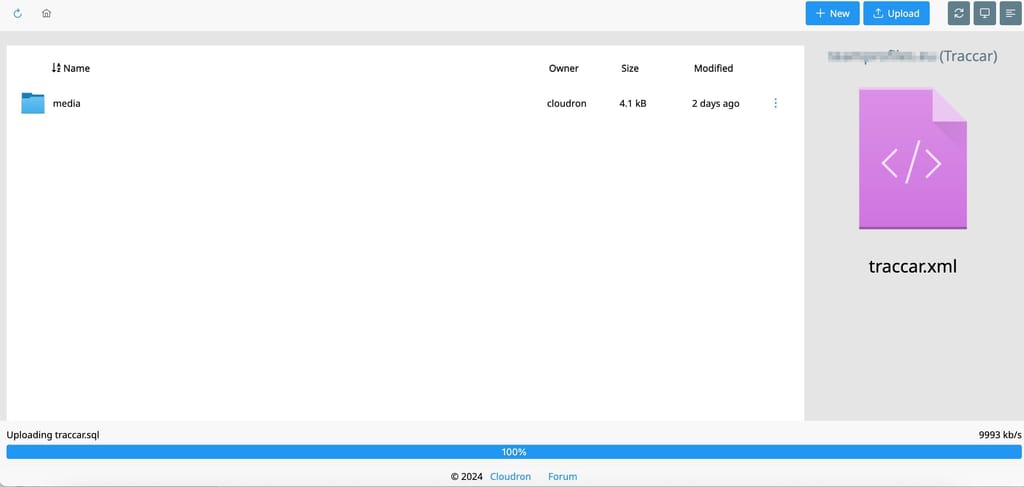

@vadim have you tried uploading via filemanager ? For historic reasons, Filemanager and Upload to tmp are separate upload functionalities. Once you upload the file in filemanager, you will find it in /app/data in the Web terminal.

-

@vadim by any chance, is your dashboard behind Cloudflare? I think Cloudflare has some upload limit.

@girish I have my own server, don't use Cloudflare. I got finally copied with 2 steps:

- Ubunti terminal

cp /mnt/usb/traccar.sql /home/yellowtent/appsdata/836aeaf4-bc7b-44cb-9227-2ca70aa0f7df/data/traccar.sql- Cloudron > Traccar terminal

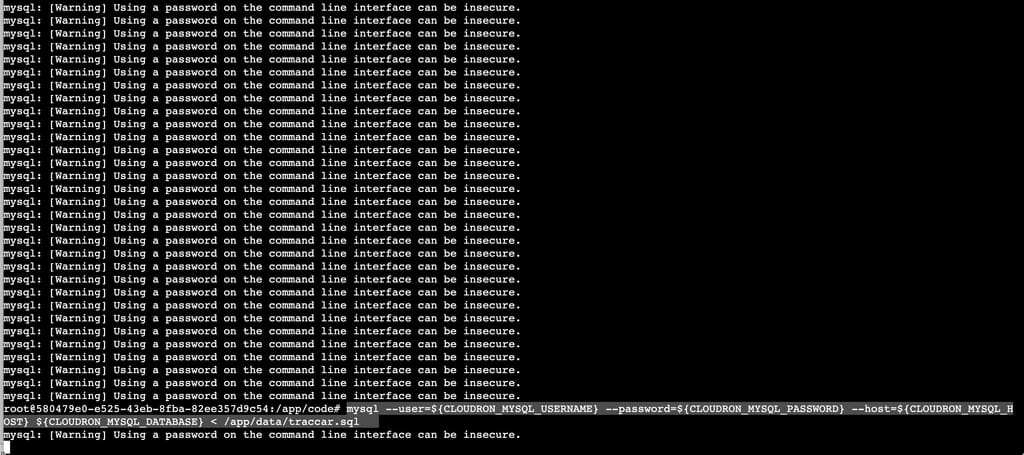

cp /app/data/traccar.sql /tmp/traccar.sqlBut! Dump import was not successful; it was aborted after a long time, and Traccar is not responding. I used:

mysql --user=${CLOUDRON_MYSQL_USERNAME} --password=${CLOUDRON_MYSQL_PASSWORD} --host=${CLOUDRON_MYSQL_HOST} ${CLOUDRON_MYSQL_DATABASE} < /tmp/traccar.sqlWhat is the best way to import this big file?

-

G girish marked this topic as a question on

G girish marked this topic as a question on

-

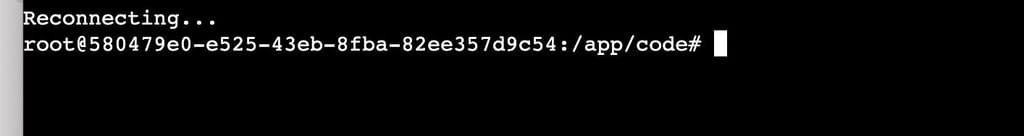

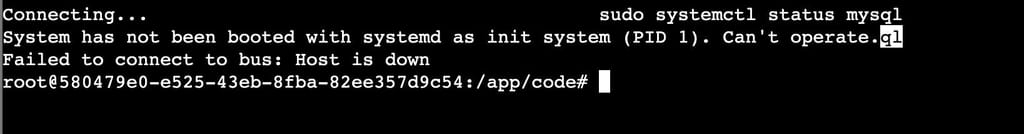

@vadim the systemctl command can only be run via SSH (and not the web terminal).

If you are comfortable with docker, you can try one more thing:

- SSH into server

- Find the container of the traccar app. You can find this from

docker ps | grep traccar docker exec -ti <containerid> /bin/bash. Do the MySQL import here .

Thing is I suspect the web terminal is disconnecting because the command is running too long. Maybe there are proxies in the middle that disconnect the connection. There could be many reasons (OS/Network/Firewall/Proxy/Browser...). Doing docker exec directly atleast rules out most of the issues.

-

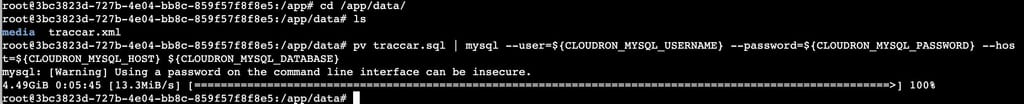

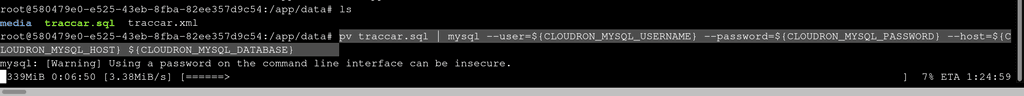

The issue here is that disk speed is quite low (90Mb/sec). To track the slow progress:

- Put app in recovery mode

- apt update && apt install pv

pv traccar.sql | mysql --user=${CLOUDRON_MYSQL_USERNAME} --password=${CLOUDRON_MYSQL_PASSWORD} --host=${CLOUDRON_MYSQL_HOST} ${CLOUDRON_MYSQL_DATABASE}

FWIW, it imported in < 10 mins in my test installation on DigitalOcean.

-

You can always directly upload it to the root server and move it into the

/app/data/folder.

Just rsync the file to the root server and move it to/home/yellowtent/appdata/{ID}/data/@BrutalBirdie said in Import 5gb sql file into MYSQL database:

You can always directly upload it to the root server and move it into the /app/data/ folder.

Just rsync the file to the root server and move it to /home/yellowtent/appdata/{ID}/data/@vadim this is one of the most save ways to get the file to the server.

Once on the root system you can copy it over and over for trying out different solutions so you don't have to wait for the upload over and over again. -

@BrutalBirdie said in Import 5gb sql file into MYSQL database:

You can always directly upload it to the root server and move it into the /app/data/ folder.

Just rsync the file to the root server and move it to /home/yellowtent/appdata/{ID}/data/@vadim this is one of the most save ways to get the file to the server.

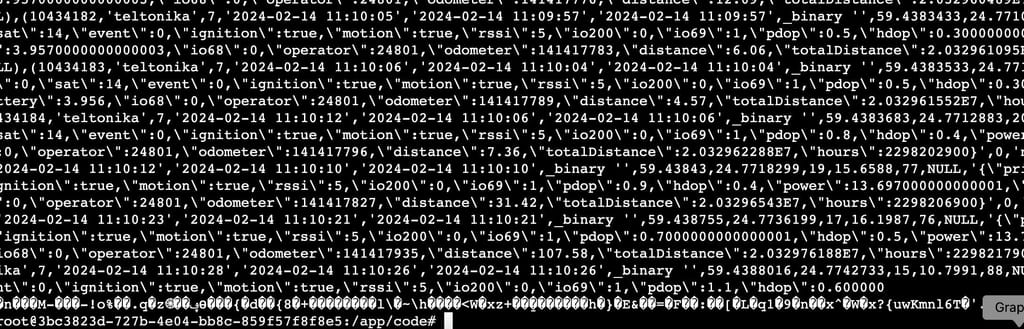

Once on the root system you can copy it over and over for trying out different solutions so you don't have to wait for the upload over and over again.@BrutalBirdie I have sql files in /app/data and use this way. But every import is blank. No way to get data from source service.

I have Traccar installed for 4 years since very old version. DB works perfect with new version. Could be there a problem with "old" db trying to import to a new installation?

-

@vadim so the DB is importing but you have to make sure that you import the database into the right version of traccar. Which version of traccar were you using?

Also, after import, have you tried inspecting the tables? Make sure you were not using any table prefixes etc.

-

@vadim so the DB is importing but you have to make sure that you import the database into the right version of traccar. Which version of traccar were you using?

Also, after import, have you tried inspecting the tables? Make sure you were not using any table prefixes etc.

-

@vadim when you installed traccar, did you install it with

Leave user management to the app? Otherwise, it will use LDAP by default. This flag can only be set at install time. So, start with a fresh installation and selectLeave user management to the appat install time. -

After a fresh installation with the option "Leave user management to the app" (the same option was used previously), none of the existing users are able to log in. Additionally, it's impossible to change or recover their passwords. I cleared the old database and proceeded with the import. However, after doing so, the system user admin@cloudron.local, who shouldn't be present, remained in the system after the import.

-

After a fresh installation with the option "Leave user management to the app" (the same option was used previously), none of the existing users are able to log in. Additionally, it's impossible to change or recover their passwords. I cleared the old database and proceeded with the import. However, after doing so, the system user admin@cloudron.local, who shouldn't be present, remained in the system after the import.

@vadim said in Import 5gb sql file into MYSQL database:

However, after doing so, the system user admin@cloudron.local, who shouldn't be present, remained in the system after the import.

I guess you found this out by checking the users table in traccar ? In Cloudron package, the table name is

tc_users. What is the table name of users in your mysql dump?