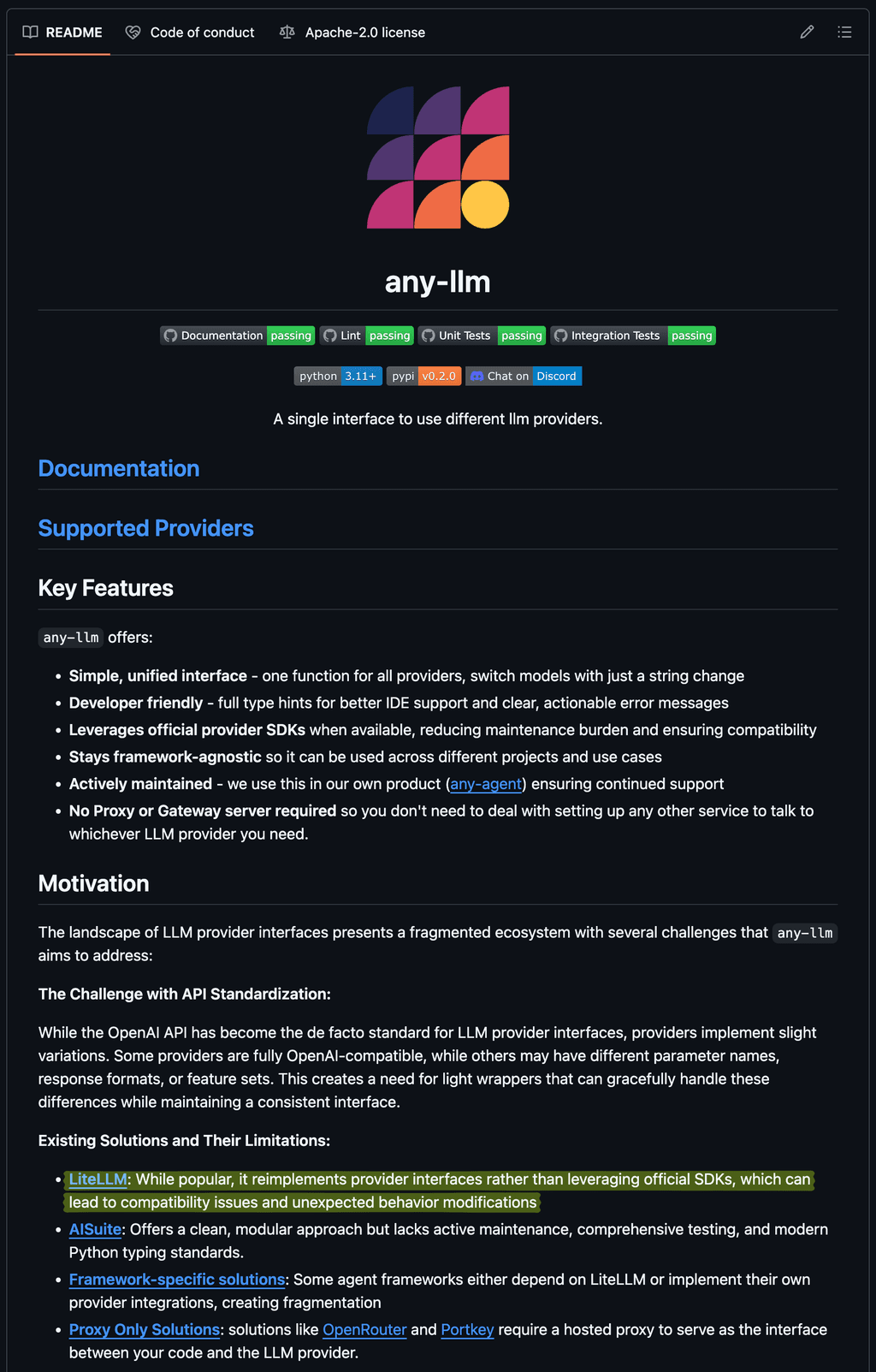

LiteLLM - Self-Hosted OpenRouter Alternative: a proxy that provides access to OpenAI, Bedrock, Anthropic, Gemini, etc.


- LiteLLM: LLM Gateway on Cloudron - provides model access, logging and usage tracking across 100+ LLMs, all in the OpenAI format.

- Main Page: https://www.litellm.ai/

- Git: https://github.com/BerriAI/litellm/

- Licence: MIT (the `enterprise/` directory in the repository is licensed separately)

- Docker: Yes, Docker Packages

- Demo: 7 Day Enterprise Trial

- Discussion/Community: Discord

- Summary: LiteLLM exposes an OpenAI compatible API that proxies requests to other LLM API services. This provides a standardized API to interact with both open-source and commercial LLMs.

This can be a self-hosted alternative to OpenRouter.

Any application, including OpenWebUI (on Cloudron), can use this OpenAI-compatible API endpoint to access over 100 integrations from OpenAI, Amazon Bedrock, Anthropic models, Google Gemini models, Grok, Deepseek, etc.

- 100+ LLM Provider Integrations

- Langfuse, Langsmith, OTEL Logging

- Virtual Keys, Budgets, Teams

- Load Balancing, RPM/TPM limits

- LLM Guardrails

- Notes: This would make any of the AI apps on Cloudron or outside it (e.g. Khoj) much more powerful, bridge the divide between models as they are released, and give us easy access through a single API.

This is a superpower, AI on steroids, letting us leverage the comparative advantage of each AI model (e.g. Claude for programming, Deepseek for low cost, Bedrock for a longer context window and memory, etc.).

- Alternative to / Libhunt link: OpenRouter.ai

- Installation Instructions: https://docs.litellm.ai/docs/proxy/docker_quick_start
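To illustrate what "OpenAI-compatible endpoint" means in practice, here is a minimal sketch of a client talking to a LiteLLM proxy using only the Python standard library. The base URL, the API key and the model name are placeholders I made up for illustration (assumptions, not values from this thread), and the `/v1/chat/completions` path is assumed to follow the usual OpenAI convention; check them against your own proxy configuration.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt):
    """Build an OpenAI-style chat completion request aimed at the proxy."""
    payload = {
        "model": model,  # whatever model name the proxy is configured to route
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(base_url, api_key, model, prompt):
    """Send the request and return the first reply text (needs a running proxy)."""
    req = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical values: replace with your own proxy URL, key and model name.
    print(ask("http://localhost:4000", "sk-example", "example-model", "Hello!"))
```

The point of the sketch is that only the `model` string changes when switching providers; the request shape stays the same, which is why apps like OpenWebUI can be pointed at the proxy without modification.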


LiteLLM was earlier integrated into OpenWebUI and was marvellous.

It was removed recently, and I posted about it here: https://forum.cloudron.io/topic/11957/litellm-removed-from-openwebui-requires-own-separate-container

Now, having successfully tested the Docker image, I wish it could be on Cloudron and act as a proxy for all of our AI applications that require API access to any AI provider.

This would be fantastic - one application to rule them all!

-


Agreed. This would be a great app IMO.

The ideal set & forget self-hosted AI API routing setup and controller for all the other AI apps we will inevitably start to rely on.

I could do with this to make LibreChat easier to set up and to minimise .yml maintenance, too.


-

LiteLLM is quite interesting as it has a Presidio plugin (https://docs.litellm.ai/docs/proxy/guardrails/pii_masking_v2), i.e. it can mask PII (Personally Identifiable Information), PHI (Protected Health Information), and other sensitive data before sending it to an LLM. Think of anonymizing a document before having it analyzed by an LLM. That can make Gemini, Claude, etc. usable under GDPR jurisdiction.
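As a toy illustration of the masking idea (this is not Presidio and not how the LiteLLM guardrail is implemented, just two hand-written regular expressions I am assuming for the example), the text is scrubbed before it ever leaves your machine:

```python
import re

# Deliberately simple patterns for illustration only; real PII detection
# (as done by Presidio) covers far more entity types and edge cases.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text):
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("<EMAIL>", text)
    text = PHONE_RE.sub("<PHONE>", text)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com or +49 30 1234567."
    # Only the masked prompt would be forwarded to the LLM provider.
    print(mask_pii(prompt))
```

With the proxy doing this centrally, every app behind it gets the same masking without each one having to implement it.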

-


@necrevistonnezr Nice find. That is very interesting!
