Help with Wasabi mounting

-

Good afternoon,

What I want to accomplish is as follows:

I need to set up external storage through Wasabi to be the main HDD for my server. Then I want to mount additional buckets for each individual app to use. The backups would go to an additional bucket within Wasabi.

I currently have 4 buckets, but will eventually have one bucket for each app, one bucket for backups of the Cloudron config, and one bucket for a backup of everything (I am still looking into how to accomplish this piece). But for now, let's assume I need 3 out of the 4 buckets from Wasabi mounted into Cloudron for app access (ultimately to keep as little data on the VPS itself as possible).

I have a user with a group already made in Wasabi. In my app (Nextcloud) I set how much of a data quota each user gets. All user data will be stored in a single Wasabi bucket, which will be divided up per user via the quota and Nextcloud's account functionality.

I also have Firefly III and Rainloop, each of which will have its own separate Wasabi bucket (if possible; if they have to be in the same bucket then so be it).

The problem is that Cloudron says you can mount a drive, but I do not see how. The Wasabi docs are not helpful and neither are Cloudron's.

The docs I have found are for backups (https://docs.cloudron.io/backups/) and for storage (https://docs.cloudron.io/storage/). The storage page is the one I was hoping would help, but alas, it doesn't. It says it's possible, then vaguely provides info, and I am back to being lost.

I just found this guide (https://dotlayer.com/how-to-mount-s3-wasabi-digital-ocean-storage-bucket-on-centos-and-ubuntu-using-s3fs/) but the steps included are not working for me (several steps don't work).

Can anyone hold my hand to achieve this?

-

-

@robi so this doesn’t really answer my question.

I want my Wasabi bucket to contain all of my Nextcloud data, so the app directory and everything else is on Wasabi.

I don’t want an external drive connected for remote storage.

If I have 100 users and they are all allowed 50 GB of space, then I would need a minimum of 5 TB of space. My 180 GB SSD in my VPS won’t cut it.

Since Nextcloud natively stores everything in the app directory, I need to move that to a block storage solution.

Under Resources/Storage for Nextcloud is where I’d assume I would add block storage to replace my current data directory. But I don’t see how to do this.
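The sizing in this post works out as follows. This is only a quick shell sketch of the arithmetic; the variable names are illustrative, and 1 TB is taken as 1000 GB.

```shell
#!/bin/sh
# Worst-case storage if every user fills their quota.
users=100
quota_gb=50
ssd_gb=180   # size of the VPS SSD mentioned in the post

total_gb=$((users * quota_gb))
shortfall_gb=$((total_gb - ssd_gb))

echo "needed: ${total_gb} GB (about $((total_gb / 1000)) TB)"
echo "shortfall on the local SSD: ${shortfall_gb} GB"
```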

-

@privsec That's not the place to add it. (NC)

The place to look into adding it is in the container for NC.

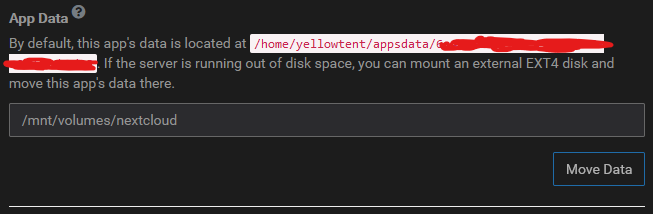

In the CL UI, the NC App Config > Resources > Storage section is where you would move the app storage to.

To make this happen you need to have a filesystem-based mount point.

This is where rclone comes in, which can mount remote filesystems and object stores into the local filesystem.

See it now?
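A minimal sketch of what such an rclone mount could look like. The remote name `wasabi`, the bucket name `nextcloud`, and the mount point path are placeholders, and the remote is assumed to have been created with `rclone config` beforehand. The function only prints the command so it can be reviewed before running it.

```shell
#!/bin/sh
# Placeholder names (assumptions): remote "wasabi", bucket "nextcloud".
REMOTE="wasabi"
BUCKET="nextcloud"
MOUNTPOINT="/mnt/volumes/nextcloud"

# Print the mount command instead of running it.
mount_cmd() {
    # --vfs-cache-mode full : buffer reads and writes in a local cache so
    #                         apps get normal file semantics
    # --allow-other         : let users other than the mounting user see
    #                         the mount (may be needed for app containers)
    # --daemon              : run the mount in the background
    printf 'rclone mount %s:%s %s --vfs-cache-mode full --allow-other --daemon\n' \
        "$1" "$2" "$3"
}

mount_cmd "$REMOTE" "$BUCKET" "$MOUNTPOINT"
```

The mount point directory has to exist before mounting. Whether `--allow-other` is required depends on which user the app runs as, so treat it as something to test rather than a given.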

-

@robi

That is my problem, I believe.

My system, for whatever reason, won't allow any mount points.

I have followed these guides:

https://wasabi-support.zendesk.com/hc/en-us/articles/115001744651-How-do-I-use-S3FS-with-Wasabi-

https://www.interserver.net/tips/kb/mount-s3-bucket-centos-ubuntu-using-s3fs/

Neither of them is working. I'll have to check out rclone, as nothing else is working at the moment.

-

@robi

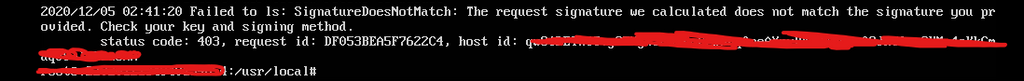

So I have installed rclone, and went through the config step. However, I am getting this

This is the same, if not similar error message I was getting without rclone.What resolves this?

-

@privsec Copy/paste issue? Double-check by connecting via Mountain Duck or a similar desktop S3FS/object storage tool to validate the credentials.
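One way a copy/paste problem can show up is stray whitespace or a carriage return around the key. A small sketch for checking that, with hypothetical helper names; it says nothing about whether the key itself is valid.

```shell
#!/bin/sh
# Strip spaces, tabs, carriage returns and newlines that can sneak in
# when a key is pasted.
clean_key() {
    printf '%s' "$1" | tr -d ' \t\r\n'
}

# Succeeds if the pasted value contained stray whitespace.
has_stray_whitespace() {
    [ "$(clean_key "$1")" != "$1" ]
}

# EXAMPLEKEY123 is a made-up value, not a real credential.
if has_stray_whitespace " EXAMPLEKEY123 "; then
    echo "key contains whitespace; cleaned: $(clean_key " EXAMPLEKEY123 ")"
fi
```

Once the key is clean, `rclone lsd <remote>:` lists the buckets of the configured remote and is a quick check that the credentials are accepted.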

-

@robi I manually typed it in. In case the key is bad or wrong, I just generated a new key, and I will try with Mountain Duck to ensure it's working.

-

@privsec Avoid manually typing it in, as it is very error prone. Sometimes the keys have 'Il' and you can't tell which is the L and which is the i.

-

@robi I disabled SSH, and am using my VPS "ssh" console.

Tbh, I don't know how to re-enable the root user.

I ran this https://github.com/akcryptoguy/vps-harden and ever since I can't get SSH to work. So I am forced to do it all by hand.

-

@robi Thank you.

I have access again for easier troubleshooting and I ended up reinstalling the OS and everything and once it all came back up I was able to add mounts.

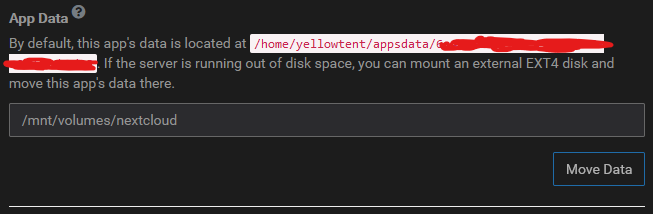

Though its odd. I changed my data directory for nextcloud to /mnt/volumes/nextcloud and not all content is there.

Like if I click files manager it takes me to what Id assume is the cloud drive from wasabi, but there are files there that are not in wasabi

In addition, when I try to view the logs for any application OR for cloudron itself I am getting

-

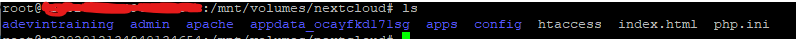

@privsec As an example

That is on my server via CLI

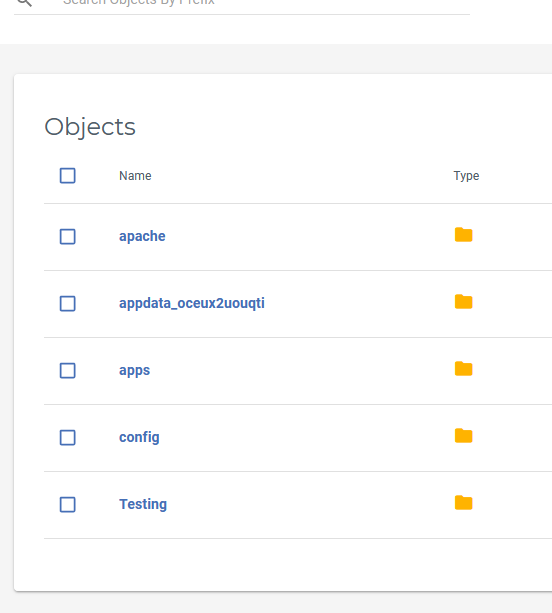

That is on my server via CLIAnd this

That is within the nextcloud bucket for my app.

That is within the nextcloud bucket for my app. -

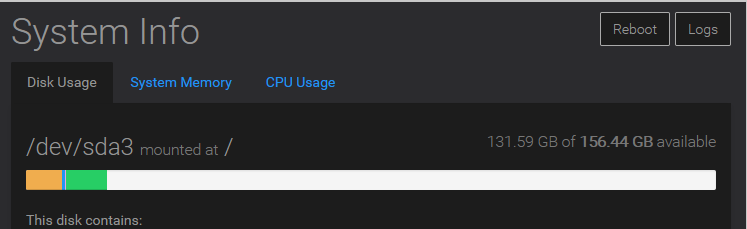

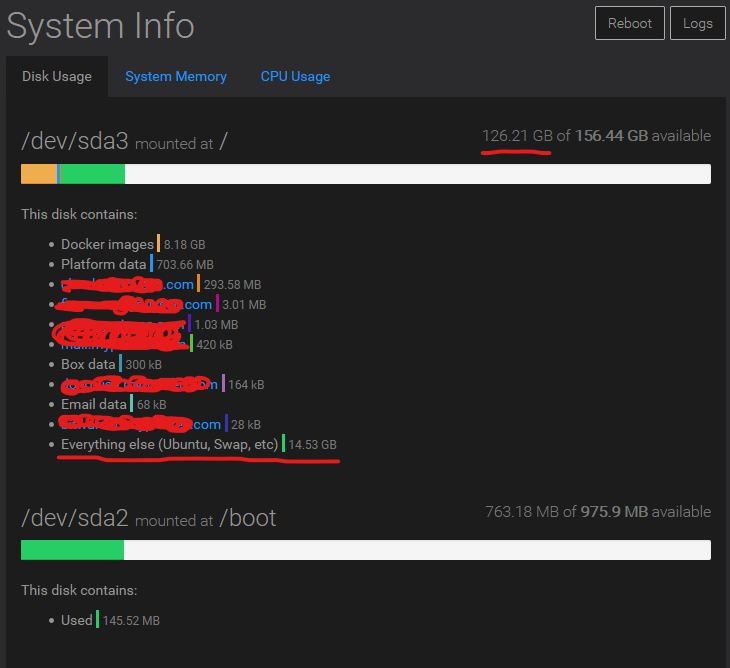

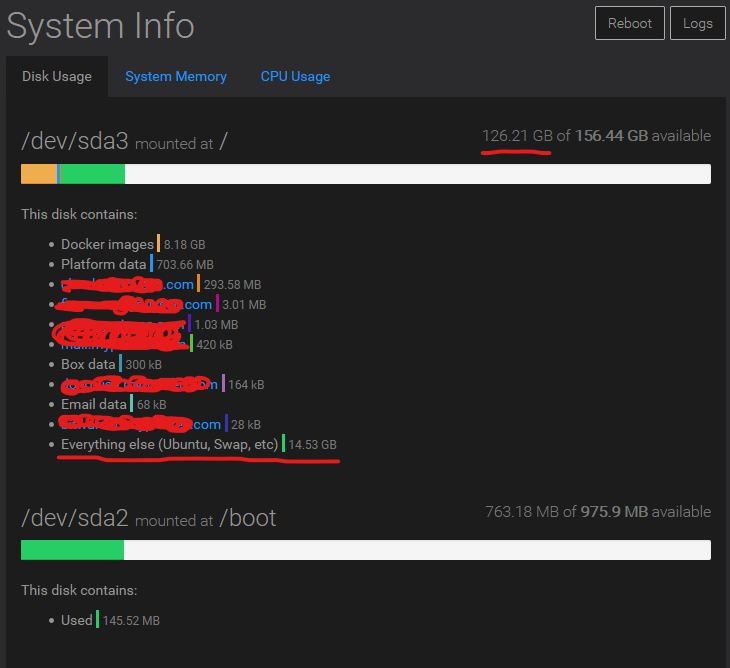

So, I confirmed it's saving data to the SSD of the VPS rather than sending it to the cloud.

The uploads are not complete yet, but it's evident that they are being stored locally rather than in the cloud.

Below is my Nextcloud app storage setting. Nextcloud is the actual rclone-mounted folder.

Am I doing something wrong?

-

@privsec Did I understand correctly that /mnt/volumes/nextcloud is an rclone Wasabi mount and you have also moved the app data directory of Nextcloud to this location? If so, what you have done seems correct. I don't know about the correctness of rclone itself, nor do I know how rclone mounting really works. Does the mount show up in df -h output?

You say there is a discrepancy between what's in the filesystem and what's in Wasabi, so this looks like some rclone issue?
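Besides reading df -h by eye, the mount table can be checked directly. A minimal sketch, assuming a Linux host where /proc/mounts is available; `is_mounted` is a hypothetical helper name, and the optional second argument exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# Succeed if the given path appears as a mount point in a mount table.
# $1: path to check
# $2: mount table file (defaults to /proc/mounts on Linux)
is_mounted() {
    awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

if is_mounted /mnt/volumes/nextcloud; then
    echo "mounted"
else
    echo "not mounted: anything written here lands on the local disk"
fi
```

If the path is not a mount point, it is just an ordinary directory on the root filesystem, which would match the symptom of uploads filling the SSD.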

-

@girish It might be an rclone issue

@robi

I installed rclone by following these steps (https://rclone.org/install/):

1. curl https://rclone.org/install.sh | sudo bash
2. rclone config
3. rclone mount remote:path /path/to/mountpoint --vfs-cache-mode full --daemon

df -h

    root@v2202012134940134654:~# df -h
    Filesystem  Size  Used  Avail  Use%  Mounted on
    udev        3.9G  0     3.9G   0%    /dev
    tmpfs       798M  11M   788M   2%    /run
    /dev/sda3   157G  25G   126G   17%   /
    tmpfs       3.9G  0     3.9G   0%    /dev/shm
    tmpfs       5.0M  0     5.0M   0%    /run/lock
    tmpfs       3.9G  0     3.9G   0%    /sys/fs/cgroup
    /dev/sda2   976M  146M  764M   17%   /boot
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/5e80dc05d8a594a5a0c51aa5602ca124b428e0edba09181759e184497e45a875/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/903a9ff390459afb42b38eaa6b07c0f30f4f45fa413e154e35f766d3bcec7893/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/6af811d56e81cce0af9c14fcb1f0eadb6466a4a4eaa6bfd750eed95d5207c688/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/fbdaaedecbb9dbeeb0212e3dadb0cb32697ad523af7cbaf713a25bd567536edb/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/0c70992b7b8b2c704fc0a080263eb8eb09ae8518fc7054518ce58b75b4ad7e0d/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/f0d4d24114f30306a670e596a11d42b5fde57d30cee607746760dba613331055/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/ed4fd11e18c018a69bde9307d18a20f7380621126baf20e6de96949cb2a0cb19/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/c03dea8bf938e7044b8f9eb746dfbdcce955e69534c2c6a82b86c22c5aaaca5c/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/2eb8958e2598de5291a0895da411df947b97a6f873022b5eab415b6b2f8678b6/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/57f8ec7cc792bcc8e7f8c6a3a24c89cc2ef74bfcf794423a422d75aec0825144/merged
    tmpfs       798M  0     798M   0%    /run/user/0
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/acd1b16c14ff94c587d697ff2a57c1c52df183d034ac7a7067f6a6b251a88977/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/c65f5aa0b3af3f75055a43b3a8d529b0a3fd51d23806538e4c5b8d2173ac4489/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/299711c86c910f26718ad9670963b511d86726d61cb67716d1f8566dbd83addd/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/043dea9ba03986e56e9fbd7f3760faf2dd4538db77839be209c049e74648a0be/merged
    overlay     157G  25G   126G   17%   /var/lib/docker/overlay2/0d3609e2d1f83f95df827fce2598eb713edf161e1a8bf0a01045621e1061ad95/merged

-

@privsec From a casual reading of rclone, it seems that it's a FUSE mount. Isn't the mount point supposed to appear in df -h output? Also, see https://linoxide.com/linux-command/list-mounted-drives-on-linux/ . I think it's not mounted properly (I also don't know if this mount persists across reboots; maybe you rebooted in the middle).
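On the reboot question: a mount started by hand with `--daemon` is not re-created at boot on its own. One common approach is a systemd unit. The sketch below only prints such a unit; the remote `wasabi:nextcloud`, the `/usr/bin/rclone` and `/bin/fusermount` paths, and the chosen flags are assumptions, not something confirmed in this thread.

```shell
#!/bin/sh
# Print a systemd unit that mounts an rclone remote at boot.
# Assumptions: rclone lives at /usr/bin/rclone, fusermount at
# /bin/fusermount, and the remote is configured for the root user.
print_unit() {
    remote_path="$1"
    mountpoint="$2"
    cat <<EOF
[Unit]
Description=rclone mount of ${remote_path}
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/rclone mount ${remote_path} ${mountpoint} --vfs-cache-mode full --allow-other
ExecStop=/bin/fusermount -u ${mountpoint}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

print_unit "wasabi:nextcloud" "/mnt/volumes/nextcloud"
```

The output could be saved as, for example, /etc/systemd/system/rclone-nextcloud.service (a made-up name), followed by `systemctl daemon-reload` and `systemctl enable --now rclone-nextcloud.service`. Note that `--daemon` is left out here because systemd keeps the process in the foreground itself.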