Help with Wasabi mounting

-

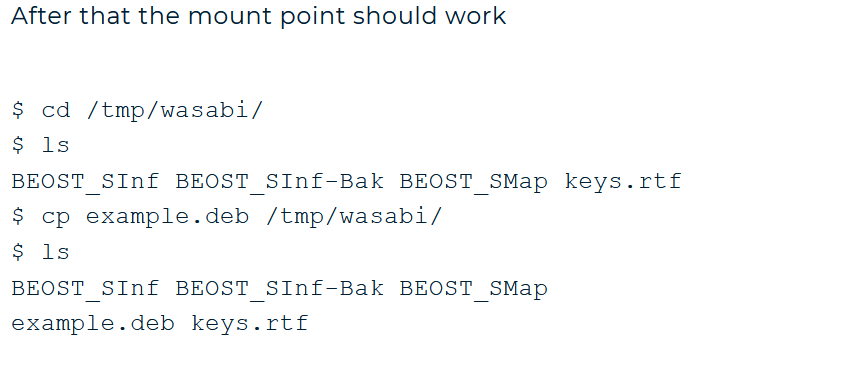

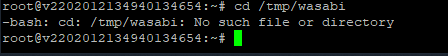

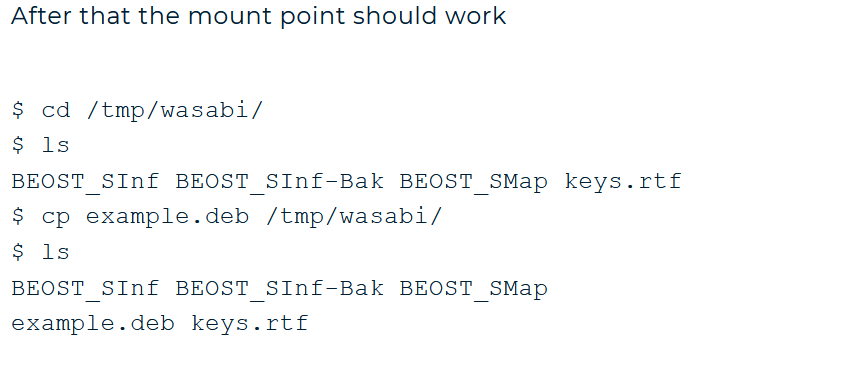

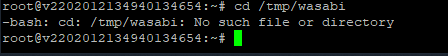

And I can't do the remaining steps either, per

https://wasabi-support.zendesk.com/hc/en-us/articles/115001744651-How-do-I-use-S3FS-with-Wasabi-

When I try to follow these steps, I get this

And probably my final note,

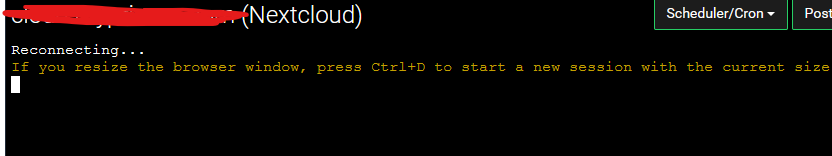

I can't access the console of Nextcloud either. It keeps doing this loop. First this one

And then this one

And then it goes back to

Keep in mind, this is a brand-new, fresh install that I haven't even logged into yet, and my cache is cleared out as well.

-

@privsec Does the app say "Running" in the Cloudron dashboard? The Web Terminal won't work until the app is running.

-

@privsec I will probably give s3fs a try tomorrow and see if I can write a guide for it

(I have never tried s3fs or rclone mount either, so let's see if I am successful).

-

@girish Is there another mounting solution?

It is absolutely pivotal that I get the app directories off my server's drive.

@privsec Is the goal here to have a large amount of external storage (at the right price) for the server? If you are hosted on a VPS, is there an option for you to attach external storage? For example, many VPS providers like time4vps, Hetzner, etc. have quite cheap external disks that can be attached to a server. The reason I ask is that even if s3fs or rclone mount does work, it's probably going to be quite slow. For example, I see posts like https://serverfault.com/questions/396100/s3fs-performance-improvements-or-alternative . We have no experience with how well these mounts work, or whether they work at all. Maybe files go missing, maybe it's unstable; we don't know.

-

So I found some interesting posts:

- https://www.reddit.com/r/aws/comments/dplfoa/why_is_s3fs_such_a_bad_idea/

- https://www.reddit.com/r/aws/comments/a5jdth/what_are_peoples_thoughts_on_using_s3fs_in/

The point of both of them is that S3FS is not a file system and as such file permissions won't work. It's also not consistent (i.e. if you write and read back, it may not be the same). This makes it pretty much unsuitable as an app data directory. What might work is adding it as external storage inside Nextcloud: https://docs.nextcloud.com/server/latest/admin_manual/configuration_files/external_storage_configuration_gui.html. I didn't suggest this earlier because I think you said you didn't want this in your initial post. Can you recheck this? Because I think it will provide what you want. You can just add the Wasabi storage space inside Nextcloud and it will appear as a folder for your users (you can create one bucket for each user or something).
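For the one-bucket-per-user idea, here is a rough sketch of how the external storage mounts could be scripted rather than clicked through in the GUI. It only builds the argument list for Nextcloud's `occ files_external:create` command and does not run anything. The user name, bucket name, credentials, and the `/Wasabi` mount point are placeholders, the `s3.wasabisys.com` hostname and the `use_path_style` setting are assumptions to check against your Wasabi region, and how `occ` is invoked depends on your install.

```python
import shlex

# Assumed Wasabi endpoint host; buckets in other regions use other hostnames.
WASABI_HOST = "s3.wasabisys.com"


def external_storage_command(user, bucket, key, secret, mount_point="/Wasabi"):
    """Build an `occ files_external:create` argument list for one user's bucket.

    All values are placeholders supplied by the caller; nothing is executed.
    """
    options = {
        "bucket": bucket,
        "hostname": WASABI_HOST,
        "use_ssl": "true",
        "use_path_style": "true",  # assumption, verify for your setup
        "key": key,
        "secret": secret,
    }
    # --user restricts the mount to a single Nextcloud user.
    args = ["occ", "files_external:create", f"--user={user}"]
    for name, value in options.items():
        args += ["-c", f"{name}={value}"]
    # Positional arguments: mount point, storage backend, authentication backend.
    args += [mount_point, "amazons3", "amazons3::accesskey"]
    return args


def as_shell(args):
    """Render the argument list as a single, safely quoted shell line."""
    return shlex.join(args)
```

Calling `as_shell(external_storage_command("alice", "alice-files", "ACCESS_KEY", "SECRET_KEY"))` gives a line you can review before running it; `occ files_external:create` also accepts `--dry-run` if you want to check the result first.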

@privsec So, I think it's best not to go down this s3fs route, it won't work reliably.

(If you were banking on s3fs somehow, for refunds etc., not a problem, just reach out to us on support@).

-


There may be another "advanced" way via Minio.

Minio can be used as a storage gateway.

See if you can connect Minio to Wasabi in gateway mode. If you can, then see if you can mount the local Minio instance in Linux. Even if that uses s3fs, the endpoint would be local.

-

@privsec We tried this once and it was unbearably slow. Personally, I'd look for ways to reduce the Nextcloud storage needs and use a natively mounted drive from the host, and just offer an archive Wasabi S3 bucket where people can move things they no longer need regular access to, which saves space on the main NC.

-

@marcusquinn Cyber Duck/Mountain Duck is a very good platform for making Wasabi/S3 storage accessible to users if they need it.

-

@marcusquinn

I cannot get a mounted drive on my host to actually work. I have tried both rclone and S3FS; both gave me the same result: no errors, but no syncing. I have decided (at least for now, until I find a better solution) to give each user on Nextcloud a Wasabi bucket as an external storage drive, and to restrict access to that bucket to that user only.
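On the "no errors, but no syncing" symptom: one possible cause (an assumption, not a diagnosis) is that the mount never actually attached, so writes land on the local disk underneath the mount directory. A minimal sketch of a check follows; it reports whether a directory is a mount point and whether a small probe file reads back intact. The function name is made up for illustration.

```python
import os
import tempfile


def check_mount(path):
    """Report whether `path` is a mount point and whether a write reads back intact."""
    result = {"is_mount": os.path.ismount(path), "round_trip_ok": False}
    payload = os.urandom(1024)
    # Write a small probe file into the directory, read it back, then clean up.
    fd, probe = tempfile.mkstemp(dir=path, prefix="mount-probe-")
    try:
        with os.fdopen(fd, "wb") as handle:
            handle.write(payload)
        with open(probe, "rb") as handle:
            result["round_trip_ok"] = handle.read() == payload
    finally:
        os.remove(probe)
    return result
```

Run it against the directory you mounted to, for example `check_mount("/mnt/wasabi")` (a hypothetical path). If `is_mount` comes back `False`, the s3fs or rclone process is not mounted there, and anything copied in is only being stored locally.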

I am now trying to figure out how to remove the built-in Nextcloud files so that only the external storage folder is listed, with the Nextcloud folders/files living inside the Wasabi drive.

Regarding Cyber Duck/Mountain Duck, what's the difference between them and the external storage connection in Nextcloud? Is there a performance difference?

-

@privsec Sorry, I mean just a mounted local host storage, not mounting external S3, which will have such high latency that it makes NC painful to use.

-

@privsec I realise host local storage isn't cheap, and Wasabi is cheap, which is why we once tried doing what you are doing. Maybe you'll have a different experience, but we just found the juice wasn't worth the squeeze, especially with large numbers of small files.

It might work for something like video storage, where there are fewer files, but I just don't fancy the cost of a slow user experience and would always opt for speed over price, since time is so much more expensive if users spend too long waiting on their file services to show results.
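To put a rough number on why many small files hurt, here is a back-of-the-envelope sketch. It assumes each file costs at least one sequential network round trip; the file count and latencies are illustrative guesses, not measurements, and real clients can parallelise and cache.

```python
def access_time_seconds(file_count, round_trip_ms, requests_per_file=1):
    """Estimate wall-clock time when each file costs sequential round trips."""
    return file_count * requests_per_file * round_trip_ms / 1000.0


# Hypothetical figures: 10,000 small files, 50 ms per request to remote
# object storage versus 0.1 ms for a locally attached disk.
remote = access_time_seconds(10_000, 50)
local = access_time_seconds(10_000, 0.1)
print(f"remote: {remote:.0f} s, local: {local:.0f} s")
```

With those assumed numbers the remote case takes minutes where the local disk takes about a second. A handful of large video files would pay the per-request cost only a few times, which is why that case may be tolerable.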

-

@privsec Just reading this, not sure if you've read it? I'm not saying it can't be done, just that we gave up. Maybe this helps? I'm still reading: https://autoize.com/s3-compatible-storage-for-nextcloud/

-

From reading the above article, it sounds like Cloudron might need two versions of Nextcloud packaged, or at least an install-time option to set it up for S3 storage.

Probably needs one of the @appdev team to have a read and confirm or deny.

I agree it would be interesting and our past experience might have been what this article above solves.

Let's get more opinions on this, someone else might also have a need and interest and be willing to try themselves too.

-

@marcusquinn I'll have to give the article above a read.

Anything I can do to keep launch costs low while keeping user experience high is worth pursuing.

-

@marcusquinn said in Help with Wasabi mounting:

From reading the above article, it sounds like Cloudron might need two versions of Nextcloud packaged, or at least an install-time option to set it up for S3 storage

IMHO no second app is necessary. The drawback, though, is that the S3 backend can only be enabled before any data is added to the app.

I am, however, sceptical whether adding network latency for file access is such a smart idea, especially for a PHP application.