Backup size growing

-

@christiaan IIRC, there is a tricky setup in Backblaze where "delete" doesn't actually delete; B2 keeps the file around for a while, and there is some setting to disable this behavior. I recall someone else ran into this as well (maybe it was @marcusquinn)

@girish @christiaan Yes, that's part of their business model I guess.

Naughty of them not to offer the setting when creating the Bucket, but I guess that's providing significant profits from the amount of times I've seen otherwise smart and prudent people caught out by it.

It's under "Lifecycle Settings": you need to select "Keep only the last version of the file". Given that the backups are already versioned, there are no other versions you would need.

I also recommend that if your host takes daily snapshots and you have a lot of data, you probably only need weekly offsite backups on your quietest day of the week.
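
For anyone who manages buckets through the B2 API rather than the web UI, here is a rough sketch of what that setting looks like as a lifecycle rule. As far as I understand Backblaze's lifecycle rule format, "Keep only the last version of the file" corresponds to a rule with an empty prefix that deletes hidden versions after one day. The helper name `keep_last_version_rule` is made up for illustration, and how you send the rule to B2 is left out.

```python
import json


def keep_last_version_rule(prefix: str = "") -> dict:
    """Build a B2-style lifecycle rule that purges old (hidden) file versions.

    Hypothetical helper: only the three field names come from B2's rule format.
    """
    return {
        "fileNamePrefix": prefix,           # "" applies the rule to every file in the bucket
        "daysFromUploadingToHiding": None,  # never hide current files automatically
        "daysFromHidingToDeleting": 1,      # delete a version one day after it becomes hidden
    }


# Lifecycle rules are supplied as a list, one entry per file name prefix.
print(json.dumps([keep_last_version_rule()], indent=2))
```

Worth checking the result in the bucket's Lifecycle Settings afterwards, since this is only my reading of the rule format.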

-

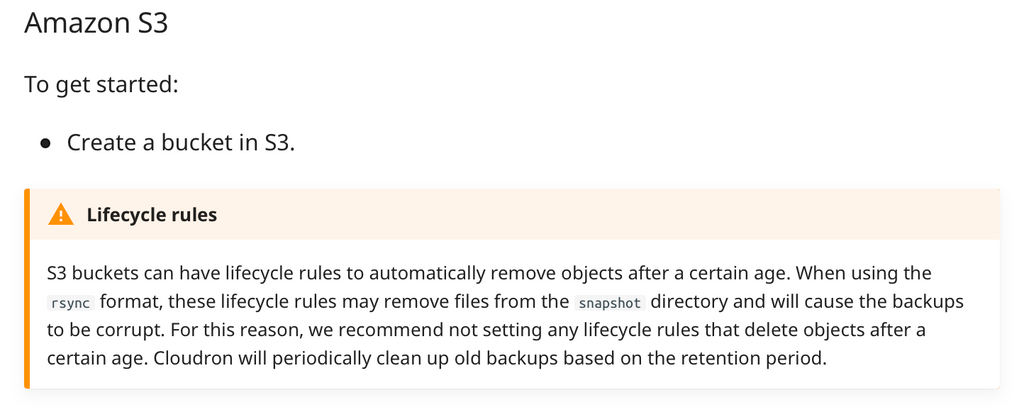

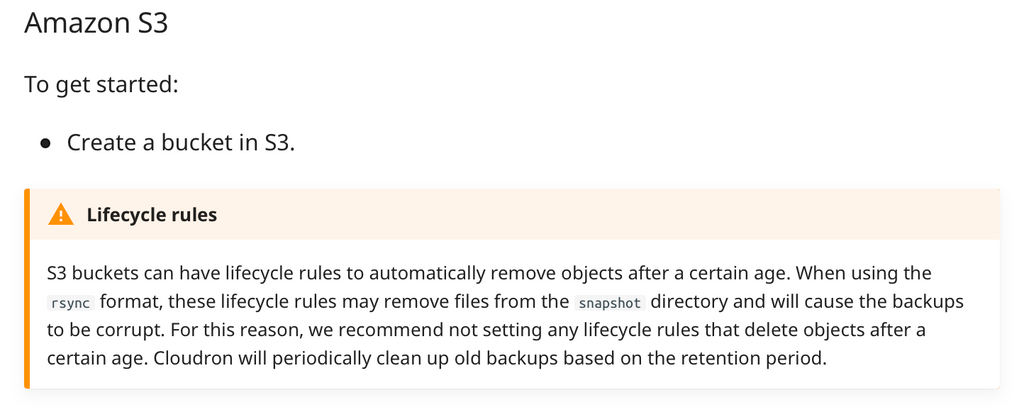

@marcusquinn oh right. I did see that setting but I was following Cloudron instructions not to use it:

https://docs.cloudron.io/backups/

-

@christiaan Ahh, that's even more confusing then, as Backblaze is using the same word, "lifecycle", to mean something different from Amazon's. The devil is in the detail on these platforms, where they mostly rely on users not calculating or reading carefully for their most profitable features.

-

@marcusquinn ha, okay thanks.

I've also just clocked that I was quoting Amazon's instructions instead of Backblaze's! Just to add to the general confusion, lol.

I turned on Backblaze's Lifecycle Settings to use 'Keep only the last version of the file' and the bucket size has reduced, thanks

So this won't corrupt my rsync files then?

-

@christiaan Nope, Cloudron handles the component parts that make up a whole Backup to restore from. You just don't need multiple copies of those parts.

Yeah, that should reduce your storage usage with Backblaze a lot.

You can try a test restore if you're not confident but I'm pretty confident from what you've explained.

As much as I wanted Rsync to work for us, I've moved to a weekly Tarball instead, relying on the daily host VPS snapshots as a low-resource-cost copy of the most recent data, with the Cloudron backups just as a secondary-location backup for redundancy.

-

@christiaan said in Backup size growing:

I turned on Backblaze's Lifecycle Settings to use 'Keep only the last version of the file' and the bucket size has reduced, thanks

Is this a permanent fix? Or does this just delete the occasional backup?

-

@christiaan @marcusquinn thanks for the note. I have updated the B2 docs accordingly - https://docs.cloudron.io/backups/#backblaze-b2

-

@dylightful permanent fix

@girish no prob, thanks

-

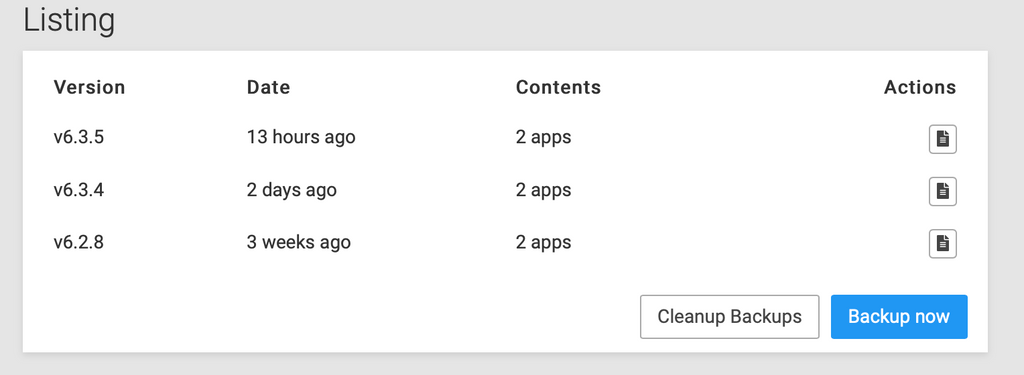

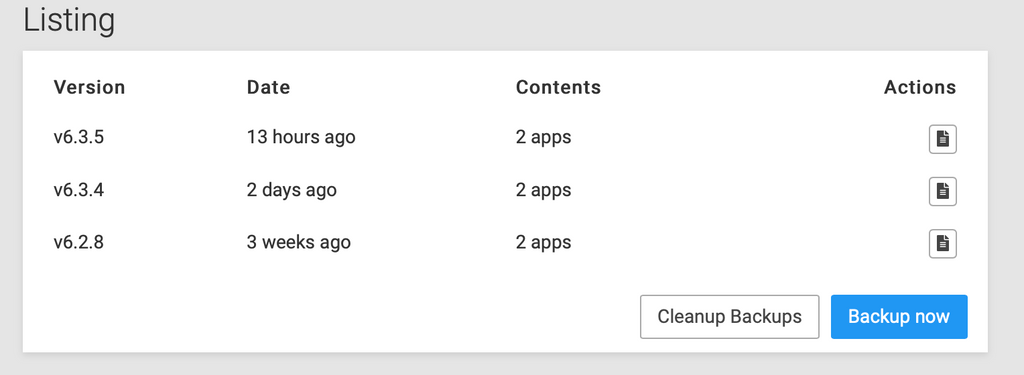

Actually I'm not sure that was a permanent fix. It appears to be storing an additional copy each time there's an upgrade.

-

@christiaan I think it's normal for those to be retained

-

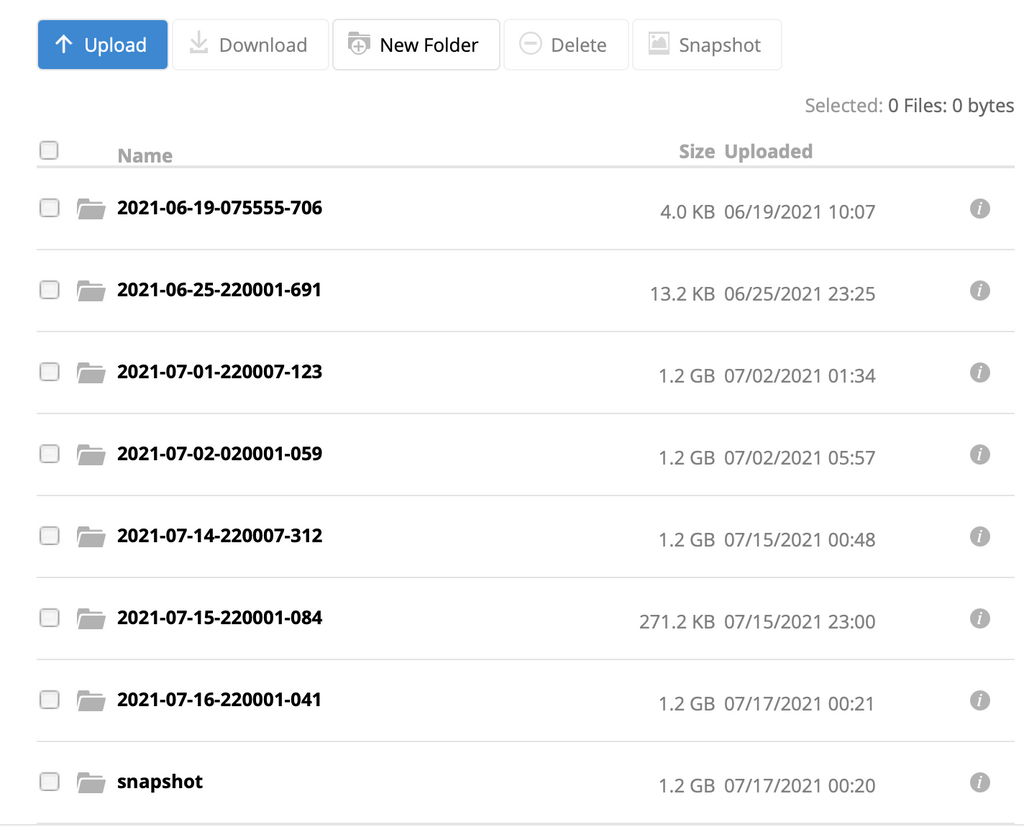

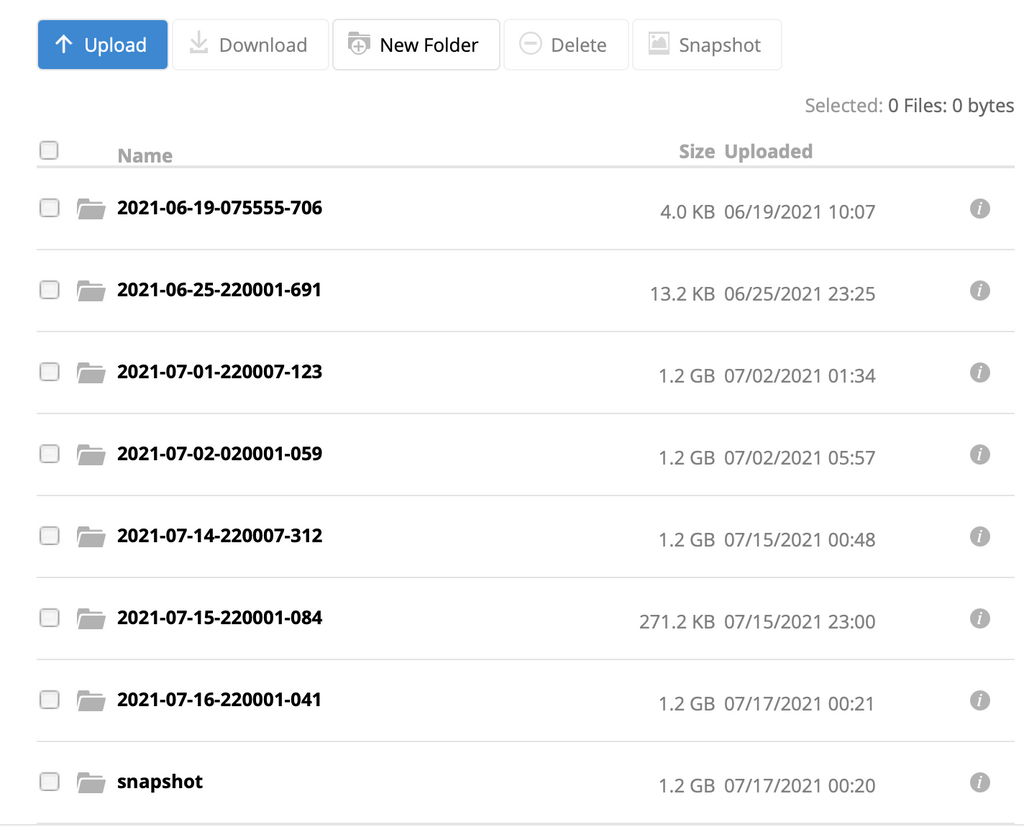

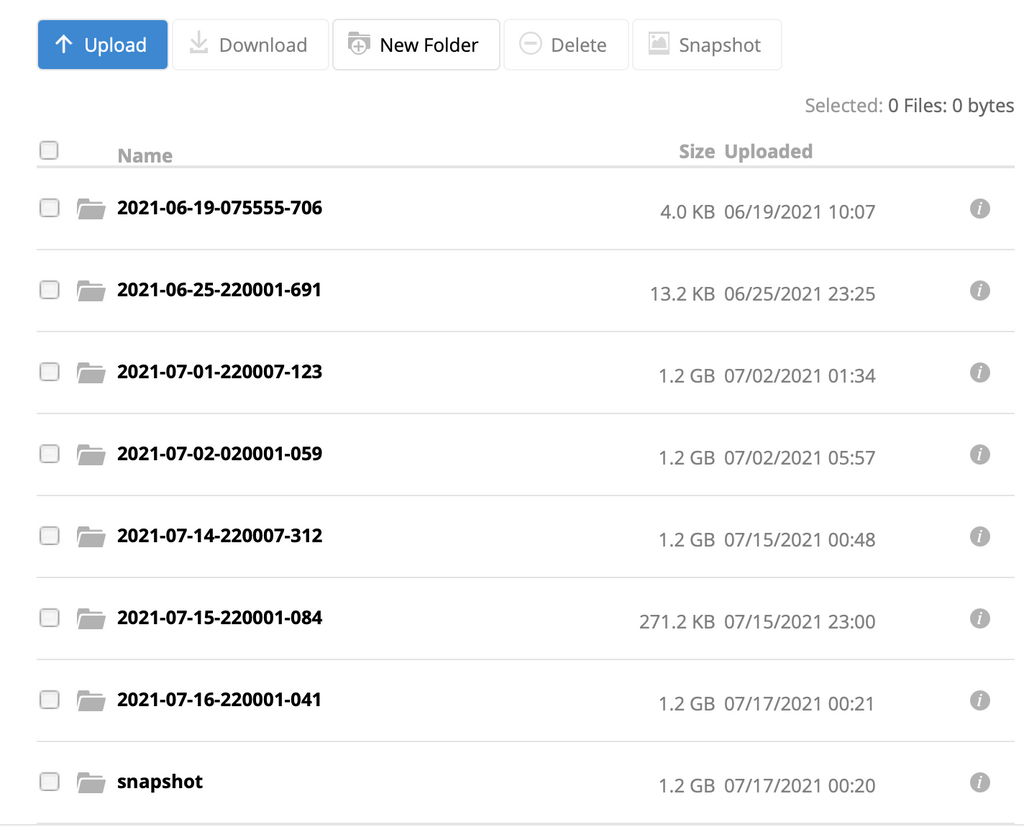

@jdaviescoates how do I remove them safely? Can I manually delete any of these folders without corrupting the backup?

-

@christiaan I'm not sure, but I think you could probably delete all of them apart from the two at the bottom (the most up to date backup and the snapshots) but I wouldn't proceed without getting further clarity from @Staff

-

@christiaan yes, you can safely delete any single timestamped directory. The snapshot directory should be left alone. If you click inside a timestamped directory and only find a single app_xx file, it means that backup was created as part of an app upgrade or backup. If you find many app_ files and a box_ file inside it, it was created as part of a Cloudron upgrade or backup.
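
To make that rule of thumb concrete, here is a minimal sketch that classifies a timestamped directory from a listing of its file names. It only encodes the description above; the function name `classify_backup_dir` and the example file names are hypothetical, and anything that doesn't match either pattern is reported as "unknown" rather than guessed at.

```python
def classify_backup_dir(filenames):
    """Guess what created a timestamped backup directory from its file names.

    Assumes file names start with "app_" or "box_", as described above.
    """
    app_files = [name for name in filenames if name.startswith("app_")]
    box_files = [name for name in filenames if name.startswith("box_")]

    if box_files and app_files:
        # app_ files plus a box_ file: Cloudron upgrade or full backup
        return "cloudron"
    if len(app_files) == 1 and not box_files:
        # a single app_ file: app upgrade or app backup
        return "app"
    return "unknown"


# Example listings (made-up names)
print(classify_backup_dir(["app_1234"]))
print(classify_backup_dir(["app_1234", "app_5678", "box_v1"]))
```

You would still need to list the directory contents yourself (B2 web UI or whatever tool you use), and the snapshot directory should never be passed to anything that deletes.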

-

@girish Great, thanks. Would it be possible for you to automate this in Cloudron (i.e. deleting old versions)?

-

@christiaan they would've been deleted eventually. I think maybe after 3 weeks.

But yeah, it would be nice for there to be a UI where they could be deleted too. I think there might even be another post about that.

-

@jdaviescoates I am always wary about adding that feature because a compromised Cloudron would then mean that backups can also be deleted. With many backup providers, you can create storage credentials with permissions such that objects cannot be deleted. Then again, maybe just like auto-deletion, we can have a button to delete which may or may not work...

-

@jdaviescoates said in Backup size growing:

@christiaan they would've been deleted eventually. I think maybe after 3 weeks.

Oh right. There was one there that was a month old. Is there anywhere in Cloudron documentation that explains this?

-

@christiaan some info here - https://docs.cloudron.io/backups/#retention-policy

-

@girish said in Backup size growing:

@jdaviescoates I am always wary about adding that feature because a compromised cloudron would then mean that backups can also be deleted. In many backup providers, you can create storage credentials with permissions so that objects cannot be deleted. Then again maybe just like auto-deletion, we can have a button to delete which may or may not work...

Why are they not simply deleted based on the retention policy? Is this for wiggle room if an upgrade goes wrong?

My retention policy is 2 days, for instance, but one of these old version backups was a month old.
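
To show what I'm comparing, here is a small sketch of the check I'm doing by eye: flag any timestamped directory whose creation time is older than the retention window. This is not a claim about how Cloudron applies its retention policy internally; the function name, directory names and dates are all made up for illustration.

```python
from datetime import datetime, timedelta


def older_than_retention(dirs, retention_days, now):
    """Return names of backup directories created before the retention cutoff.

    `dirs` maps a directory name to its creation time (hypothetical input;
    how you obtain those timestamps depends on your storage provider).
    """
    cutoff = now - timedelta(days=retention_days)
    return sorted(name for name, created in dirs.items() if created < cutoff)


# Made-up example: one directory a month old, one from yesterday
now = datetime(2021, 3, 1)
dirs = {
    "old-upgrade-backup": datetime(2021, 1, 30),
    "recent-backup": datetime(2021, 2, 28),
}
print(older_than_retention(dirs, retention_days=2, now=now))
```

With a 2-day retention policy I would have expected anything this flags to be gone already, which is why the month-old one surprised me.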

-

@girish said in Backup size growing:

@christiaan some info here - https://docs.cloudron.io/backups/#retention-policy

Thanks girish. This doesn't seem to cover the issue I am seeing though, which is that backups of old versions are not being deleted as per my retention policy.