Amazing app to help with backups and saving space!

-

Some recent posts have dealt with issues caused by running out of space. I too had this challenge when I first started, and it was constraining, to say the least. I eventually moved up to larger VPSes (my current one has 708GB), which allowed me to try different apps. However, apps like Peertube, Mastodon, and Cubby all start taking up lots of space, more than before.

To help with this I started using the free tier of object storage offered by Scaleway, who gives you up to 75GB free! Amazing! But you know what, even with that I started hitting the limit after a few months.

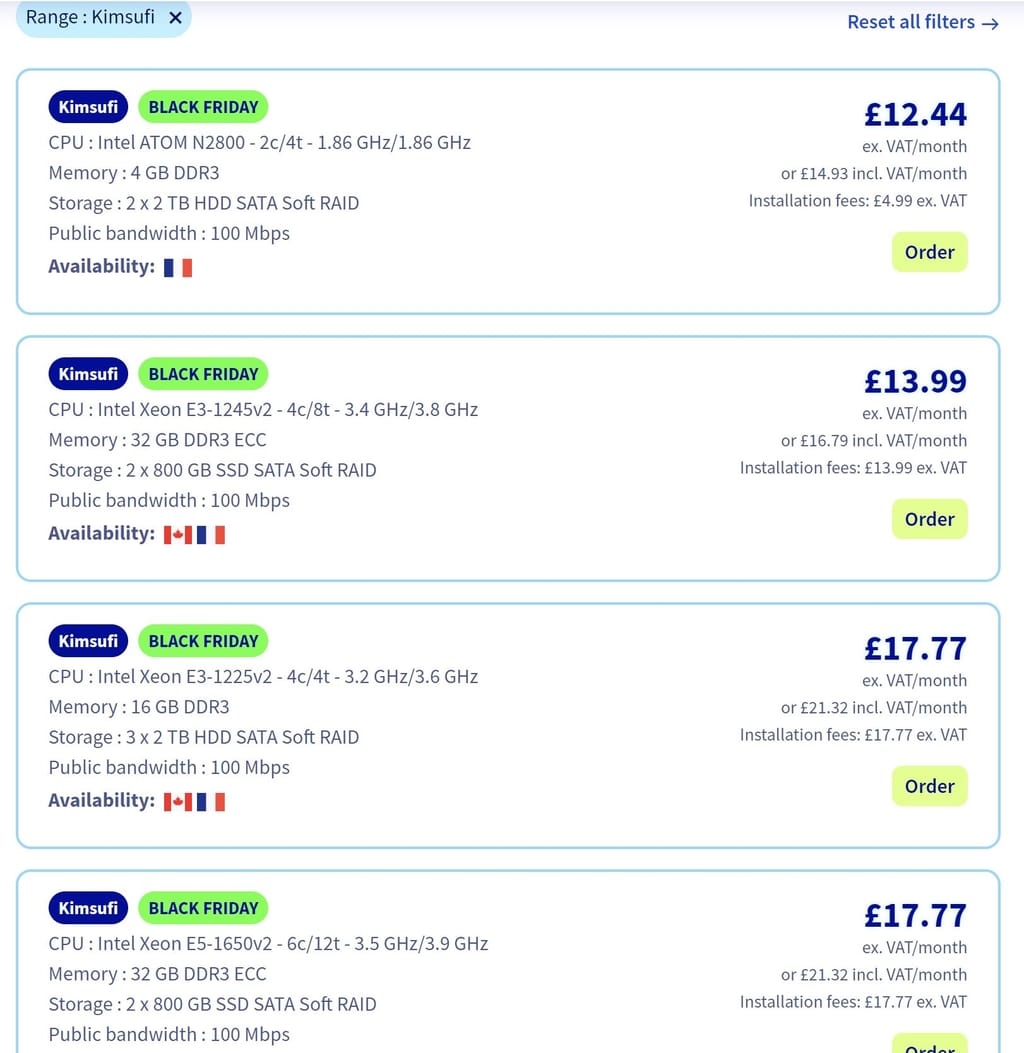

So, now that I was using Object Storage, I started looking for other ways it might help. My search led me to Minio: self-hosted object storage!? Cool. I also frequent lowendtalk and serverhunter, which eventually led me to snag a KS-1 from OVH/KimSufi. On it I installed Minio, following the Minio instructions, though this site was also super helpful. I lucked out and discovered that my 1TB VPS actually had a 2TB drive. Double cool.

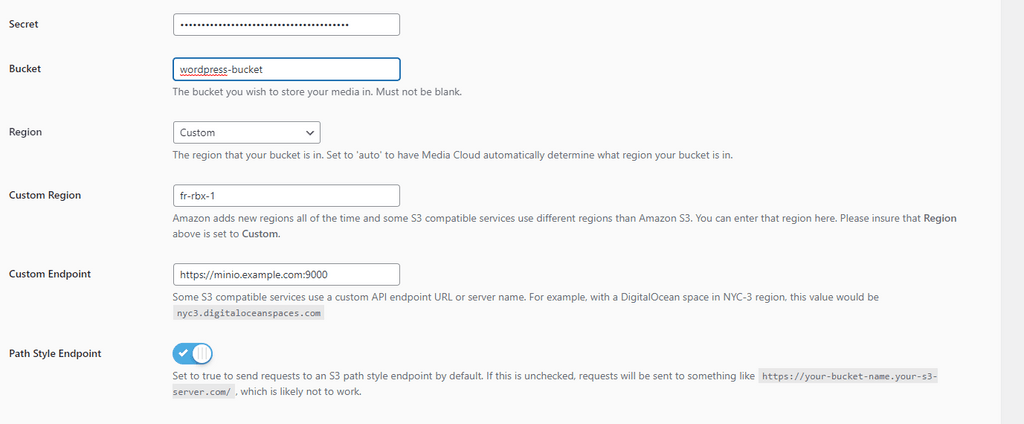

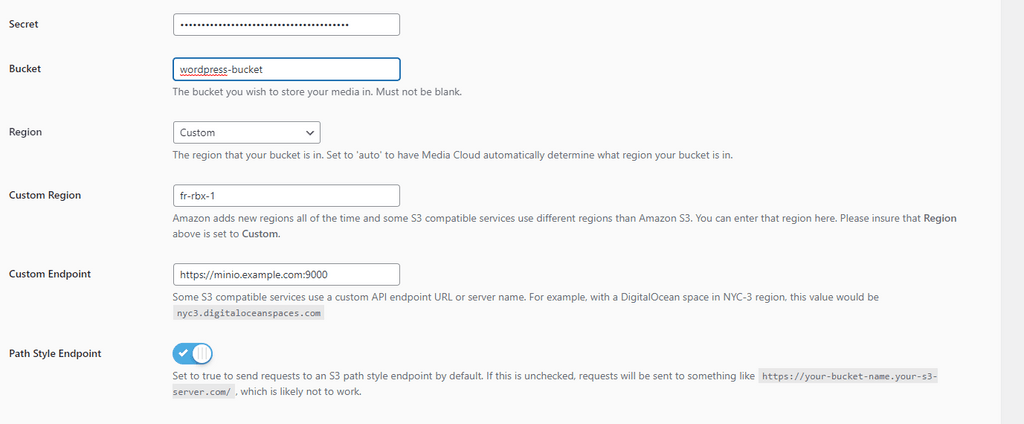

You may have seen some of my posts on this forum asking for help getting the various apps working with Minio. Thanks to all who helped! And let me tell you... I have set up my various WordPress sites (using Media Cloud), Mastodons, Peertubes, and now the new XBackBone app, and OBJECT STORAGE IS LIT.

The best thing, for me, is that I can now back up ALL my Cloudron apps without worrying about how much space they take up, because most of the media is stored in my Minio. I notice this most with Peertube, of course, but also Mastodon: the way I've set mine up, within a few hours it had several GBs of data. Backing all of that up took forever, and in some cases the backups failed, either because of how long they took or because of some file issue. Now the apps back up nicely, and their media is already on the Minio instance.

The most recent coolness is discovering XBackBone. I had no idea what that was, and I had to google what "ShareX" was... and holy smokes, what a lifesaver. I take tons of screenshots and sometimes lose track of where they are. But now ShareX uploads them to XBackBone, which I've set up to use my Minio, so the screenshots go straight to its bucket. Amazing!

I'm assuming that the Minio offered by Cloudron will be just as useful. Give it a try! So grab one of those KS-1s (it took me months), install Cloudron on it, and you're set!

-

@scooke

I run a ~8TB server with a Cloudron running only Minio apps (32 of them) to provide all the backup space I need for my other Cloudrons. ("Only" as in it runs nothing but Minios, not that 32 is a small number.)

The only problem I've had so far was load spikes when all the Cloudron servers tried to back up at the same time, so I poor-man load-balanced that by shifting the times when the backups happen.

Using the Minios as storage space for apps, though... hmm, I haven't tried that yet.
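The "poor man's load balancing" above amounts to spreading the backup start times so the servers don't all hit the Minio host at once. A minimal sketch, assuming each Cloudron's backup schedule can be expressed as a five-field cron line; the server names, start hour, and two-hour window are hypothetical, not from this thread:

```python
from datetime import timedelta


def staggered_cron(servers: list[str], start_hour: int = 1,
                   window: timedelta = timedelta(hours=2)) -> dict[str, str]:
    """Spread daily backup start times evenly across a window.

    Returns a cron expression ("minute hour * * *") per server so that no two
    servers begin uploading to the shared Minio host at the same moment.
    """
    if not servers:
        return {}
    step = window / len(servers)  # gap between consecutive start times
    schedule = {}
    for i, name in enumerate(servers):
        offset_minutes = int((step * i).total_seconds() // 60)
        total_minutes = (start_hour * 60 + offset_minutes) % (24 * 60)  # wrap past midnight
        hour, minute = divmod(total_minutes, 60)
        schedule[name] = f"{minute} {hour} * * *"
    return schedule


if __name__ == "__main__":
    for server, cron in staggered_cron(["cloudron-a", "cloudron-b", "cloudron-c", "cloudron-d"]).items():
        print(server, cron)
```

This only spaces out the start times; a backup that runs longer than its slot can still overlap the next one, so the window may need widening for large instances.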

-

@scooke did you document your Mastodon and Peertubes minio setup? Would like to er...steal that

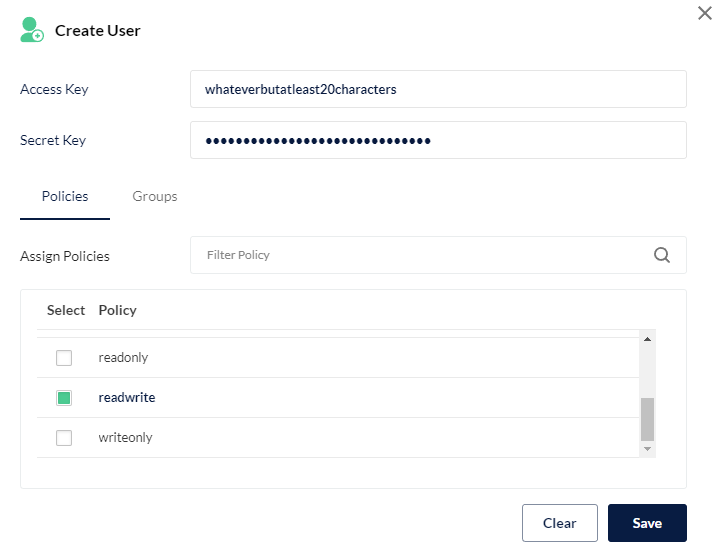

@doodlemania2 I'll do what I can, but first, a small detail which may blow your mind as it did mine. It's not the buckets that get the Access and Secret Keys... it's the User (or Identity)!!:

```
# Trying to use my own Minio as an S3-enabled file system in Mastodon
S3_ENABLED=true
S3_BUCKET=mastodon-bucket
AWS_ACCESS_KEY_ID=whateverbutatleast20characters
AWS_SECRET_ACCESS_KEY=whateverbutatleast40characters
S3_REGION=fr-rbx-1
S3_PROTOCOL=https
S3_HOSTNAME=minio.example.com:9000
```

The Access Key and Secret Access Key lengths are based on the length of my existing AWS S3 credentials. However, Minio lets you use ANY length, short or long. In fact, what was confusing at first is that the Access Key is referred to as "username" and the Secret Access Key as "password". I read elsewhere that this is likely an attempt not to be beholden to AWS S3's naming conventions, since object storage is not a proprietary Amazon thing. So I stuck with 20 characters, but used the first 10 or so to write the name of whatever the bucket was going to serve, to help me keep track of them all. So one Access Key might be racknerd2H8e0MSkO31C, with a properly random 40-character password/Secret Key (my example above does not follow this convention).
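The naming convention above (a 20-character Access Key whose first characters name the bucket it serves, plus a fully random 40-character Secret Key) can be generated with Python's standard `secrets` module. A minimal sketch; the function name and the letters-and-digits alphabet are assumptions, and the lengths simply mirror the AWS-style lengths used in this post:

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits  # alphanumeric only, safe to paste into env files


def make_credentials(label: str, access_len: int = 20, secret_len: int = 40) -> tuple[str, str]:
    """Return (access_key, secret_key) for one Minio user.

    The access key starts with a human-readable label (e.g. the bucket it serves,
    capped at half the key length) and is padded with random characters.
    """
    label = "".join(c for c in label.lower() if c.isalnum())[: access_len // 2]
    padding = "".join(secrets.choice(ALPHABET) for _ in range(access_len - len(label)))
    secret = "".join(secrets.choice(ALPHABET) for _ in range(secret_len))
    return label + padding, secret


if __name__ == "__main__":
    access, secret = make_credentials("racknerd")
    print(f"AWS_ACCESS_KEY_ID={access}")
    print(f"AWS_SECRET_ACCESS_KEY={secret}")
```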

As far as I can tell (just checked) there was nothing I needed to do in the GUI of Mastodon. I just entered the details in the env.production.

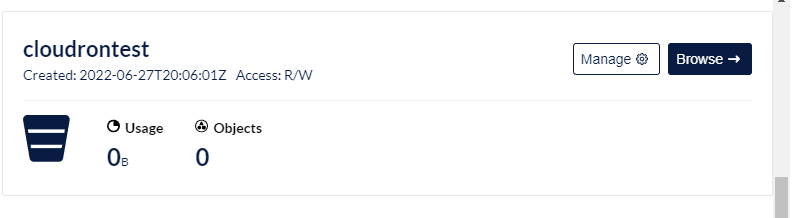

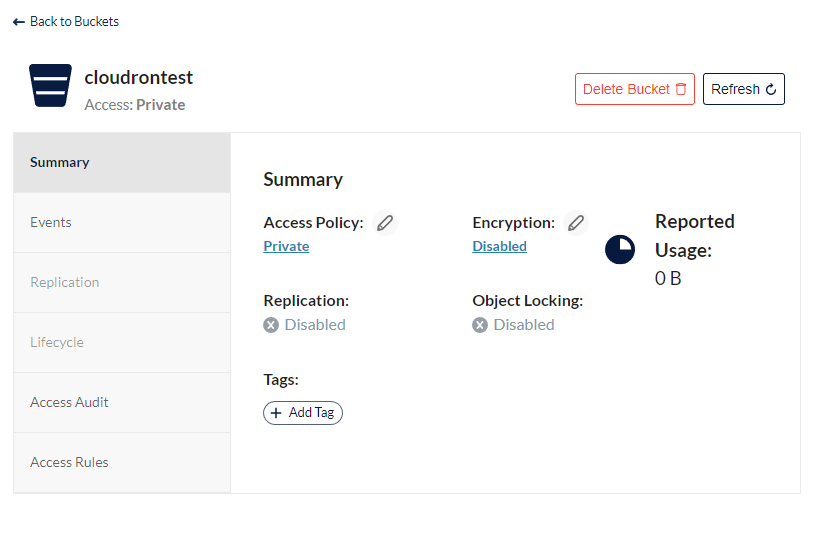

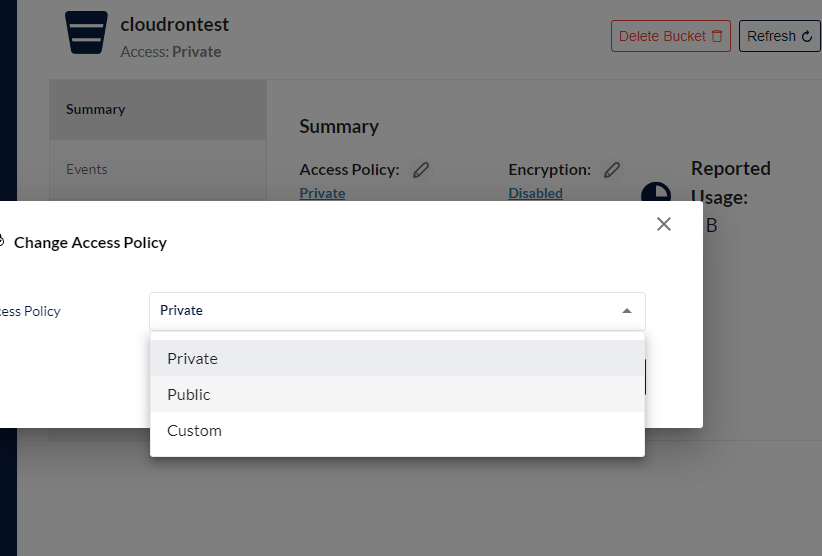

On the Minio side (remember, mine is non-Cloudron, but I don't think there should be any difference), a new bucket was automatically set to PRIVATE. I didn't think it would make a difference, but it wouldn't connect, so I had to go back to the main Dashboard, click "Manage" for the bucket in question, and set it to Public.

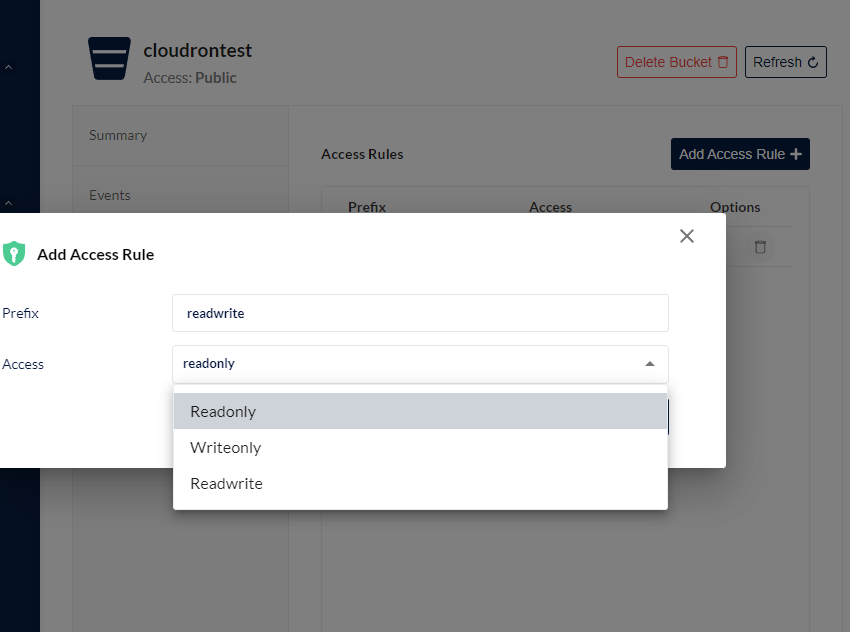

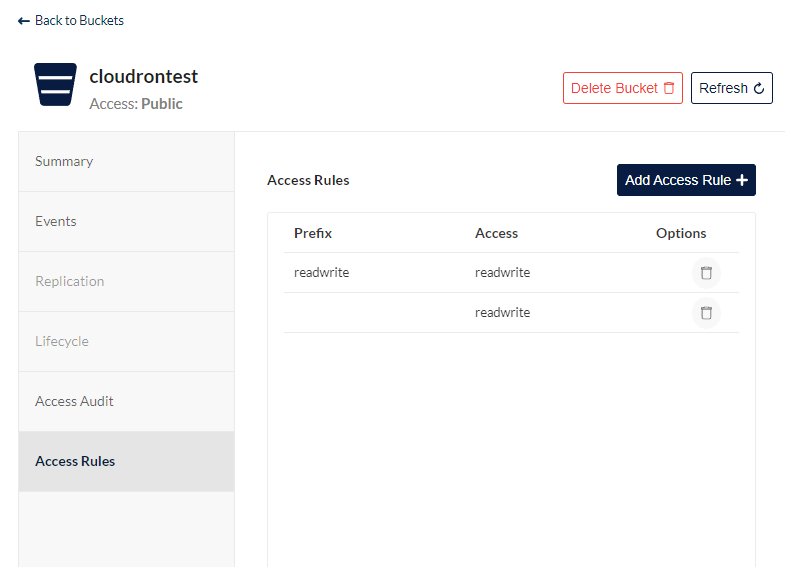

Then, I had to click Access Rules, and even though there was already a "readwrite" there, it wouldn't connect until I added a second one, but with a Prefix.

THEN, it connected. I don't know why. I then created a new User using the 20-character Access Key and 40-character Secret Key, and chose the `readwrite` policy.

NOTE: I personally am astounded, but apparently Minio's system means every bucket in your Minio with `readwrite` permissions can be read by any Identity/User with the same `readwrite` policy. You can't assign ONE bucket to just ONE Identity/User, nor can you limit an Identity/User to a specific bucket only. Now obviously there must be some way; why else have `readonly` and `writeonly` policies? But as I said, my Mastodon would only work if the bucket was set to Public and `readwrite`, with an Identity with a `readwrite` policy. If you can find a different solution, please post here and let us all know. But all I've found so far, on the Minio forums themselves, are people writing in to ask this very question, only to eventually be told what I just shared: any bucket that is Public and `readwrite` can be read by any Identity (on that specific Minio instance, of course, not just any Minio instance anywhere!). So keep that in mind if you have multiple Users on your Minio and they have access to the Dashboard. Your workaround is to NOT give them access to the Dashboard, and to give Buckets complicated names with similarly complicated Access and Secret Keys. Each user then has the potential to access other buckets, but they won't if they can't easily figure out the complicatedly named buckets. Just supply them with their details.
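For what it's worth, MinIO's access control follows the AWS IAM policy language, so a custom policy can in principle scope one user to one bucket instead of the built-in `readwrite` policy, and a bucket policy can grant anonymous *download-only* access instead of making the whole bucket Public. (Mastodon links media straight to the bucket, which is likely why anonymous reads were needed at all.) The sketch below only builds the JSON documents; it is untested against the setups in this thread, the bucket name is the example one from above, and attaching the result is done through the Console or MinIO's `mc admin policy` subcommands, whose exact syntax depends on your `mc` version:

```python
import json


def user_policy_for_bucket(bucket: str) -> dict:
    """IAM-style policy letting a user list and read/write objects in one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object-level actions apply to every key inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


def anonymous_read_policy(bucket: str) -> dict:
    """Bucket policy allowing anyone to download objects, but not upload or delete them."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": ["*"]},
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(user_policy_for_bucket("mastodon-bucket"), indent=2))
    print(json.dumps(anonymous_read_policy("mastodon-bucket"), indent=2))
```

Some apps may need further actions (for example around multipart uploads); if uploads fail with a scoped policy, the object-level action list is the first thing to revisit.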

-

Here is what I have working for my Peertube:

```yaml
storage:
  # For some reason you don't need to remove anything here
  tmp: '/app/data/storage/tmp/' # Use to download data (imports etc), store uploaded files before processing...
  videos: '/app/data/storage/videos/'
  streaming_playlists: '/app/data/storage/streaming-playlists/'
  avatars: '/app/data/storage/avatars/'
  redundancy: '/app/data/storage/redundancy/'
  logs: '/app/data/storage/logs/'
  previews: '/app/data/storage/previews/'
  thumbnails: '/app/data/storage/thumbnails/'
  torrents: '/app/data/storage/torrents/'
  captions: '/app/data/storage/captions/'
  cache: '/app/data/storage/cache/'
  plugins: '/app/data/storage/plugins/'
  client_overrides: '/app/data/storage/client-overrides/'
  bin: /app/data/storage/bin/

# This is me trying to use my Minio for storage
object_storage:
  enabled: true

  # Without protocol, will default to HTTPS
  endpoint: 'https://minio.example.com:9000' # 's3.amazonaws.com' or 's3.fr-par.scw.cloud' for example

  region: 'fr-rbx-1'

  # Set this ACL on each uploaded object
  upload_acl: 'public'

  credentials:
    access_key_id: 'whateverbutatleast20characters'
    secret_access_key: 'whateverbutatleast40characters'

  # Maximum amount to upload in one request to object storage
  max_upload_part: 2GB

  streaming_playlists:
    bucket_name: 'peertube-bucket'

    # Allows setting all buckets to the same value but with a different prefix
    prefix: 'streaming-playlists/' # Example: 'streaming-playlists:'

    # Base url for object URL generation, scheme and host will be replaced by this URL
    # Useful when you want to use a CDN/external proxy
    # base_url: 'https://peertube-bucket.minio.example.com' # NOTE: the sub.subdomain is the name of the bucket.

  # Same settings but for webtorrent videos
  videos:
    bucket_name: 'peertube-bucket'
    prefix: 'videos/'
    # base_url: 'http://peertube-bucket.minio.example.com/'
```

I've left in some of the commented-out lines just for reference. It didn't work for me with them, so I commented them out.

The Bucket name is important, and just as important are the "prefix" entries, because these become sub-folders in the Bucket. So my peertube-bucket has two folders, `streaming-playlists` and `videos`. As in the Mastodon config above, the Bucket needed to be Public, with the extra `readwrite` permission, plus an Identity/User with a `readwrite` policy, to work.

Even though these Buckets are on the same Minio instance, and the Mastodon and Peertube Identities have `readwrite` policies and thus could access the other buckets, I still opted to make separate Identities for each use... out of habit. And just in case it is possible to limit access using the arcane S3 policy terminology, which I have not yet comprehended.

Like with the Mastodon setup, I didn't have to do anything in the GUI of Peertube, other than enter the info into the production.yaml file of the Peertube app.
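S3 has no real directories; the "sub-folders" are just the shared leading part of each object key. A small sketch of what the `bucket_name`/`prefix` pairs above produce, assuming PeerTube simply prepends the prefix to each file name (the file names here are made up for illustration):

```python
def object_key(prefix: str, filename: str) -> str:
    """Join a configured prefix and a file name into a single S3 object key."""
    return prefix + filename


def top_level_folders(keys: list[str]) -> set[str]:
    """Return the "folders" a bucket browser would show: the text before the first '/'."""
    return {key.split("/", 1)[0] for key in keys if "/" in key}


keys = [
    object_key("streaming-playlists/", "hls/abc/master.m3u8"),  # hypothetical file names
    object_key("videos/", "abc-720.mp4"),
]
print(keys)
print(sorted(top_level_folders(keys)))
```

This is also why the trailing slash matters: with a prefix such as `streaming-playlists:` (the commented example in the config), objects would sit at the bucket root under names starting with `streaming-playlists:` rather than inside a folder.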

-

Here is a bit more info:

For the Minio buckets to work with Mastodon, Peertube, XBackBone, and another restic-based backup solution I set up, you MUST enter new A records for the bucket and domain of your Minio setup. I don't know how it will work on Cloudron, but for my installed-by-hand Minio instance on my KS-1, I had buckets like `peertube-bucket`, `restic-backup`, `mastodon-bucket`, etc. My Minio instance domain is https://minio.example.com. So I needed to make (new) A records like:

```
A minio.example.com ip123
A peertube-bucket.minio.example.com ip123
A restic-backup.minio.example.com ip123
A mastodon-bucket.minio.example.com ip123
```

After those were active, I then had to rerun

```shell
sudo certbot certonly --standalone -d minio.example.com -d peertube-bucket.minio.example.com -d restic-backup.minio.example.com -d mastodon-bucket.minio.example.com -d
```

and then copy the two new certs into the proper place (I imagine the Cloudron-based Minio will do all this automatically?). Certbot calls this "expanding" the certificate, and I actually expanded the certificate three times, rerunning `certbot certonly --standalone` with all previous domains plus whichever was the new one. It didn't work to make a new separate cert, even with its own A record, for, for example, restic-backup.minio.example.com alongside the original minio.example.com cert. Again, I don't understand completely, but access to Minio depended on there being ONE cert with as many additional domains as necessary within it. I tried to just use a wildcard entry for certbot (*.minio.example.com), but it didn't work. I don't recall why, but I had to enter each sub-subdomain fully. Finally, I had to restart Minio.

If you read all my previous posts asking for help, you'll see how I achieved Minio-enlightenment bit by bit, with help from others.
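Since every bucket needs its own A record and its own name on the single certificate, both lists can be generated from one list of buckets. A minimal sketch; `ip123` stands in for the server's IP exactly as in the post, and the output is plain text to paste into a DNS panel and a shell, not something the script runs. One difference from the command above: it adds certbot's `--expand` option, which tells certbot to replace an existing certificate with one covering the larger set of names instead of prompting:

```python
def hostnames(base: str, buckets: list[str]) -> list[str]:
    """The Minio host itself followed by one sub-subdomain per bucket."""
    return [base] + [f"{bucket}.{base}" for bucket in buckets]


def a_records(base: str, buckets: list[str], ip: str) -> list[str]:
    """One A record per hostname, all pointing at the same server."""
    return [f"A {name} {ip}" for name in hostnames(base, buckets)]


def certbot_command(base: str, buckets: list[str]) -> str:
    """A single certbot call covering every name, so they all land on ONE certificate."""
    domains = " ".join(f"-d {name}" for name in hostnames(base, buckets))
    return f"sudo certbot certonly --standalone --expand {domains}"


if __name__ == "__main__":
    buckets = ["peertube-bucket", "restic-backup", "mastodon-bucket"]
    print("\n".join(a_records("minio.example.com", buckets, "ip123")))
    print(certbot_command("minio.example.com", buckets))
```

Adding a bucket later then means appending one name to the list and rerunning both outputs, which matches the "expand with all previous domains plus the new one" routine described above.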

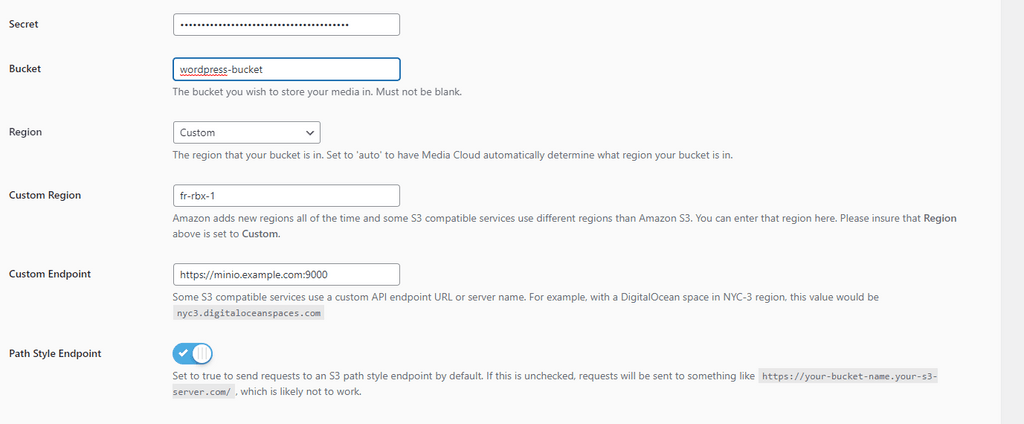

One thing that confused me for a while was that I initially got into Minio with the Media Cloud plugin for WordPress. I didn't understand at the time what "Path Style Endpoint" meant, which is the default for Media Cloud. Basically, it worked right away just by entering the Bucket name, region, and Access and Secret Keys. So I didn't realize the need for A records and SSL certs for Minio to be accessible by the other method (whose name I forget!).
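The "other method" is what AWS calls virtual-hosted-style addressing (also called "domain style"): the bucket name moves from the URL path into the hostname, which is exactly why each bucket suddenly needed its own DNS record and certificate name. A sketch of the two URL shapes, using the example host and bucket from this thread and a made-up object key:

```python
from urllib.parse import quote


def path_style_url(endpoint: str, bucket: str, key: str) -> str:
    """Bucket in the path: one hostname (and one cert name) serves every bucket."""
    return f"https://{endpoint}/{bucket}/{quote(key)}"


def virtual_hosted_url(endpoint: str, bucket: str, key: str) -> str:
    """Bucket in the hostname: each bucket needs DNS and a TLS name of its own."""
    return f"https://{bucket}.{endpoint}/{quote(key)}"


print(path_style_url("minio.example.com", "mastodon-bucket", "media/cat.png"))
print(virtual_hosted_url("minio.example.com", "mastodon-bucket", "media/cat.png"))
```

On a self-hosted MinIO, answering the virtual-hosted form generally also requires telling the server its base domain via the `MINIO_DOMAIN` setting.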

I should probably rewrite all of this, make it more succinct, but for now, voila!

-

Everyone give it up for @scooke - that is a freaking AMAZING set of posts. I knew that each Minio instance was essentially "single user" unless you made bucket names goofy long and relied on that.

Will try with my PeerTube and Mastodon (I wonder if it'll migrate!) Thank you so much good sir!

-

@scooke said in Amazing app to help with backups and saving space!:

you MUST enter new A records for the bucket and domain of your Minio setup

This is commonly referred to as "path style" vs. "domain style" buckets. Some applications can do both, some just one of the two.

When I was backing up my Synology NAS to my own Minio instance I had a wildcard vhost in Nginx and a wildcard certificate for this.
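On the wildcard certificate: a name like `*.minio.example.com` covers exactly one extra label on the left, so it matches `peertube-bucket.minio.example.com` but not `minio.example.com` itself, which has to be listed separately. Also, Let's Encrypt only issues wildcard certificates through the DNS-01 challenge, so a `certbot certonly --standalone` run for `*.minio.example.com` would be expected to fail; that may be why the wildcard attempt above didn't work. A simplified sketch of the matching rule (not a full RFC 6125 implementation):

```python
def name_covered(hostname: str, cert_names: list[str]) -> bool:
    """True if hostname matches any certificate name.

    '*' may only stand in for the whole leftmost label, and never for more than one label.
    """
    host_labels = hostname.lower().rstrip(".").split(".")
    for pattern in cert_names:
        pat_labels = pattern.lower().rstrip(".").split(".")
        if len(pat_labels) != len(host_labels):
            continue  # a wildcard never spans more than one label
        if pat_labels[0] == "*":
            if pat_labels[1:] == host_labels[1:]:
                return True
        elif pat_labels == host_labels:
            return True
    return False


wildcard = ["*.minio.example.com"]
print(name_covered("peertube-bucket.minio.example.com", wildcard))
print(name_covered("minio.example.com", wildcard))
print(name_covered("minio.example.com", wildcard + ["minio.example.com"]))
```

The same rule explains why a wildcard vhost plus wildcard certificate usually still lists the bare domain as a second name.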

For domain style buckets to work with Cloudron, the Cloudron app would need to support domain aliases.

-

@fbartels said in Amazing app to help with backups and saving space!:

For domain style buckets to work with Cloudron, the Cloudron app would need to support domain aliases.

Sounds like perhaps @staff should add the aliases feature to the Minio app

-

@doodlemania2 Thanks

An update: I neglected to renew the KS-1 on which the Minio instance I was using in this post resided, so I lost it all. I then managed to get another KS-1 and used Caprover on it. By installing Minio via Caprover I have avoided hand-rolling all those certs; Caprover does it for me, I guess, in the background, with an appropriately set up domain (*.example.com, not just example.com). Even though I enjoyed hand-installing and managing it, using some software is actually nice.

The other difference, having installed via Caprover, is that the HOSTNAME and ENDPOINT are the same (minio-s3.example.com). Not sure why. But the actual webapp of Minio is at minio.example.com. In my previous hand-rolled setup, the HOSTNAME was minio.example.com and the ENDPOINT was minio-s3.example.com. Like I said, I'm not sure why this is different. Maybe other software like Cloudron and Yunohost do the same as Caprover... all I know is it works.

Oh yes, another difference is that I used a region, eu-west-1, that doesn't necessarily correspond to my actual server location (Germany), but as long as I used that in the env file as well as the config for Minio, everything was fine.

One more difference is that there didn't seem to be a need to include the S3_PROTOCOL with my handrolled Minio, but it is needed with the Caprover install.

Here is the current env.production:

```
S3_ENABLED=true
S3_BUCKET=mastodon-bucket
AWS_ACCESS_KEY_ID=longlongkeyandnumbers
AWS_SECRET_ACCESS_KEY=anotherlongkeywithevenmorenumbers
S3_REGION=eu-west-1
S3_PROTOCOL=https
S3_HOSTNAME=minio-s3.example.com
S3_ENDPOINT=https://minio-s3.example.com
```

Let me add that I am LOVING my little Mastodon instance; following and getting all kinds of super cool people and info, way more than I ever found on Twitter.
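This working config sets both `S3_PROTOCOL` and a full `S3_ENDPOINT` URL, and per the notes above, leaving out `S3_PROTOCOL` broke the Caprover install. A hypothetical pre-flight check for the S3 block of an `env.production` file that encodes only those lessons; it is not a Mastodon tool, and the required-key list is an assumption:

```python
from urllib.parse import urlparse

# Assumed minimum set of keys, based on the working configs in this thread.
REQUIRED = ["S3_ENABLED", "S3_BUCKET", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY",
            "S3_REGION", "S3_PROTOCOL", "S3_HOSTNAME", "S3_ENDPOINT"]


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip()
    return env


def check_s3_env(env: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means nothing obvious is wrong."""
    problems = [f"missing {key}" for key in REQUIRED if not env.get(key)]
    endpoint = env.get("S3_ENDPOINT", "")
    if endpoint and urlparse(endpoint).scheme not in ("http", "https"):
        problems.append("S3_ENDPOINT should be a full URL, e.g. https://minio-s3.example.com")
    for key in ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"):
        if " " in env.get(key, ""):
            problems.append(f"{key} contains a space")
    return problems


if __name__ == "__main__":
    with open("env.production") as f:  # path is an assumption; point it at your own file
        print(check_s3_env(parse_env(f.read())) or "looks OK")
```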

-

I'm trying to follow the info in this thread to get an install of Mastodon to store media files on a Scaleway bucket, but so far I'm failing.

I think it may be something to do with needing to add DNS records for my bucket or something.

But I'm not sure how/where/what to do next.

Currently I have this in my `env.production`:

```
# Trying to store data on Scaleway S3 object
S3_ENABLED=true
S3_BUCKET=safe-just-space
AWS_ACCESS_KEY_ID=<key_id>
AWS_SECRET_ACCESS_KEY=<secret_key>
S3_REGION=fr-par
S3_PROTOCOL=https
S3_HOSTNAME=s3.fr-par.scw.cloud
```

But when I try to add any media, I just get a 503 Service Unavailable.

Do I need to create a record in my DNS for safejust.space that relates to s3.fr-par.scw.cloud or something?

@scooke @fbartels @staff any ideas? Thanks!

Edit: also, it'd be nice if I could use `S3_ALIAS_HOST=<url>` too, so that media URLs would be e.g. `media.safejust.space` instead of an `s3.fr-par.scw.cloud` domain. Can anyone help? Thanks!

-

@jdaviescoates said in Amazing app to help with backups and saving space!:

Do I need to create a record in my DNS for safejust.space that relates to s3.fr-par.scw.cloud or something?

Doesn't seem like I did need to do that!

I just needed to add `S3_ENDPOINT=https://s3.fr-par.scw.cloud`. So now I have this, and it seems to all be working:

```
# Trying to store data on Scaleway S3 object
S3_ENABLED=true
S3_BUCKET=safe-just-space
AWS_ACCESS_KEY_ID=<key_id>
AWS_SECRET_ACCESS_KEY=<secret_key>
S3_REGION=fr-par
S3_PROTOCOL=https
S3_HOSTNAME=s3.fr-par.scw.cloud
S3_ENDPOINT=https://s3.fr-par.scw.cloud
```

@jdaviescoates said in Amazing app to help with backups and saving space!:

be nice if I could S3_ALIAS_HOST=<url> settings too so that media URLs would e.g. be media.safejust.space instead of a s3.fr-par.scw.cloud domain

Can anyone help? Thanks!

Would still like to try that too, if anyone can help?

-

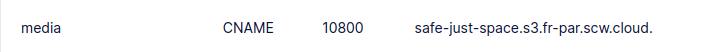

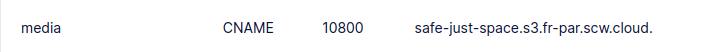

@jdaviescoates can't you just add a CNAME record that points to the S3_ALIAS_HOST ?

-

@robi thanks, I think it's something to do with that yes

(from what I've read on https://thomas-leister.de/en/mastodon-s3-media-storage/ and https://chrishubbs.com/2022/11/19/hosting-a-mastodon-instance-moving-asset-storage-to-s3/ and https://github.com/cybrespace/cybrespace-meta/blob/master/s3.md )

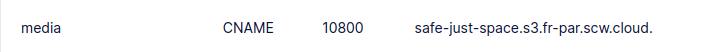

In fact, I've done that. Here's the relevant DNS entry for safejust.space:

But that didn't seem to do the trick, I think because of cert issues which I'm not sure how to resolve.

But perhaps I'm doing something wrong?

-

@jdaviescoates can you retrieve media manually via that CNAME?

-

@jdaviescoates said in Amazing app to help with backups and saving space!:

But perhaps I'm doing something wrong?

Aha!

I am doing something wrong, the bucket name needs to be the same as the URL

https://www.scaleway.com/en/docs/tutorials/s3-customize-url-cname/
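Per the linked Scaleway tutorial, the bucket has to be named after the custom hostname itself, and the CNAME then points at that bucket's virtual-hosted endpoint. A sketch of the resulting names, assuming the `<bucket>.s3.<region>.scw.cloud` endpoint form and using the domain from this thread; the env entries are a presumption about how Mastodon would then be configured, so check both against the tutorial and Mastodon's docs:

```python
def scaleway_custom_domain(custom_host: str, region: str = "fr-par") -> dict[str, str]:
    """Names needed to serve a Scaleway bucket under a custom hostname via CNAME."""
    bucket = custom_host  # the bucket name must equal the custom hostname
    return {
        "bucket": bucket,
        "cname": f"{custom_host}. CNAME {bucket}.s3.{region}.scw.cloud.",
        "S3_BUCKET": bucket,             # assumed Mastodon env entry
        "S3_ALIAS_HOST": custom_host,    # assumed Mastodon env entry (hostname, no scheme)
    }


for name, value in scaleway_custom_domain("media.safejust.space").items():
    print(f"{name}: {value}")
```

This only sorts out the names; serving that custom hostname over HTTPS may still need a certificate for it, for example via a proxy or CDN in front of the bucket.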

-

@robi said in Amazing app to help with backups and saving space!:

@jdaviescoates can you retrieve media manually via that CNAME?

Nope, because I need to change my bucket name...

-

@jdaviescoates yep.. media.s3...