Disk space usage seems incorrect on external disk

-

Update:

For my main disk...

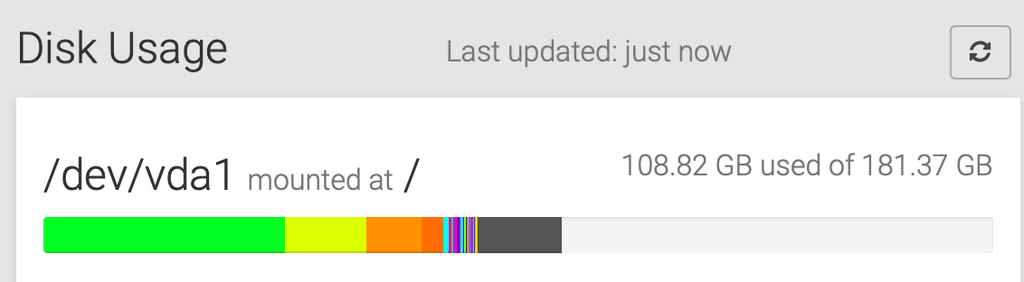

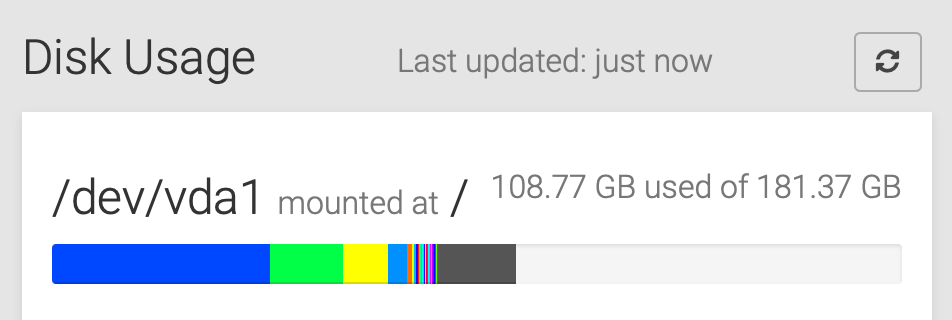

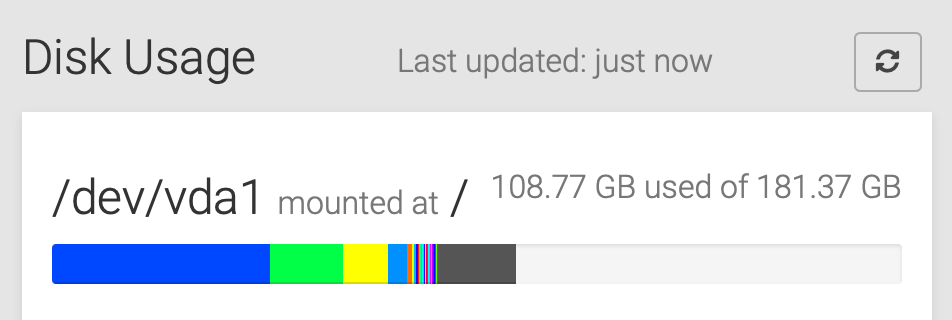

`df -H` shows this for vda1 `/` (root):

```
/dev/vda1  182G  100G  73G  58% /
```

Yet Cloudron shows the following (108 GB used):

Where is it getting that extra nearly 9 GB of usage from? The total disk size is close enough (181.37 GB vs the 182 GB shown by the command). It's just the usage figure that seems quite inaccurate, especially since we're using SI units this time with version 7.3.2.

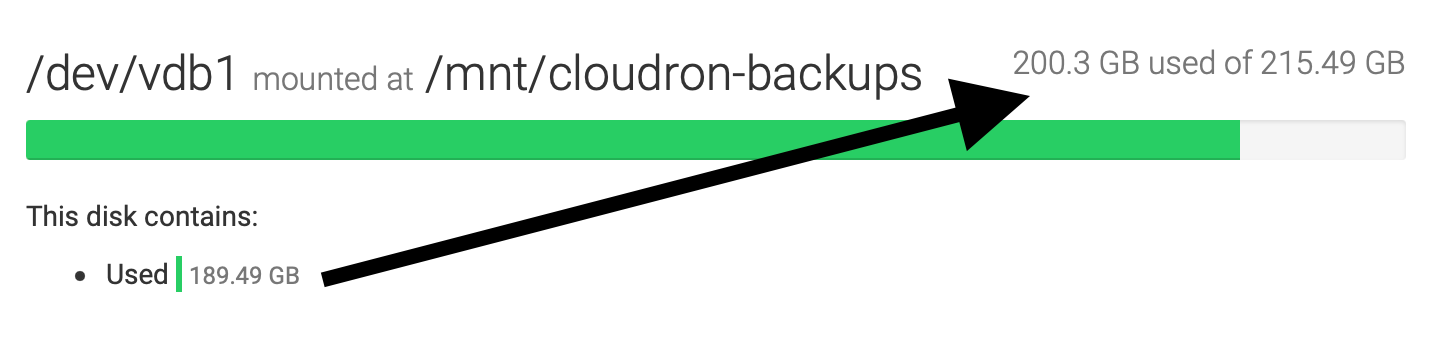

For my backup disk it's a similar situation:

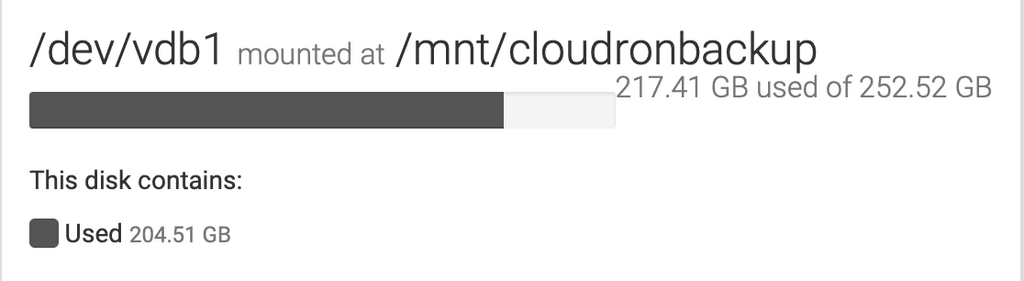

`df -H` shows this for vdb1 (cloudronbackup):

```
/dev/vdb1  253G  205G  36G  86% /mnt/cloudronbackup
```

Yet Cloudron shows this (217 GB used above the graph, 204 GB used below it):

In this case, for the external backup disk, the lower Used number of 204.51 GB is pretty close to the 205 GB from the command, so that seems accurate. But then where is the extra ~12 GB in the above-graph used number coming from?

There still seem to be a lot of discrepancies in the calculations here, unfortunately. Cc @girish

-

@girish Out of curiosity... how often will it check for updated disk sizes by default without manual intervention?

Btw, I think it'd be nice if the GUI showed free space too in an easy manner instead of having us do the math ourselves

haha. For your consideration...

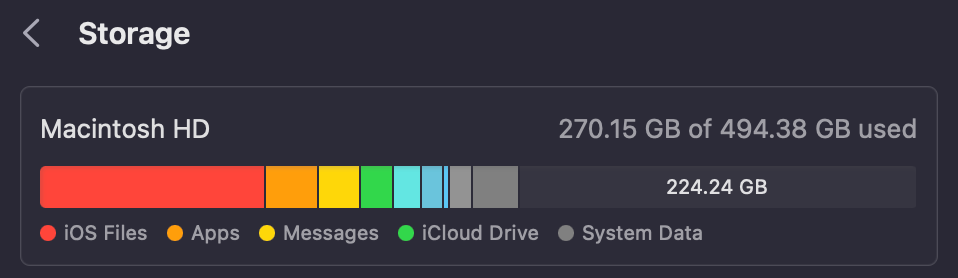

Or maybe it could be closer to how Apple displays it, where the used portion is shown the way you have it, but in the graph itself the free portion represents the available space left?

@d19dotca said in Disk space usage seems incorrect on external disk:

Btw, I think it'd be nice if the GUI showed free space too in an easy manner instead of having us do the math ourselves

+1

Been saying this for ages

-

girish marked this topic as a question on

-

girish has marked this topic as solved on

-

@girish - I noticed you marked this as Solved 4 days ago, but I don't believe this has actually been solved.

There's a pretty large discrepancy in usage for my external disk as seen in earlier screenshots, and I don't see that having been resolved yet.

For example, "used" under the "this disk contains:" section is 204 GB, but the graph part shows 217 GB used. It seems like a defect that multiple different "used" numbers are presented, differing by quite a few GB (roughly 5 to 20 GB).

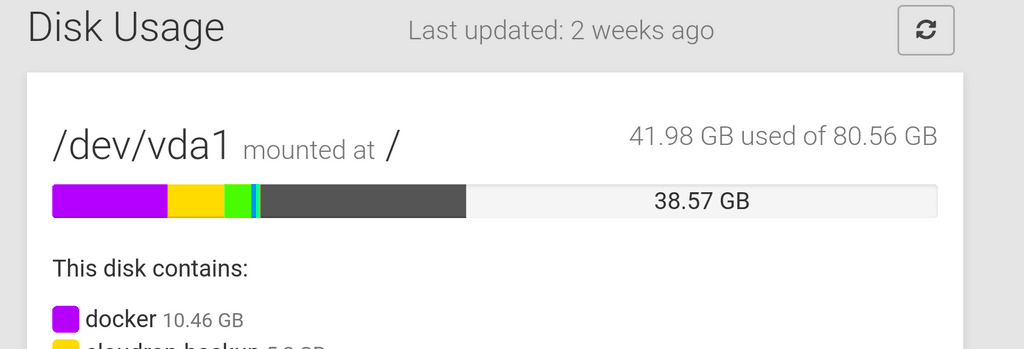

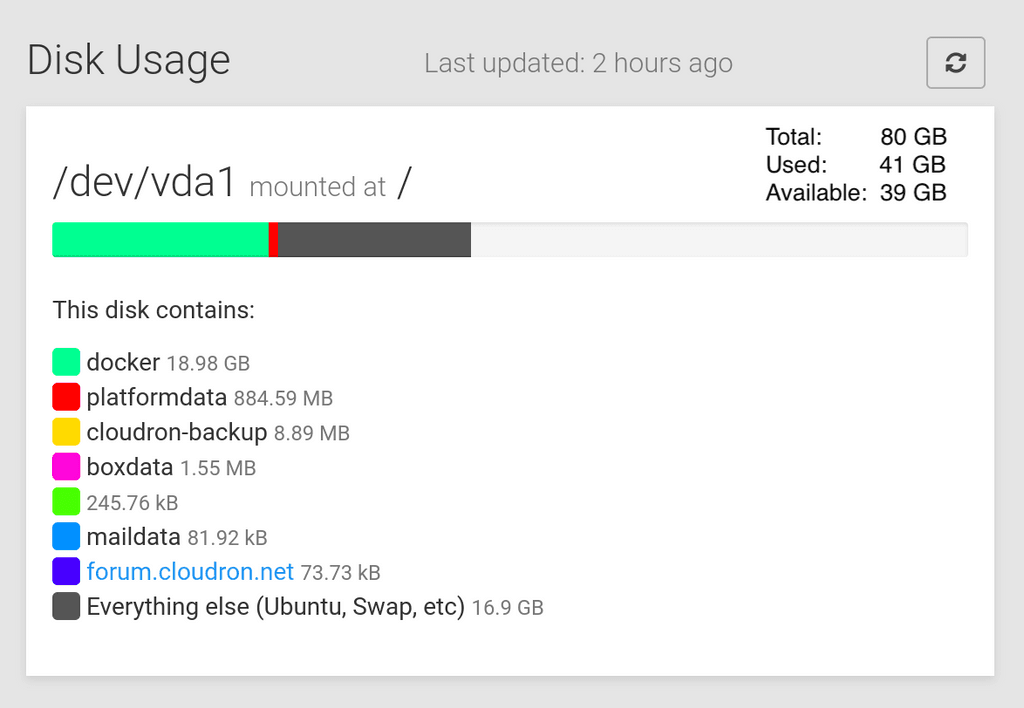

Even in your own screenshot from earlier, the items underneath the graph add up to about 36 GB, yet above the graph it shows 41 GB used. That is the smallest difference I've seen so far, but it's still a large 5 GB difference in used space.

-

@d19dotca said in Disk space usage seems incorrect on external disk:

@girish - I noticed you marked this as Solved 4 days ago, but I don't believe this has actually been solved.

Also:

it'd be nice if the GUI showed free space too in an easy manner instead of having us do the math ourselves

-

girish has marked this topic as unsolved on

-

@d19dotca Can you give us the output of

```
df -B1 --output=source,fstype,size,used,avail,pcent,target
```

This is the command used to get disk information. The du information is obtained from the `du` command. Can you check if running

```
du -DsB1 <path>
```

matches what you see in the graph for the apps and backup directories? Finally, for Docker itself, the size comes from the `docker system df` output.

-

@girish are you going to add the space available?!?!

-

@jdaviescoates yes, your message has been relayed to @nebulon

-

@girish said in Disk space usage seems incorrect on external disk:

@d19dotca Can you give us the output of

```
df -B1 --output=source,fstype,size,used,avail,pcent,target
```

This is the command used to get disk information. The du information is obtained from the `du` command. Can you check if running

```
du -DsB1 <path>
```

matches what you see in the graph for the apps and backup directories? Finally, for Docker itself, the size comes from the `docker system df` output.

Hi Girish. Happy to help here. I ran the commands as requested.

The output for that df command is below (my backup disk is currently full, need to expand that in a minute, haha):

```
ubuntu@my:~$ df -B1 --output=source,fstype,size,used,avail,pcent,target
Filesystem     Type        1B-blocks         Used       Avail Use% Mounted on
udev           devtmpfs   4125618176            0  4125618176   0% /dev
tmpfs          tmpfs       834379776      6078464   828301312   1% /run
/dev/vda1      ext4     181372190720  99085045760 72606834688  58% /
tmpfs          tmpfs      4171890688            0  4171890688   0% /dev/shm
tmpfs          tmpfs         5242880            0     5242880   0% /run/lock
tmpfs          tmpfs      4171890688            0  4171890688   0% /sys/fs/cgroup
/dev/vdb1      ext4     252515119104 252498403328           0 100% /mnt/cloudronbackup
/dev/loop1     squashfs     58327040     58327040           0 100% /snap/core18/2560
/dev/loop2     squashfs     66322432     66322432           0 100% /snap/core20/1623
/dev/loop4     squashfs     50331648     50331648           0 100% /snap/snapd/17336
/dev/loop0     squashfs     58327040     58327040           0 100% /snap/core18/2566
/dev/loop3     squashfs     50331648     50331648           0 100% /snap/snapd/17029
/dev/loop6     squashfs     71303168     71303168           0 100% /snap/lxd/22526
/dev/loop5     squashfs     71172096     71172096           0 100% /snap/lxd/22753
/dev/loop7     squashfs     65011712     65011712           0 100% /snap/core20/1611
tmpfs          tmpfs       834375680            0   834375680   0% /run/user/1000
```

If I'm understanding the output correctly, this seems to show an issue: my /dev/vda1 (root) disk is about 181 GB with about 99 GB used, yet the UI shows 108.77 GB used (screenshot included):

Hopefully that clarifies the issue a bit by showing the discrepancies between what's shown in the UI vs what's shown in the command line terminal on the VM.
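The gap can be reproduced from the byte counts in the `df` output above. Below is a minimal sketch. It assumes the standard statfs meaning of `df`'s columns: Used is Size minus free blocks, and Avail is the free blocks that non-root users can write to. For each ext4 line it prints `df`'s Used, Size minus Avail, and the difference between the two, which is the free space held back from ordinary users. `unavailable_report` is a hypothetical helper name and is not part of Cloudron or coreutils.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Cloudron or coreutils).
# Reads `df -B1 --output=source,fstype,size,used,avail,pcent,target` output on stdin
# and, for each ext4 filesystem, prints in SI gigabytes (10^9 bytes):
#   used        = df's Used column
#   size-avail  = Size minus Avail (also counts free-but-reserved blocks as "used")
#   unavailable = Size - Used - Avail, i.e. free blocks held back from non-root users
#                 (mostly the ext4 root reservation; once Avail reaches 0 it is
#                 simply whatever free space is left)
unavailable_report() {
  awk '$2 == "ext4" {
    size = $3; used = $4; avail = $5
    printf "%s used=%.2fGB size-avail=%.2fGB unavailable=%.2fGB\n",
           $1, used / 1e9, (size - avail) / 1e9, (size - used - avail) / 1e9
  }'
}

# Usage on a live system:
#   df -B1 --output=source,fstype,size,used,avail,pcent,target | unavailable_report
```

For /dev/vda1 in the output above, this reports used=99.09GB, size-avail=108.77GB and unavailable=9.68GB. The size-avail figure matches the 108.77 GB shown in the UI, which suggests the UI was counting Size minus Avail as "used".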

The du command output is below:

```
ubuntu@my:~$ sudo du -DsB1 /home/yellowtent/platformdata/
9498304512      /home/yellowtent/platformdata/
ubuntu@my:~$ sudo du -DsB1 /home/yellowtent/boxdata/
46611869696     /home/yellowtent/boxdata/
```

Based on that output and my UI, I believe the values of each item beneath the graphs are correct. The totals calculated at the top (above the graph), or at least the above-graph used-space number, seem to be incorrect.
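To compare these byte counts with the GB values shown beneath the graph, they can be converted to SI units. This is a minimal sketch. `du_to_gb` is a hypothetical helper name, and it assumes the path column contains no whitespace.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a Cloudron tool): convert the byte counts printed by
# `du -sB1` (first column) into SI gigabytes (1 GB = 10^9 bytes) for comparison
# with the dashboard. Assumes the path column contains no whitespace.
du_to_gb() {
  awk '{ printf "%.2f GB  %s\n", $1 / 1e9, $2 }'
}

# Usage (root is needed to read every directory):
#   sudo du -DsB1 /home/yellowtent/platformdata/ /home/yellowtent/boxdata/ | du_to_gb
```

With the numbers above, this gives 9.50 GB for platformdata and 46.61 GB for boxdata.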

The other docker command output is below:

```
ubuntu@my:~$ sudo docker system df
TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
Images          29        21        15.58GB   6.058GB (38%)
Containers      94        70        0B        0B
Local Volumes   606       138       1.559GB   1.02GB (65%)
Build Cache     0         0         0B        0B
```

-

nebulon referenced this topic on

-

@d19dotca actually I think we need a more direct look at your system to figure out what is happening there. If you like, can you enable remote SSH support for us and send us an email with your dashboard domain at support@cloudron.io?

-

@nebulon No problem at all. I just enabled access for you and submitted the ticket for it through the Support page in Cloudron. Hope that helps.

@d19dotca so the root cause for the discrepancy is the reserved disk space for the root user on ext4. Some info on that at https://wiki.archlinux.org/title/ext4#Reserved_blocks

This is also why `df`'s Used and Avail columns don't add up to its Size column. I have now fixed up the UI to reflect exactly what `df` reports, to avoid confusion.

-

nebulon has marked this topic as solved on

-

@nebulon just to clarify, by “ext4” are we referring to my use of that for the backup type where I did the ext4 option with the “by-uuid” loading? Or is that related to something else? Just wanting to better understand it. Regardless though, thanks for fixing it!
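For reference, in the `df -B1` output earlier in the thread, both /dev/vda1 (root) and /dev/vdb1 (the backup disk) have Type ext4. The reserved blocks are a property of each ext4 filesystem, set when it is created with `mke2fs` (5% by default), so they are not tied to one particular backup setting. The size of the reservation can be read from the superblock with `tune2fs -l`. The following is a minimal sketch that assumes the `Reserved block count:` and `Block size:` fields that `tune2fs -l` prints. `reserved_bytes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a Cloudron tool): read `tune2fs -l` output on stdin and
# print the number of bytes reserved for root, i.e. "Reserved block count" x "Block size".
reserved_bytes() {
  awk -F: '
    /^Reserved block count:/ { gsub(/[ \t]/, "", $2); count = $2 }
    /^Block size:/           { gsub(/[ \t]/, "", $2); bsize = $2 }
    END { printf "%.0f\n", count * bsize }
  '
}

# Usage (tune2fs needs root to read the block device):
#   sudo tune2fs -l /dev/vda1 | reserved_bytes
#   sudo tune2fs -l /dev/vdb1 | reserved_bytes
```

The result should be close to the Size minus Used minus Avail gap from `df`, though not necessarily identical, since ext4 may hold back a small amount of additional space.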