Object Storage or Block Storage for backups of growing 60+ GB?

-

I'm debating whether to go with Object Storage or Block Storage. I constantly go back and forth on this myself and figured I should ask what you guys think.

On one hand, I want to use Object Storage (e.g. Wasabi) because it's somewhat inexpensive and has unlimited storage. I won't have to frequently battle "no more space on disk" issues with EXT4. It also lets me feel comfortable that I can restore to a different Datacentre or hosting provider when needed (though this is infrequent). It also seems more "reliable" during a catastrophe, since the backup sits with a different provider in a different data centre, whereas Block Storage could possibly be corrupted after a Datacentre failure.

On the other hand, I want to use Block Storage even though it's a tad more expensive (we're only talking maybe $2-3/month more than object storage), because it's lightning quick compared to Object Storage. A full rsync backup takes maybe 10-15 minutes, whereas the Object Storage (tgz) backup takes over 1.5 hours. This matters when I do things that involve a backup, for example disabling email before doing a full system restore on a new server: my downtime should be greatly reduced by the low latency of Block Storage compared to Object Storage, although it does still take time to attach the EXT4 volume to the new server, etc.

So this all leads me to wonder... what do you guys use? Do you typically just do a daily backup to Object Storage even when it takes a long time (60+ GB takes ~1.5 hours), or do you use Block Storage instead when you have larger amounts of data to back up?

I'd love some insight or maybe some suggestions, as I'm sure there are some things I haven't considered too.

@d19dotca

My advice is not to use block storage, because if someone gets into your Cloudron, they get into your backup too. Set up S3 with your own lifecycle policy, and don't use incremental backups.

And obviously, don't allow the key in Cloudron to delete anything.
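A minimal sketch of that setup, written as Python dicts. It assumes an AWS-style IAM policy for the backup key and the lifecycle format that boto3's `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=...)` accepts. The bucket name `my-cloudron-backups` and the 30-day expiry are hypothetical, and whether a given S3-compatible provider (or Cloudron itself) works with a key that lacks `s3:DeleteObject` is an assumption you would need to verify.

```python
import json

BUCKET = "my-cloudron-backups"  # hypothetical bucket name
RETENTION_DAYS = 30             # hypothetical retention period

# Policy for the access key Cloudron uses: it may list, read and write,
# but s3:DeleteObject is deliberately absent.
backup_key_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": [f"arn:aws:s3:::{BUCKET}"],
        },
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": [f"arn:aws:s3:::{BUCKET}/*"],
        },
    ],
}

# Retention is enforced by the bucket, not by the (possibly compromised) server:
# every object expires RETENTION_DAYS after it was written.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "expire-old-backups",
            "Filter": {"Prefix": ""},
            "Status": "Enabled",
            "Expiration": {"Days": RETENTION_DAYS},
        }
    ]
}

allowed_actions = {
    action
    for statement in backup_key_policy["Statement"]
    for action in statement["Action"]
}
print(json.dumps(backup_key_policy, indent=2))
print("delete allowed:", "s3:DeleteObject" in allowed_actions)
```

Note that `s3:PutObject` can still overwrite an existing key, so this limits deletion rather than making backups immutable; bucket versioning or object lock would cover that case. Expiring whole objects on a schedule also fits the "no incremental backups" advice, since each full backup stands on its own.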

-

@MooCloud_Matt I see. Info on Block Storage is generally hard to come by.

But for example, https://digitalocean.github.io/navigators-guide/book/03-backup/ch07-storage-on-digitalocean.html (I don't know if this is an authoritative document) says for Block Storage: "Data spans multiple nodes. File systems could experience corruption." It also says "The Volume storage cluster is a distributed system that has multiple copies of your data within the cluster". I'm not sure what all this translates to.

Does this mean it will protect me against bitrot?

-

@girish

DO (DigitalOcean) is really bad at explaining what they use, even if you contact them. But most likely it's just to cover their ass: if you have a distributed system (especially with block storage rather than file storage), you have parity checks on the blocks of data.

Ceph is currently the most used system in big clusters (if someone can check that; maybe something newer is being used), and it does all of that by default.
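To make the "parity check" idea concrete, here is a deliberately simplified sketch: a SHA-256 checksum per block detects silent corruption (bitrot), and a single XOR parity block repairs one damaged block. This is not how DigitalOcean volumes or Ceph are actually implemented (Ceph uses replication or erasure coding together with its own checksums); the function names are made up for illustration.

```python
import hashlib
from functools import reduce
from typing import List, Tuple


def xor_blocks(blocks: List[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    if len({len(b) for b in blocks}) > 1:
        raise ValueError("blocks must all have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def checksum(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


def encode(blocks: List[bytes]) -> Tuple[List[str], bytes]:
    """Keep one checksum per block (to detect rot) and one parity block (to repair it)."""
    return [checksum(b) for b in blocks], xor_blocks(blocks)


def scrub_and_repair(blocks: List[bytes], checksums: List[str], parity: bytes) -> List[bytes]:
    """Find blocks whose checksum no longer matches and rebuild a single bad one."""
    bad = [i for i, (b, c) in enumerate(zip(blocks, checksums)) if checksum(b) != c]
    if not bad:
        return list(blocks)
    if len(bad) > 1:
        raise ValueError("single XOR parity can rebuild at most one bad block")
    i = bad[0]
    survivors = [b for j, b in enumerate(blocks) if j != i]
    rebuilt = xor_blocks(survivors + [parity])  # XOR of the others and parity = missing block
    if checksum(rebuilt) != checksums[i]:
        raise ValueError("parity block is damaged as well")
    repaired = list(blocks)
    repaired[i] = rebuilt
    return repaired


data = [b"backup01", b"backup02", b"backup03"]
sums, parity = encode(data)

rotted = list(data)
rotted[1] = b"backuq02"  # simulate bitrot: one flipped bit ("p" -> "q")
repaired = scrub_and_repair(rotted, sums, parity)
print(repaired == data)  # True
```

The checksum tells you *which* block rotted; the parity lets you rebuild it. Replication achieves the same by keeping full extra copies instead of a parity block.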

-

Having worked in the storage industry, there are many insights one gets from years of experience that you cannot get anywhere else.

The little-known thing is that stupidity and ignorance always come first.

Here's the perfect example:

The entire history of storage has a legacy that started with blocks on spinning disks. Moving forward in time, all protocols, drivers and interfaces were written to support block storage. Along comes solid state, and now all the slow block ways of doing things are simply put on top of flash storage. Convenient, right? Stupid? You bet.

Now we have tsunamis of data, at speeds and volumes greater than disks can handle, and along comes object storage, which can handle all that and scale beyond terabytes to petabytes (PB), exabytes (EB) and beyond!

What does the industry do first? They use block storage protocols and write directly to object stores. Convenient, right?

Stupid? You bet.

And slow too. Not because object storage is slow, but because using block storage concepts on object stores makes them slow.

It's like putting diesel fuel in your gasoline car. It doesn't match and won't work very well, and may even destroy it.

That is exactly what happens when block storage concepts, with small random writes, are used to dump data into a nice object store whose architecture is optimized for large sequential transactions, which it's brilliant at, especially at scale. It slows everything down and trashes the system, causing further problems.
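A rough sketch of the "large sequential transactions" point: instead of sending every small write to the object store as its own request, buffer the writes and hand them on as fixed-size chunks. The `sink` callable stands in for whatever actually uploads a chunk (for example one part of an S3 multipart upload, where every part except the last must be at least 5 MiB). The class name and the 8 MiB default are assumptions for illustration, not any particular connector's design.

```python
from typing import Callable, List


class CoalescingWriter:
    """Collect many small writes and pass them on as large, sequential chunks."""

    def __init__(self, sink: Callable[[bytes], None], chunk_size: int = 8 * 1024 * 1024) -> None:
        if chunk_size <= 0:
            raise ValueError("chunk_size must be positive")
        self._sink = sink              # e.g. something that uploads one multipart part
        self._chunk_size = chunk_size
        self._buffer = bytearray()

    def write(self, data: bytes) -> None:
        self._buffer.extend(data)
        # Hand off full-size chunks as soon as enough data has accumulated.
        while len(self._buffer) >= self._chunk_size:
            self._sink(bytes(self._buffer[: self._chunk_size]))
            del self._buffer[: self._chunk_size]

    def close(self) -> None:
        # Flush whatever is left as a final, possibly shorter, chunk.
        if self._buffer:
            self._sink(bytes(self._buffer))
            self._buffer.clear()


chunks: List[bytes] = []
writer = CoalescingWriter(chunks.append, chunk_size=8)  # tiny chunk size for the demo
for _ in range(10):
    writer.write(b"abc")  # ten small 3-byte writes
writer.close()
print(len(chunks), [len(c) for c in chunks])  # 4 [8, 8, 8, 6]
```

The store now sees four requests instead of ten; with real-world sizes (many tiny files, multi-megabyte parts) the reduction in request count is far larger.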

Only in the last several years have a few smart folks begun to recognize this difference and write object storage connectors (and even start companies) that are actually tuned and understand what an object store does and use it properly.

That is how the likes of Apple, Disney and other huge companies with enormous media assets are able to store all their apps, movies and data globally, so things just work when you click "play".

-

Thanks guys! As much as I hate the 1.5-hour backup time (and it'll grow longer as more data is collected), I think the safer bet is to just run it at 4 AM or so each day; that way the backup lives outside the Datacentre and will be safer.

Do you guys use rsync or tgz for object storage backups? Do you see any performance improvement using one over the other? I normally use rsync with block storage, as that was usually the quickest, but I'm guessing that's not the case with Object Storage, eh?

-

@d19dotca It depends on what kind of data you have. 99% of my backup consists of small files below 1 MB, no audio/video or big files.

With tgz you get compression, especially for data like documents/mails, no problems with naming or deep folder structures, and the benefit that you transfer one big file (the archive) instead of sometimes a six-digit number of small files. It takes way longer for rsync to check all these files than to just pack and transfer them.
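A minimal sketch of the tgz approach using Python's standard `tarfile` module: many small files become one compressed archive, so the upload is a single large object instead of thousands of small ones. The `make_tgz` helper, the directory layout and the file contents are invented for the demo.

```python
import tarfile
import tempfile
from pathlib import Path


def make_tgz(source_dir: Path, archive_path: Path) -> int:
    """Pack every regular file under source_dir into one gzip-compressed tarball.

    Returns the number of files added."""
    added = 0
    with tarfile.open(archive_path, "w:gz") as tar:
        for path in sorted(source_dir.rglob("*")):
            if path.is_file():
                tar.add(path, arcname=str(path.relative_to(source_dir)))
                added += 1
    return added


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "mail"
    # Simulate a mail store: 1,000 small, highly compressible text files in nested folders.
    for i in range(1000):
        folder = src / f"folder{i % 10}" / "cur"
        folder.mkdir(parents=True, exist_ok=True)
        (folder / f"msg{i}.eml").write_text(f"Subject: message {i}\n" + "Hello world\n" * 50)

    archive = Path(tmp) / "backup.tar.gz"
    added = make_tgz(src, archive)
    raw_bytes = sum(p.stat().st_size for p in src.rglob("*") if p.is_file())
    archive_bytes = archive.stat().st_size
    with tarfile.open(archive, "r:gz") as tar:
        member_count = len(tar.getmembers())

print(f"{added} files, {raw_bytes} bytes raw -> 1 archive of {archive_bytes} bytes")
```

Text such as mail compresses well, so the single archive is both fewer requests and fewer bytes; already-compressed media would gain little from the gzip step.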

If you have lots of big files that don't compress well, tgz isn't much help, and transferring them would be waaaaay slower in comparison to rsync, which just needs to compare source and target.
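The "just compare source and target" step can be sketched along the lines of rsync's default quick check, which treats a file as changed when its size or modification time differs. This is a simplified illustration, not how Cloudron's rsync backup format is implemented; `FileInfo` and `plan_sync` are hypothetical names, the example manifests are made up, and the delete list assumes a mirror (like rsync with `--delete`).

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class FileInfo:
    size: int   # bytes
    mtime: int  # modification time, seconds since the epoch


def plan_sync(
    local: Dict[str, FileInfo], remote: Dict[str, FileInfo]
) -> Tuple[List[str], List[str]]:
    """Return (paths to upload, paths to delete) for mirroring local -> remote."""
    # Upload anything new, or anything whose size or mtime differs from the remote copy.
    to_upload = sorted(path for path, info in local.items() if remote.get(path) != info)
    # Mirror deletions: remote files that no longer exist locally.
    to_delete = sorted(path for path in remote if path not in local)
    return to_upload, to_delete


local = {
    "mail/a.eml": FileInfo(1_200, 1_700_000_000),
    "mail/b.eml": FileInfo(900, 1_700_000_500),
    "media/video.mp4": FileInfo(700_000_000, 1_700_001_000),
}
remote = {
    "mail/a.eml": FileInfo(1_200, 1_700_000_000),             # unchanged: skipped
    "media/video.mp4": FileInfo(700_000_000, 1_699_990_000),  # mtime changed: re-upload
    "mail/old.eml": FileInfo(800, 1_690_000_000),             # gone locally: delete
}
upload, delete = plan_sync(local, remote)
print(upload)  # ['mail/b.eml', 'media/video.mp4']
print(delete)  # ['mail/old.eml']
```

Only changed files cross the wire, which is why runs after the first can be much faster; the flip side is the delete list, where every removed file can turn into its own remote operation.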

I use Netcup and Hetzner root servers and a 5 TB Hetzner Storage Box for tgz backups, which additionally takes daily snapshots (limited to 3). For my Hetzner servers, I have a storage VPS with MinIO at AlphaVPS.

While having your backup storage at the same provider has speed benefits, it is bad practice and destroys what you actually want to achieve with a backup in the first place.

-

@subven Okay, I ran some tests. With tgz, the upload took about 1.5 hours. With rsync, the first one took about an hour (I think it was 55 minutes), but the second and third ones so far have been around 22 minutes, so much quicker. I guess the downside is that this takes up more storage than tgz would have, but that's okay since Wasabi charges the full 5.99 USD for 1 TB regardless of how much is stored under 1 TB. I'll continue to use Wasabi via Cloudron's rsync type for now.

-

@d19dotca

Please check Wasabi's pricing more carefully: the 5.99 is charged for stored and deleted files.

With rsync, Cloudron will create a lot of delete requests, and you will end up paying a lot.

-

@d19dotca said in Object Storage or Block Storage for backups of growing 60+ GB?:

more storage

It depends on whether you keep backups for 2 days or for 1 year.

rsync is an incremental backup; you will never store the same file twice.

But its disadvantage is that if you get even one corrupted snapshot, you will lose everything after that.
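A toy model of that trade-off, assuming a content-addressed design: each unique file body is stored once under its SHA-256 hash, and each snapshot is a manifest pointing at those hashes. Cloudron's actual rsync format may work differently; `DedupStore` is a hypothetical class. It shows both sides: unchanged files are not stored twice, and one damaged shared object breaks every snapshot that references it.

```python
import hashlib
from typing import Dict, List


class DedupStore:
    """Toy incremental backup store: unique file contents are kept once, by hash."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}          # sha256 hex -> content
        self.snapshots: List[Dict[str, str]] = []  # one manifest (path -> hash) per backup

    def snapshot(self, files: Dict[str, bytes]) -> int:
        manifest = {}
        for path, data in files.items():
            digest = hashlib.sha256(data).hexdigest()
            self.blobs.setdefault(digest, data)  # identical content is stored only once
            manifest[path] = digest
        self.snapshots.append(manifest)
        return len(self.snapshots) - 1

    def restore(self, index: int) -> Dict[str, bytes]:
        restored = {}
        for path, digest in self.snapshots[index].items():
            data = self.blobs[digest]
            if hashlib.sha256(data).hexdigest() != digest:
                raise ValueError(f"snapshot {index}: {path} is corrupted")
            restored[path] = data
        return restored


store = DedupStore()
first = store.snapshot({"a.txt": b"hello", "b.txt": b"world"})
second = store.snapshot({"a.txt": b"hello", "b.txt": b"world, edited"})
print(len(store.blobs))  # 3: "hello" is shared by both snapshots

# Corrupt the one blob both snapshots depend on.
shared = store.snapshots[first]["a.txt"]
store.blobs[shared] = b"hellp"

broken = []
for index in (first, second):
    try:
        store.restore(index)
    except ValueError:
        broken.append(index)
print(broken)  # [0, 1]
```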

@d19dotca said in Object Storage or Block Storage for backups of growing 60+ GB?:

more storage

Depends if you store backup for 2 days or for 1 year.

Rsync is an incremental backup, you will never store the same file 2 times.

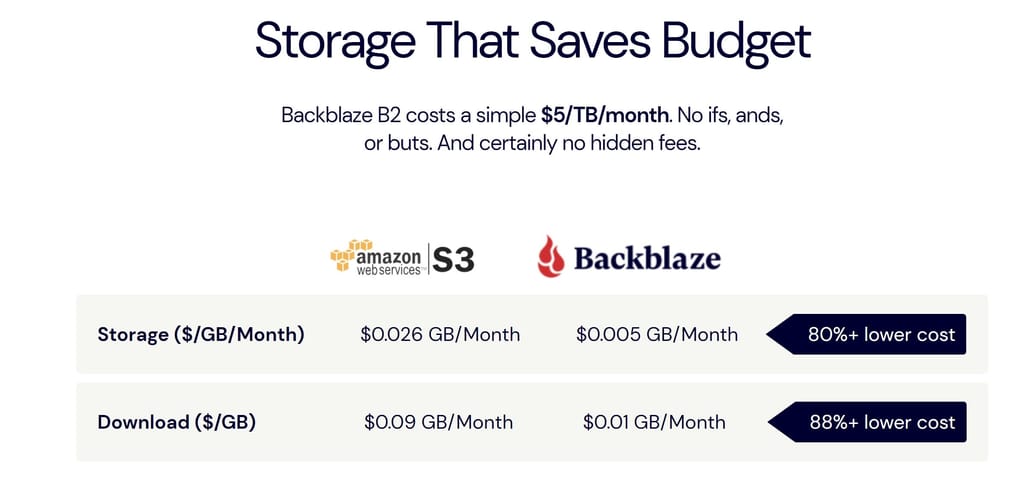

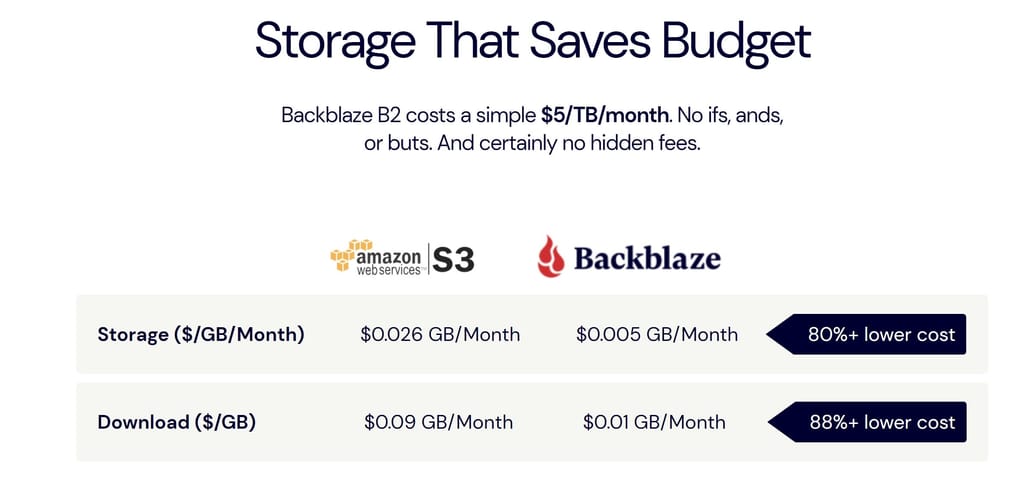

-

@MooCloud_Matt Would you suggest Backblaze over Wasabi in that case? Backblaze also charges by API call type, so I assumed I'd be better off with Wasabi, but point taken, as Wasabi's 3-month minimum storage requirement struck me as very strange. I still went with Wasabi, as it seemed unlikely I'd hit the 1 TB limit even with deleted files, plus it has a Canadian Datacentre, which will have lower latency than Backblaze's California Datacentre since my VPS is hosted in Toronto, Canada.

-

@d19dotca On Vultr, object storage starts at $5/month for 250GB and 1TB of transfer. Block storage is $25/month for 250GB.
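A quick comparison for a 60 GB backup, using only the prices mentioned in this thread (Vultr object storage $5/month for 250 GB, Vultr block storage $25/month for 250 GB, Wasabi $5.99/month with a 1 TB minimum). Prices change, and overage and transfer charges are not modelled; `FlatTier` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlatTier:
    name: str
    monthly_usd: float
    included_gb: float

    def monthly_cost(self, backup_gb: float) -> float:
        # Flat price up to the included amount; anything above is out of scope here.
        if backup_gb > self.included_gb:
            raise ValueError(f"{self.name}: overage pricing not modelled")
        return self.monthly_usd

    def usd_per_included_gb(self) -> float:
        return self.monthly_usd / self.included_gb


tiers = [
    FlatTier("Vultr object storage", 5.00, 250),
    FlatTier("Vultr block storage", 25.00, 250),
    FlatTier("Wasabi (1 TB minimum)", 5.99, 1000),
]

for tier in tiers:
    print(f"{tier.name}: ${tier.monthly_cost(60):.2f}/month for 60 GB, "
          f"${tier.usd_per_included_gb():.4f} per included GB")
```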

-

@LoudLemur Seems to be half that price on Contabo, even less on Wasabi.

-

@robi said in Object Storage or Block Storage for backups of growing 60+ GB?:

@LoudLemur Seems to be half that price on Contabo.

I was surprised, as Vultr's Object Storage is NVMe but the block storage can be HDD, so I thought the block storage would be cheaper.

What is the best tool to browse through block storage files to find the one you want?

-

@LoudLemur There are many tools; it depends on what OS you use: WinSCP, or, say, Cyberduck on macOS.

-

@LoudLemur Yeah, I used Vultr for a little bit when testing, but the Datacentre for their object storage was far away from my VPS's Vultr Datacentre, and it's not that cheap, at least compared to the amount of storage you get with Wasabi or Backblaze, for example. Not too bad though, for sure, and worth considering for some.

-

@d19dotca

You must understand what is more valuable for you: speed, reliability, or price.

For reliability, Backblaze's services are among the best.

For speed, Wasabi is good enough if it's near your data center, but there is no duplication of data, and it's something that we have to use more than I'd like to admit.

-

Interesting. I decided to test IDrive e2, a provider with a Datacentre closer to my VPS (it seems to be a recent S3 competitor from mid-2022 that promises high speeds).

Backblaze is good, although I find it quite slow (mostly because my VPS is in a Datacentre very far away from Backblaze's California (us-west) location). Speed isn't critical since it's just backups, but it definitely still helps.

Backblaze's pricing for their API calls scares me a little bit; it makes me think it'll be much pricier than I'm anticipating. I may just need to test it out for a while to verify.

I see what you mean about Wasabi's weird 90-day storage policy, which means even deleted files are still counted for 90 days. My current estimate is quickly adding up, so despite being initially happy with Wasabi's performance, I may need to abandon that provider.
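A rough model of why the 90-day policy adds up. It assumes a deleted object keeps being billed until 90 days after it was written, the $5.99 per TB-month and 1 TB minimum quoted earlier in the thread, and a hypothetical schedule of one 60 GB tgz backup per day kept for 7 days. Wasabi's real invoice is computed differently in detail (and prices change), so treat this as an order-of-magnitude estimate only; all names are made up.

```python
from dataclasses import dataclass
from typing import List, Optional

MIN_STORAGE_DAYS = 90  # minimum storage duration described in the thread
PRICE_PER_TB = 5.99    # USD per TB-month, as quoted in the thread
MIN_BILLED_TB = 1.0    # 1 TB minimum, as quoted in the thread


@dataclass(frozen=True)
class StoredObject:
    size_gb: float
    created_day: int
    deleted_day: Optional[int] = None  # None = still stored


def stored_gb_on(day: int, objects: List[StoredObject]) -> float:
    """Data actually present in the bucket on `day`."""
    return sum(
        o.size_gb for o in objects
        if o.created_day <= day and (o.deleted_day is None or o.deleted_day > day)
    )


def billable_gb_on(day: int, objects: List[StoredObject]) -> float:
    """Stored data plus deleted data still inside its 90-day minimum."""
    return sum(
        o.size_gb for o in objects
        if o.created_day <= day
        and (o.deleted_day is None or o.deleted_day > day
             or day < o.created_day + MIN_STORAGE_DAYS)
    )


def monthly_estimate_usd(billable_gb: float) -> float:
    return max(billable_gb / 1000, MIN_BILLED_TB) * PRICE_PER_TB


# Hypothetical schedule: a full 60 GB backup every day, each deleted after 7 days.
backups = [StoredObject(60.0, day, deleted_day=day + 7) for day in range(120)]

day = 100
stored = stored_gb_on(day, backups)
billable = billable_gb_on(day, backups)
print(f"day {day}: {stored:.0f} GB stored, {billable:.0f} GB billable, "
      f"~${monthly_estimate_usd(billable):.2f}/month")
```

Under these assumptions, 420 GB of retained backups is billed like 5.4 TB, because every deleted daily archive keeps counting for 90 days; with retention of 90 days or more, billable and stored storage converge.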

Still experimenting. I'm currently in the middle of a large 60+ GB backup to IDrive e2 (using rsync instead of tarball for now), and I have to say I'm super impressed with the speeds. Their pricing is also quite low. We'll see if they end up being the one I use.

-

@MooCloud_Matt said in Object Storage or Block Storage for backups of growing 60+ GB?:

You must understand what is more valuable for you: speed, reliability, or price.

And price? Which one?

-

@LoudLemur Probably Contabo; they use Ceph.

This means that you have replication by default and really good support for S3.

-

FWIW, I'm quite impressed with the pricing and performance of the newer IDrive e2 storage (with S3 API).

Curious, though, about a related but different topic: when uploading to S3, do you find yourself using rsync for larger backups, or do you opt to just use tgz? So far I tend to find rsync a bit more performant (likely because of the concurrency settings), but the downside is that it takes forever to delete files from the bucket when there are so many of them; even with S3 API calls, deletion is incredibly slow when that many files exist. It makes me think it may be better to just stick to tarballs instead.
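If the slow part is one delete request per object, batching may help: the S3 `DeleteObjects` API accepts up to 1,000 keys per request, and boto3 exposes it as `client.delete_objects(Bucket=..., Delete={"Objects": [...], "Quiet": True})`. A minimal sketch, assuming the S3-compatible provider (IDrive e2, Wasabi, etc.) implements that call; whether Cloudron's own cleanup already batches deletes is not something this thread establishes. A fake client stands in for `boto3.client("s3", endpoint_url=...)` so the example runs offline, and the bucket name is hypothetical.

```python
from typing import Any, Dict, Iterable, Iterator, List

MAX_KEYS_PER_REQUEST = 1000  # S3 DeleteObjects limit


def chunked(keys: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield lists of at most `size` keys."""
    batch: List[str] = []
    for key in keys:
        batch.append(key)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def delete_keys(client: Any, bucket: str, keys: Iterable[str]) -> int:
    """Delete keys in batches of up to 1,000; return the number of requests sent."""
    requests = 0
    for batch in chunked(keys, MAX_KEYS_PER_REQUEST):
        response = client.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": k} for k in batch], "Quiet": True},
        )
        requests += 1
        # In quiet mode only failures are reported back.
        errors = response.get("Errors", [])
        if errors:
            raise RuntimeError(f"{len(errors)} keys failed, first: {errors[0]}")
    return requests


class FakeS3Client:
    """Offline stand-in that records calls instead of talking to a bucket."""

    def __init__(self) -> None:
        self.deleted: List[str] = []

    def delete_objects(self, Bucket: str, Delete: Dict[str, Any]) -> Dict[str, Any]:
        self.deleted.extend(obj["Key"] for obj in Delete["Objects"])
        return {}


client = FakeS3Client()
keys = [f"snapshot/mail/msg{i}.eml" for i in range(2500)]
sent = delete_keys(client, "my-cloudron-backups", keys)  # hypothetical bucket
print(f"{len(client.deleted)} objects deleted with {sent} requests instead of {len(keys)}")
```

Even batched, an rsync-style backup still has far more objects to remove than a handful of tarballs, so this narrows the gap rather than closing it.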