Has anyone set up Nextcloud with a Hetzner Storagebox cifs mount as primary storage?

-

@andreasdueren

It won't show it as a proper volume either.

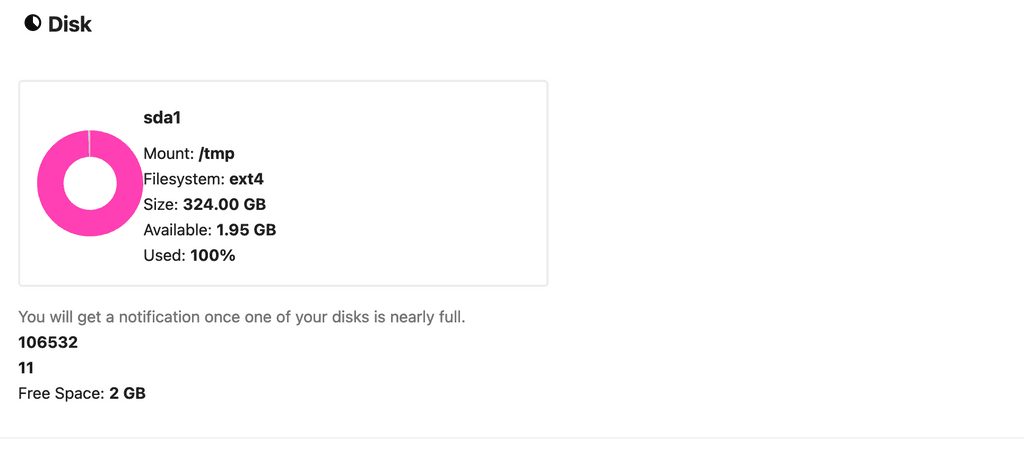

Plus for some reason my internal volume is now almost completely full with mystery data.

@andreasdueren I've mounted it via volumes after getting rid of fstab

-

It looks like Hetzner are now pretty much doing this exact same thing for their "Storage Share" solution:

@girish I think this needs to be a core feature of Cloudron, given the cost-efficiency, scalability, and additional backup snapshots this offers for very few € compared to things like GDrive & Dropbox per-user pricing.

-

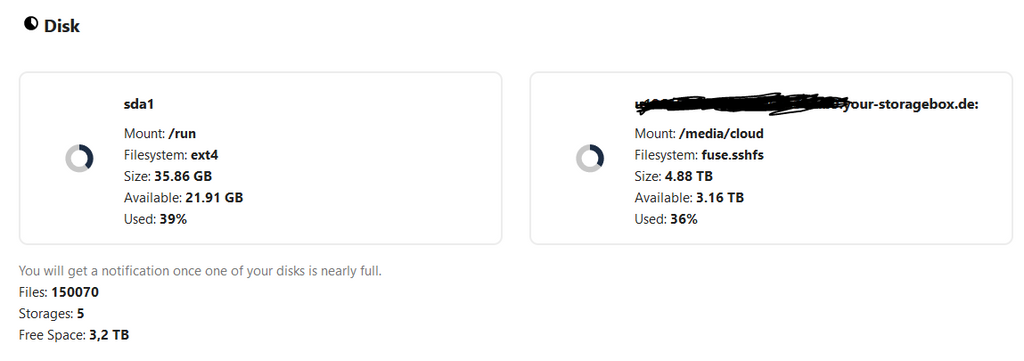

Ok so quick update here: I was following the mount procedure from the first link and it's looking good! However, I started with a fresh installation instead of moving my files around, but seeing as it worked out, migrating an existing installation should be no problem either.

So what I did:

- deployed a new Cloudron with Nextcloud installed on a fresh CPX21 on Hetzner Cloud (kept it at 40GB though, might scale down if performance allows it)

- mounted a 5TB Hetzner Storagebox via sshfs into `/mnt/cloud` and added it as Cloudron volume (`/media/cloud`)

- mounted that volume into the Nextcloud app (uncheck read-only)

- change the datadirectory in `config/config.php` from `'datadirectory' => '/app/data',` to `'datadirectory' => '/media/cloud',`

- `cp -r /app/data/admin` to the mounted volume and `touch .ocdata` in `/media/cloud`
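For anyone who wants to script the last two steps, here is a minimal sketch. It assumes bash and GNU `sed`; the function names `set_datadirectory` and `seed_datadirectory` are made up for illustration and are not part of Cloudron or Nextcloud. It does not cover the sshfs mount or the Cloudron volume setup.

```shell
#!/usr/bin/env bash

# Rewrite the 'datadirectory' entry in a Nextcloud config.php in place.
# Arguments: <path to config.php> <new data directory>
# (hypothetical helper; assumes GNU sed and a path without '|' in it)
set_datadirectory() {
    local config_file="$1" new_dir="$2"
    sed -i "s|'datadirectory' => '[^']*'|'datadirectory' => '${new_dir}'|" "$config_file"
}

# Copy one user's folder into the new data directory and create the
# .ocdata marker file there.
# Arguments: <old data directory> <new data directory> <user folder name>
seed_datadirectory() {
    local old_dir="$1" new_dir="$2" user="$3"
    cp -r "${old_dir}/${user}" "${new_dir}/"
    touch "${new_dir}/.ocdata"
}

# Usage with the paths from the steps above (run inside the app):
#   set_datadirectory config/config.php /media/cloud
#   seed_datadirectory /app/data /media/cloud admin
```

Taking a copy of `config.php` before editing it is probably a good idea, since a broken config will stop Nextcloud from starting.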

That's pretty much it I reckon, if you log in now you have this extra storage available:

I have both, uploaded a few GB manually and the rest via sync client, no errors so far. A restart won't change the datadirectory, so this should continue working after reboots. Encryption is also enabled and working as intended, still waiting for something that doesn't work properly.

@msbt

Hi,

I've got a similar configuration with a CPX21 instance connected to a BX21 Storagebox.

While transfer speed for large files is fine, transfer speed for a large number of small files is horrible:

```
root@nextcloud:~# for f in {1..2000}; do mktemp ; done
/tmp/tmp.qabMyUDO5N
/tmp/tmp.QPuk8CcXSB
/tmp/tmp.NhErmerWFl
/tmp/tmp.CtkY3jvTbL
[...]
root@nextcloud:~# time cp /tmp/tmp.* /backups/test/
real 4m20.998s
user 0m0.061s
sys 0m0.565s
root@nextcloud:~# time rm -fr /backups/test/*
real 1m36.682s
user 0m0.050s
sys 0m0.196s
```

Are you experiencing the same behaviour?
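To make this easier to reproduce on other setups, the same test could be wrapped in a small function, roughly like this. It is only a sketch assuming bash (for the `time` keyword); `bench_small_files` is a made-up name, and it uses its own scratch directory and a `file_` prefix so it does not touch other files in `/tmp` or on the mount.

```shell
#!/usr/bin/env bash

# Create <count> empty files in a local scratch directory, then time
# copying them to <target_dir> and deleting them there again.
# Arguments: <target_dir> [count, default 2000]
bench_small_files() {
    local target="$1" count="${2:-2000}"
    local src i
    src="$(mktemp -d)"

    for i in $(seq 1 "$count"); do
        : > "${src}/file_${i}"
    done

    echo "copying ${count} small files to ${target}"
    time cp "${src}"/file_* "${target}/"

    echo "deleting them again"
    time rm -f "${target}"/file_*

    rm -rf "$src"
}

# Usage, with the mount path from the post above:
#   bench_small_files /backups/test 2000
```

Running it once against the mount and once against a local directory gives a direct comparison.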

-

@jcgonnard many factors influence the availability of IO on the system.

Shared systems get busy.

See what is available with dd:

```
dd if=/dev/zero of=/tmp/test1.img bs=1G count=1 oflag=dsync ; rm /tmp/test1.img
```

-

SSHFS:

```
root@nextcloud:~# dd if=/dev/zero of=/backups/test.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 50.1201 s, 21.4 MB/s
root@nextcloud:~# dd if=/dev/zero of=/backups/test.img bs=4M count=250 oflag=dsync
250+0 records in
250+0 records out
1048576000 bytes (1.0 GB, 1000 MiB) copied, 78.6863 s, 13.3 MB/s
root@nextcloud:~# dd if=/dev/zero of=/backups/test.img bs=1 count=1000 oflag=dsync
1000+0 records in
1000+0 records out
1000 bytes (1.0 kB) copied, 70.862 s, 0.0 kB/s
```

LOCAL:

```
root@nextcloud:~# dd if=/dev/zero of=/tmp/test.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.863714 s, 1.2 GB/s
root@nextcloud:~# dd if=/dev/zero of=/tmp/test.img bs=4M count=250 oflag=dsync
250+0 records in
250+0 records out
1048576000 bytes (1.0 GB, 1000 MiB) copied, 1.31499 s, 797 MB/s
root@nextcloud:~# dd if=/dev/zero of=/tmp/test.img bs=1 count=1000 oflag=dsync
1000+0 records in
1000+0 records out
1000 bytes (1.0 kB) copied, 0.500667 s, 2.0 kB/s
```

As I said, transfer speed for medium/big files is fine enough; the problem is small files.

I was just asking whether some of you have experienced the same issue with the same configuration (Hetzner VM + Storagebox), and whether there is a way to improve performance or whether I need to find something else that matches my needs.

-

@jcgonnard that is pretty good performance for SSHFS.

You'll have to switch to a different mount option to increase small file performance, unless you can get all your small files to stream over via tar/zip/etc.
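To put numbers on it: 2000 files copied in 4m20.998s is about 130 ms per file, and 1000 synced 1-byte writes in 70.862 s is about 71 ms per write, so the cost is per operation rather than per byte. A rough sketch of the tar approach, assuming bash and a standard `tar`; the function names are made up, and this only helps for bulk transfers such as backups, not for files Nextcloud has to serve individually:

```shell
#!/usr/bin/env bash

# Pack a directory of small files into a single archive on the mount, so
# the mount sees one large sequential write instead of thousands of small ones.
# Arguments: <source directory> <archive path on the mount>
# The archive path must be outside the source directory.
pack_to_mount() {
    local src_dir="$1" archive="$2"
    tar -cf "$archive" -C "$src_dir" .
}

# Unpack such an archive into a (local) directory again.
# Arguments: <archive path> <destination directory>
unpack_from_mount() {
    local archive="$1" dest_dir="$2"
    mkdir -p "$dest_dir"
    tar -xf "$archive" -C "$dest_dir"
}

# Usage, with the mount path from the earlier post and a hypothetical
# local source directory:
#   pack_to_mount /path/to/small-files /backups/test/small-files.tar
#   unpack_from_mount /backups/test/small-files.tar /tmp/restore
```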

-

@marcusquinn

Hetzner don't use remote storage for Storage Share; they use a remote clustered DB + local storage.

So the two installs (Cloudron + Storagebox and Hetzner Storage Share) can't really be compared: performance will be a lot better on Storage Share, by an order of magnitude, especially on small files.

-

@jcgonnard I can't really tell, it's always running in the background and I haven't encountered any issues so far

@msbt

The issue could start with a lot of concurrent users and a lot of small changes to files, because SMB doesn't work at the filesystem level but at the level of files/blocks of data.

If Hetzner offered NFS, that could be a solution, or even better, support for iSCSI.
