After Ubuntu 22/24 upgrade, syslog gets spammed and grows way too much, clogging up the disk space

-

Currently investigating the following issue.

I am upgrading multiple servers to Ubuntu 22/24, and it seems that `/var/log/syslog` is getting spammed:

2025-02-27T09:05:33.316275+00:00 my syslog.js[829]: 2025-02-27T09:05:33.316Z syslog:server Unable to parse: <30>1 2025-02-27T09:05:33Z my fc10b9f8-33b4-4f22-b442-74120cfaad1d 1023 fc10b9f8-33b4-4f22-b442-74120cfaad1d - #033[2m2025-02-27T09:05:33.315944Z#033[0m #033[32m INFO#033[0m #033[1mHTTP request#033[0m#033[1m{#033[0m#033[3mmethod#033[0m#033[2m=#033[0mPOST #033[3mhost#033[0m#033[2m=#033[0m"REDACTED.SUB.DOMAIN.TLD" #033[3mroute#033[0m#033[2m=#033[0m/indexes/REDACTED/facet-search #033[3mquery_parameters#033[0m#033[2m=#033[0m #033[3muser_agent#033[0m#033[2m=#033[0mMeilisearch PHP (v1.7.0) #033[3mstatus_code#033[0m#033[2m=#033[0m200#033[1m}#033[0m#033[2m:#033[0m #033[2mmeilisearch#033[0m#033[2m:#033[0m close #033[3mtime.busy#033[0m#033[2m=#033[0m88.0µs #033[3mtime.idle#033[0m#033[2m=#033[0m1.01ms

This log is from a custom Meilisearch app, but logs from other, "normal" apps show the same parse error.

The syslog files grew to sizes large enough that my monitoring system kicked in:

-rw-r----- 1 syslog adm 20G Feb 27 08:55 syslog
-rw-r----- 1 syslog adm 14G Feb 23 00:00 syslog.1

I also noticed these entries:

2025-02-27T09:13:45.449795+00:00 my collectd[1044086]: ERROR: ld.so: object '/usr/lib/python3.10/config-3.10-x86_64-linux-gnu/libpython3.10.so' from LD_PRELOAD cannot be preloaded (cannot open shared object file): ignored.
2025-02-27T09:13:45.450546+00:00 my collectd[1044087]: ERROR: ld.so: object '/usr/lib/python3.10/config-3.10-x86_64-linux-gnu/libpython3.10.so' from LD_PRELOAD cannot be preloaded (cannot open shared object file): ignored.
2025-02-27T09:13:46.373778+00:00 my syslog.js[829]: 2025-02-27T09:13:46.373Z syslog:server Unable to parse: <27>1 2025-02-27T09:13:46Z my sftp 1023 sftp - 120.27.30.185 UNKNOWN - [27/Feb/2025:09:13:46 +0000] "USER root" 331 -
2025-02-27T09:13:47.829026+00:00 my syslog.js[829]: 2025-02-27T09:13:47.828Z syslog:server Unable to parse: <27>1 2025-02-27T09:13:47Z my sftp 1023 sftp - 120.27.30.185 UNKNOWN - [27/Feb/2025:09:13:47 +0000] "USER root" 331 -

Is this maybe connected to the post-upgrade step of Ubuntu 24?

Fix up collectd. /etc/default/collectd might have an LD_PRELOAD line from previous releases. If you make any changes, restart collectd using systemctl restart collectd.
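That release-note step can be scripted roughly as below. This is a minimal sketch, not an official tool: the helper name `fix_collectd_preload` is hypothetical, and it assumes the leftover entry is a plain `LD_PRELOAD=...` assignment (optionally prefixed with `export`). It comments the line out, keeps a `.bak` copy, and returns success only when it changed something, so the restart runs only when needed.

```shell
# Hypothetical helper (not part of Ubuntu or Cloudron): comment out a leftover
# LD_PRELOAD assignment in collectd's defaults file.
# Returns 0 if a line was changed, 1 if there was nothing to do.
fix_collectd_preload() {
  local conf="${1:-/etc/default/collectd}"
  # Already-commented lines start with "#" and therefore do not match.
  if ! grep -Eq '^[[:space:]]*(export[[:space:]]+)?LD_PRELOAD=' "$conf"; then
    echo "no active LD_PRELOAD line in $conf"
    return 1
  fi
  # GNU sed: edit in place, keeping the original as "$conf.bak".
  sed -E -i.bak 's/^([[:space:]]*(export[[:space:]]+)?LD_PRELOAD=)/# \1/' "$conf"
  echo "commented out LD_PRELOAD in $conf (backup: $conf.bak)"
}

# Example (as root):
#   fix_collectd_preload && systemctl restart collectd
```

Running it a second time is harmless: the commented line no longer matches, so it reports nothing to do and skips the restart.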

Hmm, yes, the system in question did not have a cleaned-up `/etc/default/collectd`. But the "Unable to parse" errors persist:

2025-02-27T09:18:35.333933+00:00 my syslog.js[829]: 2025-02-27T09:18:35.333Z syslog:server Unable to parse: <30>1 2025-02-27T09:18:35Z my d1235d4c-4418-4cfe-8b2b-bc6abdd9afa2 1023 d1235d4c-4418-4cfe-8b2b-bc6abdd9afa2 - Task apps.uptime.tasks.dispatch_checks[427f04a8-9cb7-4914-b26d-db5cc31b860c] succeeded in 0.01911751099396497s: None -

Ahh, `syslog.js` is part of Cloudron, located at `/home/yellowtent/box/syslog.js`.

So now I can do some JavaScript investigation.

-

I just checked a rather freshly set up Ubuntu 24 with Cloudron, and it has the same issue.

So it seems unrelated to the do-release-upgrade to 22/24:

2025-02-27T09:34:13.375494+00:00 my-DOMAIN-TLD syslog.js[785]: 2025-02-27T09:34:13.375Z syslog:server Unable to parse: <30>1 2025-02-27T09:34:13Z my-DOMAIN-TLD 4ec5603e-7db7-49c2-a442-bf99721ba379 1043 4ec5603e-7db7-49c2-a442-bf99721ba379 - 162.158.86.97 - - [27/Feb/2025:09:34:13 +0000] "GET /api/config HTTP/1.1" 200 503 "-" "Bitwarden_Mobile/2025.1.2 (release/standard) (Android 14; SDK 34; Model SM-F741B)" -

-

Quickfix for users who need it NOW:

    # get patch file, apply and remove and restart cloudron-syslog.service
    cd /home/yellowtent/box
    wget https://git.cloudron.io/platform/box/-/commit/063b1024616706971d4a1f9c50b5032727640120.diff
    git apply 063b1024616706971d4a1f9c50b5032727640120.diff
    rm -v 063b1024616706971d4a1f9c50b5032727640120.diff
    systemctl restart cloudron-syslog.service

-

Extra analysis.

Did this issue really just come up after the upgrade to 22/24?

System Upgrade timers:

2025-02-19 14:33:55 Linux version 6.8.0-53-generic (buildd@lcy02-amd64-046) (x86_64-linux-gnu-gcc-13 (Ubuntu 13.3.0-6ubuntu2~24.04) 13.3.0, GNU ld (GNU Binutils for Ubuntu) 2.42) #55-Ubuntu SMP PREEMPT_DYNAMIC Fri Jan 17 15:37:52 UTC 2025
2025-02-19 12:33:55 Linux version 5.15.0-131-generic (buildd@lcy02-amd64-057) (gcc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0, GNU ld (GNU Binutils for Ubuntu) 2.38) #141-Ubuntu SMP Fri Jan 10 21:18:28 UTC 2025
2025-02-19 00:33:55 Linux version 5.4.0-205-generic (buildd@lcy02-amd64-055) (gcc version 9.4.0 (Ubuntu 9.4.0-1ubuntu1~20.04.2)) #225-Ubuntu SMP Fri Jan 10 22:23:35 UTC 2025
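The same question can also be checked from the log side. The sketch below (hypothetical helper `unparsed_per_day`) counts "Unable to parse" lines per calendar day across the current and rotated syslog files; if the upgrade is the trigger, the first days with non-zero counts should line up with the boot dates above. It assumes each line starts with rsyslog's `YYYY-MM-DDT...` timestamp, and it can only look back as far as the rotated files still on disk.

```shell
# Hypothetical helper: tally "Unable to parse" lines per day.
# zcat -f passes plain files through unchanged, so syslog, syslog.1 and
# compressed syslog.N.gz files can all be given in one call.
unparsed_per_day() {
  zcat -f "$@" \
    | awk '/Unable to parse:/ { n[substr($1, 1, 10)]++ }
           END { for (d in n) print d, n[d] }' \
    | sort
}

# Example (as root):
#   unparsed_per_day /var/log/syslog*
```

Each output line is `YYYY-MM-DD count`, sorted by date, so a sudden jump stands out without any graphing.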

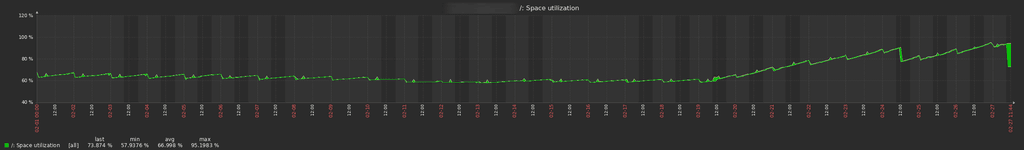

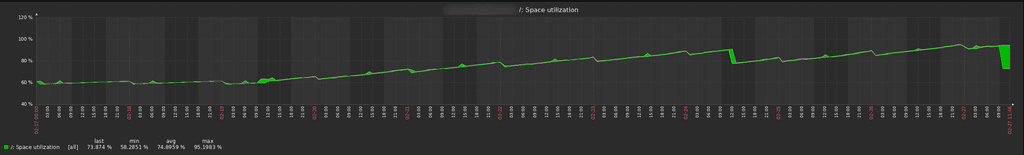

View of the whole month of February 2025.

Zoom to 2025-02-17:

Yep, this looks very conclusive to me. This issue only appears on Ubuntu 22/24 with the unfixed `syslog.js`.

-

We're currently seeing this issue on v8.3.1 (Ubuntu 24.04.1 LTS)

@girish thank you for fixing this! When will this fix be rolled out?

@BrutalBirdie thanks for the quick fix! We applied it and it worked perfectly.
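To confirm on a given server that the quickfix took effect, one option is to count new "Unable to parse" lines written after `cloudron-syslog.service` was restarted. This is a minimal sketch: the helper name `count_unparsed_since` is hypothetical, and it assumes rsyslog writes RFC 3339 timestamps in UTC (`+00:00`), as in the excerpts in this thread, so that plain string comparison orders them correctly.

```shell
# Hypothetical helper: count "Unable to parse" lines logged at or after a
# given timestamp (format YYYY-MM-DDTHH:MM:SS, UTC).
count_unparsed_since() {
  local since="$1" logfile="${2:-/var/log/syslog}"
  awk -v since="$since" '
    $1 >= since && /Unable to parse:/ { n++ }
    END { print n + 0 }
  ' "$logfile"
}

# Example (as root), right after restarting cloudron-syslog.service:
#   since=$(date -u +%Y-%m-%dT%H:%M:%S)
#   sleep 300
#   count_unparsed_since "$since"
```

If the patch is in effect, the count should stay at 0; a growing number suggests the service is still running the old `syslog.js`.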

-


The patch described in https://forum.cloudron.io/topic/13361/after-ubuntu-22-24-upgrade-syslog-getting-spammed-and-grows-way-to-much-clogging-up-the-diskspace/11# is no longer available (error 500).

-

@necrevistonnezr That is because https://git.cloudron.io/ is currently throwing error 500.

This has been resolved; it is all good again now.

-

@BrutalBirdie this is great, it solved the issue for me!

-

FYI, I hit the same problem a few times in the past weeks. I understand this will be solved in Cloudron 9, right? If so, I'm a bit confused that we need to apply such a patch manually when it could be shipped in an update. Anyway, truncating the syslog and applying the patch got rid of 60 GB of spam in my log files.
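A sketch of the truncation step, assuming rsyslog writes `/var/log/syslog` (the Ubuntu default); the helper name `truncate_log` is hypothetical. Truncating in place rather than deleting matters because rsyslog keeps the file open: a deleted file that is still open keeps occupying disk space until the process closes it.

```shell
# Hypothetical helper: empty a log file in place and report the bytes freed.
# truncate keeps the same inode, so the process holding the file open keeps
# writing to the same (now empty) file instead of pinning a deleted one.
truncate_log() {
  local logfile="${1:-/var/log/syslog}"
  local before
  before=$(stat -c %s "$logfile") || return 1
  truncate -s 0 "$logfile"
  echo "freed ${before} bytes from ${logfile}"
}

# Example (as root): check sizes first, then empty the live file.
#   du -h /var/log/syslog*
#   truncate_log /var/log/syslog
```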

I'm interested in how others are dealing with this.

-

@SansGuidon the issue arises only with the logs of some specific apps it seems. Did you notice which app specifically is growing in log size? Or is it all the app logs? But you are right, this problem is solved only in Cloudron 9.

@girish I don't think I've hit this issue myself, but why not just push out an 8.3.3 with this fix?

-

@SansGuidon the issue arises only with the logs of some specific apps it seems. Did you notice which app specifically is growing in log size? Or is it all the app logs? But you are right, this problem is solved only in Cloudron 9.


Based on early investigation, some apps such as Syncthing, LAMP, or even Wallos generate more logs than the rest. But this is only from looking at the data of the past hours, after applying the diff and tuning logrotate. I'll keep you posted if I find more interesting evidence. If someone has a script to quickly generate relevant stats, I'm interested.
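As a starting point for such stats, here is a rough sketch (hypothetical helper `count_syslog_by_app`) that counts the lines relayed by `syslog.js` per RFC 5424 APP-NAME, which in the excerpts above is either an app UUID or a service name such as `sftp` or `mongodb`. The field positions are an assumption based on those excerpts: after the `<PRI>1` token come the timestamp, the hostname, then the app name. Mapping UUIDs back to app names is not covered here.

```shell
# Hypothetical helper: count syslog.js-relayed lines per RFC 5424 APP-NAME.
# Assumed layout (as seen in this thread):
#   ... syslog.js[PID]: [...] <PRI>1 TIMESTAMP HOSTNAME APP-NAME PROCID MSGID ...
# Pass "-" as the file name to read from stdin.
count_syslog_by_app() {
  local logfile="${1:-/var/log/syslog}"
  awk '
    index($0, "syslog.js[") > 0 {
      # find the first "<PRI>1" token; APP-NAME is three fields after it
      for (i = 1; i <= NF - 3; i++) {
        if ($i ~ /^<[0-9]+>1$/) { count[$(i + 3)]++; break }
      }
    }
    END { for (app in count) printf "%d %s\n", count[app], app }
  ' "$logfile" | sort -rn
}

# Examples (as root):
#   count_syslog_by_app /var/log/syslog | head
#   grep 'Unable to parse' /var/log/syslog | count_syslog_by_app -
```

The second form restricts the tally to failed parses, which separates "this app is chatty" from "this app's lines trigger the parse error".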

-

@girish I don't think I've hit this issue myself, but why not just push out an 8.3.3 with this fix?

@jdaviescoates Yes, that could help: in its current state, the syslog implementation generates errors in my logs, which could explain why the logs keep growing. So I had to apply the diff to avoid this repeated pattern:

2025-08-31T20:42:40.149390+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:40Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - IndexError: list index out of range
2025-08-31T20:42:40.240033+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:40Z ubuntu-cloudron-16gb-nbg1-3 cd4a6fed-6fd7-4616-ba0d-d0c38972774b 1123 cd4a6fed-6fd7-4616-ba0d-d0c38972774b - 172.18.0.1 - - [31/Aug/2025:20:42:40 +0000] "GET / HTTP/1.1" 200 45257 "-" "Mozilla (CloudronHealth)"
2025-08-31T20:42:41.676806+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:41Z ubuntu-cloudron-16gb-nbg1-3 mongodb 1123 mongodb - {"t":{"$date":"2025-08-31T20:42:41.675+00:00"},"s":"D1", "c":"REPL", "id":21223, "ctx":"NoopWriter","msg":"Set last known op time","attr":{"lastKnownOpTime":{"ts":{"$timestamp":{"t":1756672961,"i":1}},"t":42}}}
2025-08-31T20:42:43.067695+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:43Z ubuntu-cloudron-16gb-nbg1-3 mongodb 1123 mongodb - {"t":{"$date":"2025-08-31T20:42:43.066+00:00"},"s":"D1", "c":"NETWORK", "id":4668132, "ctx":"ReplicaSetMonitor-TaskExecutor","msg":"ReplicaSetMonitor ping success","attr":{"host":"mongodb:27017","replicaSet":"rs0","durationMicros":606}}
2025-08-31T20:42:44.061046+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - url = link.split(" : ")[0].split(" ")[1].strip("[]")
2025-08-31T20:42:44.061077+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^^^
2025-08-31T20:42:44.061100+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - IndexError: list index out of range