Minio backup fails for no reason

-

Hello,

I have configured backups with Minio and it worked perfectly for weeks.

But it no longer works. I think the problem started after an update, but I am not sure. As far as I can tell from the logs (on both the Cloudron and Minio sides), Cloudron tries to copy a multipart archive to the Minio bucket and the copy fails. The Minio log says Cloudron tries to rename and/or copy a file that does not exist (yet, I guess).

Excerpt from the Cloudron log:

Unable to backup { BoxError: Error copying crsav_/snapshot/app_f0770611-2970-4e86-9047-0260e0297b79.tar.gz.enc (35961955060 bytes): XMLParserError XMLParserError: Unexpected close tag\nLine: 5\nColumn: 7\nChar: >' }

Excerpt from the Minio log:

Error: rename /data/.minio.sys/tmp/057fd8e5-234e-4e99-abe9-4ddb96796b34/e4b6158e-b4f5-4afc-8bb2-08e63228e618.2ff401ac-895f-43f9-aa2a-e40e9b862aef.9 /data/.minio.sys/multipart/caa122a8292342e6d4f7592bf3aed821c15f88ae471d458b70474d192b99be02/e4b6158e-b4f5-4afc-8bb2-08e63228e618/00009.0d55bc9f9c910f5b64049580ba387d34.1073741824: no such file or directory
5: github.com/minio/minio@/cmd/fs-v1-helpers.go:397:cmd.fsSimpleRenameFile()
4: github.com/minio/minio@/cmd/fs-v1-multipart.go:331:cmd.(*FSObjects).PutObjectPart()
3: github.com/minio/minio@/cmd/fs-v1-multipart.go:259:cmd.(*FSObjects).CopyObjectPart()
2: github.com/minio/minio@/cmd/object-handlers.go:1892:cmd.objectAPIHandlers.CopyObjectPartHandler()
1: net/http/server.go:2007:http.HandlerFunc.ServeHTTP()

Thank you,

Thibaud

-

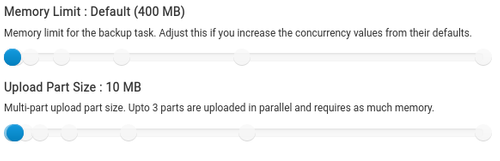

@thibaud Do you have a rough estimate of the size of that file? Also, in the backup configuration, do you use minio or s3 compatible as the provider? Can you also post a screenshot of Backups -> configure -> advanced settings (just the bottom part of the advanced settings)?

-

@girish I have no idea. As far as I can tell, the backup fails when it gets to Nextcloud, so the file is presumably large.

The backup provider is set to Minio.

Are those settings new? I am pretty sure I have never seen them. I guess they have something to do with my problem...

-

You can check this from the graphs maybe as to how much the app consumes? It's under System view.

Yes. As expected, it's huge, as it is a Nextcloud. The entire app consumes 36 GB.

Yes, new in 5.4 and 5.5

And I think my problem appeared after the 5.4 update. So basically I guess I need to increase the memory limit? Up to... how much?

-

Thank you all.

@girish I increased the parameters step by step and tried a backup after each change (up to the maximum, i.e. 16 GB). I still get the same error message. Any help?

-

@thibaud Are you able to mail the entire backup log to support@cloudron.io? Let's see if it's something obvious from the logs.

-

@thibaud Thanks. It seems multi-part copies are failing altogether. As in, even the first part is failing. I thought it was failing much later.

Retrying (1) multipart copy of crsav_/snapshot/app_f0770611-2970-4e86-9047-0260e0297b79.tar.gz.enc. Error: XMLParserError: Unexpected close tag\nLine: 5\nColumn: 7\nChar: > 405

Let me quickly test this and see if it's easy to reproduce.
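For scale, the archive size in the Cloudron error (35961955060 bytes) and the trailing number in the Minio part file name (1073741824) give a rough idea of how many parts such a copy involves. The sketch below assumes that trailing number is the part size in bytes; it is only arithmetic on the logged values, not a description of how Cloudron or Minio actually split the object.

```shell
#!/usr/bin/env bash
# Values taken from the log excerpts in this thread.
size_bytes=35961955060   # archive size reported by Cloudron
part_bytes=1073741824    # ASSUMPTION: part size, read off the Minio part file name (1 GiB)

# Ceiling division: number of parts needed to cover the whole archive.
parts=$(( (size_bytes + part_bytes - 1) / part_bytes ))

# Size of the final, partial part.
last_part=$(( size_bytes - (parts - 1) * part_bytes ))

echo "parts: $parts, last part: $last_part bytes"
```

Under that assumption the copy needs 34 parts, so the part numbered 00009 in the Minio error would be fairly early in the sequence.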

-

@thibaud Mmm, I cannot reproduce this. I tried a backup and it succeeded over ~40 parts (https://paste.cloudron.io/ihulamulow.apache). So, to take a step back, it failed in "rename". Is there something special about the filesystem where minio is located? Is it ext4? What is the underlying filesystem of Cloudron itself?

-

@thibaud said in Minio backup fails for no reason:

Minio is self-hosted in Docker on a Synology NAS whose underlying filesystem is proprietary (btrfs)

I have exactly the same setup: two Cloudrons backing up to Minio instances on two NASes. The only difference is that my backups use rsync rather than tar. I have not had a single issue, and with Watchtower running in Docker, Minio is automatically updated to the latest version within 24 hours.

-

@imc67 said in Minio backup fails for no reason:

my backups are rsync and not tar

Thanks for the tip. I switched to rsync and I was full of hope. Unfortunately it also fails.

@girish I sent you the full Minio log by mail.

-

@thibaud I replied to you on support@, but the issue is that some file name is too long. The current rsync+encryption backup has a file name length limitation: https://docs.cloudron.io/backups/#encryption . There is a feature request at https://forum.cloudron.io/topic/3057/consider-improvements-to-the-backup-experience-to-support-long-filenames-directory-names .

Run the following command in /home/yellowtent/appsdata to find the large filenames:

find . -type f -printf "%f\n" | awk '{ print length(), $0 | "sort -rn" }' | less
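The one-liner above lists every file name, longest first. If only the names over a given length matter, a small variation can filter them. This is a minimal sketch: `list_long_names` and its `max` argument are hypothetical names, the threshold is a placeholder (check the encryption docs linked above for the actual limit), and it assumes GNU `find` and file names without embedded newlines. Note that `awk` may count characters or bytes depending on the implementation and locale.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<length> <name>" for every file under DIR
# whose base name is longer than MAX, longest first.
list_long_names() {
    local dir="$1" max="$2"
    find "$dir" -type f -printf '%f\n' \
        | awk -v max="$max" 'length($0) > max { print length($0), $0 }' \
        | sort -rn
}

# Example call (the 100 is a placeholder, not the documented limit):
# list_long_names /home/yellowtent/appsdata 100
```

Renaming or shortening the reported files before the next backup run is then a manual step.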