Backup Stuck until manually stopped.

-

Might be totally unrelated, but I've had backups fail and stall, and narrowed it down to externally linked images. Once I stopped linking remotely and uploaded the image to the Cloudron app instead, the backup behaved. Unfortunately I have no documentation about it, just my experience with it.

-

@girish said in Backup Stuck until manually stopped.:

@robi Do you know what it says in the last few lines of the backup logs when it is stuck? Also, where are you backing up to?

I already mentioned this, it's a local, .tgz backup.

In `/backups/` I have a 7MB logs.txt file you're welcome to.

-

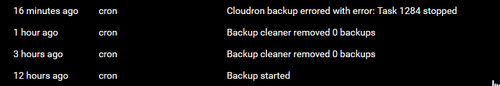

I seem to be having the same issue. It happens with both rsync and tarball backups (to Wasabi, which is probably irrelevant). Last few lines from the logs:

    Nov 03 07:44:54 box:shell backup-snapshot/app_7eb7aeb8-387b-46ca-92c6-fb16ab0000e2 code: null, signal: SIGKILL
    Nov 03 07:44:54 box:backups healthshop.net Unable to backup { BoxError: Backuptask crashed
        at /home/yellowtent/box/src/backups.js:864:29
        at f (/home/yellowtent/box/node_modules/once/once.js:25:25)
        at ChildProcess.<anonymous> (/home/yellowtent/box/src/shell.js:69:9)
        at ChildProcess.emit (events.js:198:13)
        at ChildProcess.EventEmitter.emit (domain.js:448:20)
        at Process.ChildProcess._handle.onexit (internal/child_process.js:248:12)
      name: 'BoxError', reason: 'Internal Error', details: {}, message: 'Backuptask crashed' }
    Nov 03 07:44:54 box:taskworker Task took 6293.696 seconds
    Nov 03 07:44:54 box:tasks setCompleted - 1960: {"result":null,"error":{"stack":"BoxError: Backuptask crashed\n at /home/yellowtent/box/src/backups.js:864:29\n at f (/home/yellowtent/box/node_modules/once/once.js:25:25)\n at ChildProcess.<anonymous> (/home/yellowtent/box/src/shell.js:69:9)\n at ChildProcess.emit (events.js:198:13)\n at ChildProcess.EventEmitter.emit (domain.js:448:20)\n at Process.ChildProcess._handle.onexit (internal/child_process.js:248:12)","name":"BoxError","reason":"Internal Error","details":{},"message":"Backuptask crashed"}}
    Nov 03 07:44:54 box:tasks 1960: {"percent":100,"result":null,"error":{"stack":"BoxError: Backuptask crashed\n at /home/yellowtent/box/src/backups.js:864:29\n at f (/home/yellowtent/box/node_modules/once/once.js:25:25)\n at ChildProcess.<anonymous> (/home/yellowtent/box/src/shell.js:69:9)\n at ChildProcess.emit (events.js:198:13)\n at ChildProcess.EventEmitter.emit (domain.js:448:20)\n at Process.ChildProcess._handle.onexit (internal/child_process.js:248:12)","name":"BoxError","reason":"Internal Error","details":{},"message":"Backuptask crashed"}}

-

A bit more of the earlier logs could be helpful here. Given that the process got a SIGKILL, this could indicate that the system was simply running out of memory and the kernel killed that process to free some up. Usually `dmesg` or `journalctl --system` would give more indication here.

-

@nebulon emailed to support@cloudron.io - thanks

-

There was nothing useful in the main log.

There is a different set of backup task logs in the Cloudron Backups menu at the bottom of the page.

This was requested for the relevant backup task and sent in.

Pro Tip: The URL of the backups log can be modified to specify the task to search for.

-

@robi I was just looking into the logs. It has:

    2020-10-31T21:00:18.640Z box:database Connection 548 error: Connection lost: The server closed the connection. PROTOCOL_CONNECTION_LOST
    2020-10-31T21:00:18.854Z box:database Connection 543 error: Connection lost: The server closed the connection. PROTOCOL_CONNECTION_LOST
    2020-10-31T21:00:19.146Z box:database Connection 544 error: Connection lost: The server closed the connection. PROTOCOL_CONNECTION_LOST
    2020-10-31T21:00:19.419Z box:database Connection 545 error: Connection lost: The server closed the connection. PROTOCOL_CONNECTION_LOST
    2020-10-31T21:00:19.797Z box:shell backup-snapshot/app_0800bb6e-f3a5-48fa-994b-1bf0d0d6a67f (stdout): 2020-10-31T21:00:19.797Z box:database Connection 549 error: Connection lost: The server closed the connection. PROTOCOL_CONNECTION_LOST
    2020-10-31T21:00:19.800Z box:shell backup-snapshot/app_0800bb6e-f3a5-48fa-994b-1bf0d0d6a67f (stdout): 2020-10-31T21:00:19.800Z box:database Connection 550 error: Connection lost: The server closed the connection. PROTOCOL_CONNECTION_LOST

This suggests that MySQL went down. Do you see out-of-memory errors in the `dmesg` output?

-

@marcusquinn said in Backup Stuck until manually stopped.:

@nebulon emailed to support@cloudron.io - thanks

In your case, the backup got killed because of a lack of memory. I have bumped up the memory for the backup process under Backups -> Configure and started a new backup now. Let's see.
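For anyone else hitting a SIGKILL like this: a quick way to check whether the kernel's OOM killer was involved is to scan the kernel log for its usual messages. Below is a minimal Python sketch, not anything Cloudron ships. The function names are made up for illustration, and the patterns are the message shapes the Linux OOM killer commonly prints, which can vary between kernel versions.

```python
import re

# Message shapes commonly printed by the Linux OOM killer.
# Exact wording differs between kernel versions, so treat these as a best effort.
OOM_PATTERNS = [
    re.compile(r"invoked oom-killer"),
    re.compile(r"Out of memory: Kill(?:ed)? process \d+"),
    re.compile(r"Memory cgroup out of memory"),
]

# Extracts the PID and process name from a "Kill(ed) process" line.
KILLED_RE = re.compile(r"Kill(?:ed)? process (?P<pid>\d+) \((?P<name>[^)]+)\)")


def find_oom_events(log_lines):
    """Return the lines that look like OOM-killer activity."""
    return [line for line in log_lines
            if any(p.search(line) for p in OOM_PATTERNS)]


def killed_processes(log_lines):
    """Return (pid, name) for every process the OOM killer reported killing."""
    result = []
    for line in log_lines:
        match = KILLED_RE.search(line)
        if match:
            result.append((int(match.group("pid")), match.group("name")))
    return result
```

To use it, feed it the lines of `dmesg` or `journalctl --system` output (for example read from a saved file). An empty result does not rule out memory pressure; it only means none of these patterns showed up in the log you passed in.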

-

@girish Thanks

-

Also, can you provide the output of `free -m`? That should give a good idea of how much memory is available on the server.

No memory errors in dmesg, 6.5GB free mem, and the server graphs show mysql using 15% of the 2.5GB allocated, so it's not that.

My guess, we look at the DB health during that time?

What can we do about recovering from the connection error and continuing the backup or erroring out sooner so we avoid the deadlock and let the next backup run?

-

@girish said in Backup Stuck until manually stopped.:

In your case, the backup got Killed because of lack of memory. I have bumped up the memory for the backup process under Backups -> Configure and started a new backup now. Let's see.

To post a final update on this: the issue was that one app had 2 million files and another had a million files. It looks like node runs out of heap memory when dealing with such a large number of files (specifically in the tar module we use). Don't know if this is easily fixable.
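If you want to check whether one of your own apps is in that territory, counting files per directory is enough. Here is a rough Python sketch that walks a tree iteratively and keeps only a running count, so the counter itself stays small even on trees with millions of files. The function names and the idea of pointing it at the directory holding your app data are my assumptions; use whatever path applies on your server.

```python
import os


def count_files(root):
    """Count regular files under root without building a full file list."""
    total = 0
    stack = [root]  # directories still to visit
    while stack:
        current = stack.pop()
        try:
            with os.scandir(current) as entries:
                for entry in entries:
                    if entry.is_dir(follow_symlinks=False):
                        stack.append(entry.path)
                    elif entry.is_file(follow_symlinks=False):
                        total += 1
        except (PermissionError, FileNotFoundError):
            # Skip directories that vanish or cannot be read mid-walk.
            continue
    return total


def largest_trees(parent, limit=5):
    """Return (file_count, path) pairs for the subdirectories with the most files."""
    counts = []
    with os.scandir(parent) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                counts.append((count_files(entry.path), entry.path))
    return sorted(counts, reverse=True)[:limit]
```

Calling `largest_trees()` on the parent directory of your app data lists the heaviest trees first. Symlinks are not followed, so linked content outside the tree is not counted.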

-

@robi said in Backup Stuck until manually stopped.:

What can we do about recovering from the connection error and continuing the backup or erroring out sooner so we avoid the deadlock and let the next backup run?

I think there is a bug. It seems when the database connection goes down, the backup process gets stuck. Ideally, it should just fail at that point. I am looking into reproducing this.
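Until that is fixed properly, the general "fail instead of hang" idea can be sketched with a hard deadline around the child process. This is only an illustration in Python, not how the box code handles it. The function name and the timeout value are hypothetical, and a whole-task deadline is a blunt substitute for actually detecting the lost database connection.

```python
import subprocess


def run_with_deadline(cmd, timeout_seconds):
    """Run cmd and return (ok, reason); a hang becomes a failure, not a stall."""
    try:
        completed = subprocess.run(cmd, timeout=timeout_seconds)
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child and waits for it before re-raising.
        return False, f"timed out after {timeout_seconds}s"
    if completed.returncode != 0:
        # On POSIX a negative return code means the child died from a signal,
        # e.g. -9 for SIGKILL.
        return False, f"exited with code {completed.returncode}"
    return True, "ok"
```

With something like this around the backup step, a stuck run would be reported as failed once the deadline passes, so the next scheduled backup is not blocked behind it. The deadline has to be generous enough for a legitimately slow backup (the one above ran for 6293 seconds before it was killed).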

-

james has marked this topic as solved.