[Backups] Ability to add multiple storage provider/location

-

To add to this: for home installations, I want to be able to push encrypted backups to a friend's Cloudron (after he/she has given me permission, of course).

(after he/she has given me permission, of course). -

To add to this, for home installations, I want to be able to push encrypted backups to a friend's cloudron

(after he/she has given me permission, of course).

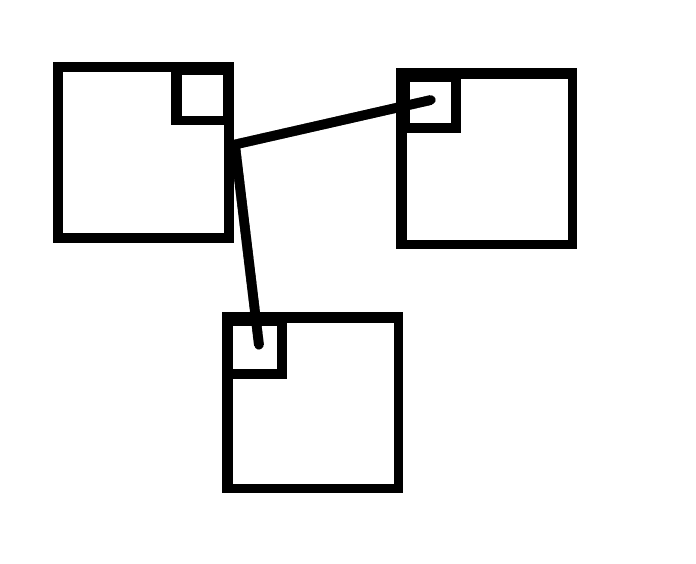

(after he/she has given me permission, of course).@girish Yes, I would be good to say have a Minio bucket to which to push backups to on your friends' Cloudrons, and be able to have a backup on each Cloudron. (See diagram below)

*The small square is the Minio bucket.

-

It might be more CPU/bandwidth friendly if one rsync process sends the backup to location A, as it does right now, and a second process then initiates the copy from B to C (and on to D, E and F too, if people want to go crazy with backup locations).

From another angle, we might want one backup method for one place (could be unencrypted rsync) and an entirely different one for another (perhaps an encrypted tarball).

Would very much like this though, especially as cyber attacks are only growing as more and more economic value is online, and on other people's servers.
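
As a rough sketch of the chained idea above (not how Cloudron works today), each location could name the location it is copied from, and a small helper could work out the order of the copies. The `Destination` fields, the method labels and `copy_order` are all hypothetical names, used purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Destination:
    name: str
    method: str                   # e.g. "rsync" or "tarball" (illustrative labels)
    encrypted: bool
    source: Optional[str] = None  # None: pushed by the Cloudron itself


def copy_order(destinations: List[Destination]) -> List[str]:
    """Order names so every location comes after the one it is copied from."""
    by_name = {d.name: d for d in destinations}
    ordered: List[str] = []

    def visit(name: str, path: Tuple[str, ...]) -> None:
        if name in ordered:
            return
        if name in path:
            raise ValueError(f"copy loop involving {name}")
        source = by_name[name].source
        if source is not None:
            visit(source, path + (name,))
        ordered.append(name)

    for destination in destinations:
        visit(destination.name, ())
    return ordered


plan = [
    Destination("C", "tarball", encrypted=True, source="B"),
    Destination("A", "rsync", encrypted=False),
    Destination("B", "rsync", encrypted=False, source="A"),
]
print(copy_order(plan))  # ['A', 'B', 'C']
```

Only location A would cost the Cloudron itself any CPU or bandwidth here; B and C are fed from the previous link in the chain.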

-

@marcusquinn yes, we will try to do this for the release after. I think it's important to support 3-2-1 style backups, or at least the 3-2 part, more easily.

-

A good way to handle 3-2-1 for Cloudron could be to replicate what Proxmox has done: delegate the replication to separate software (Proxmox Backup Server) installed on the destination backup server. There are 2 reasons for this: storage servers/VPSes often have few resources, but enough to handle replication, and they are really cheap. They are probably more expensive than Wasabi, but Wasabi is slow and not really cost-efficient for cold rsync backups.

I was looking around for a way to solve this issue without using a complicated setup like Ceph (like we did, and we would love to get out of it but that's another story).

I found Restic. It could be used instead of rsync, and it supports S3 but also its own REST server, which can run on the destination server of the backup. With its CLI, you can create a copy of one of the snapshots without involving the production server, and store it on an offline HDD or with other providers.

https://github.com/restic

https://github.com/restic/rest-server

-

@moocloud_matt said in [Backups] Ability to add multiple storage provider/location:

Restic

There's a bunch of discussion about that here:

https://forum.cloudron.io/post/2466

Sounds like both @necrevistonnezr and @fbartels have some experience with it

-

@jdaviescoates yes, restic is quite nice. I am however not using their server backend, but rather pushing backups to an S3 target.

I am however not sure how restic would solve the 3rd (offsite) part. I kind of achieve this by mirroring S3 buckets, but this is something I can already do when Cloudron is writing to S3.

-

@fbartels there is a command/API that can copy a snapshot to a new location.

(I just had time to check their documentation and do a basic install.)

-

@moocloud_matt ah, true. This however means that the copy needs to go through a local client (download & upload) and apparently also re-encrypts the data (with the potential of deduplication not working).

It's been ages since I last looked into restic, as it has "just worked" for me. The thing to highlight is that there has apparently been a successful handover of maintainership in the past, as the original author hasn't done any work on it himself in quite a while.

Another upside is its portability due to being written in golang.

Edit: if one wants to look into restic, https://autorestic.vercel.app/ is a nice wrapper to simplify setup and handling. My old systems still use bits of bash for that.
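
For reference, the snapshot copy discussed above could be scripted roughly like this. It is a minimal sketch assuming restic 0.14 or later, where `copy` takes `--from-repo`; the two repository URLs and the `restic_copy_command` helper are made up for the example, and passwords/credentials are expected to be supplied separately.

```python
import shlex
from typing import List


def restic_copy_command(source_repo: str, dest_repo: str, snapshot: str = "latest") -> List[str]:
    """Build the argv that copies one snapshot from source_repo into dest_repo."""
    # "-r" selects the repository being written to, "--from-repo" the one read from.
    return ["restic", "-r", dest_repo, "copy", "--from-repo", source_repo, snapshot]


command = restic_copy_command(
    "rest:https://backup.example.com:8000/",  # hypothetical rest-server repository
    "s3:s3.example.com/cold-backups",         # hypothetical S3 bucket
)
print(shlex.join(command))
```

Passing `command` to `subprocess.run(command, check=True)` on the storage server would perform the copy there, so the production server is not involved. On the deduplication concern, restic's documentation describes initialising the destination with `--copy-chunker-params` so that both repositories chunk data the same way; I have not tested that here.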

-

@fbartels said in [Backups] Ability to add multiple storage provider/location:

local client (download & upload)

True, but it is Go, and a storage server/VPS has enough resources to run both the server and the client in order to replicate to cold S3 storage.

@fbartels said in [Backups] Ability to add multiple storage provider/location:

Another upside is its portability due to being written in golang.

We also need to take into consideration that setting up a Go program is easy and can be done by almost anybody in the Cloudron community, and thanks to the REST API, Cloudron can manage the server very easily. If a Node.js or Python script were selected instead, it could be a lot more difficult to set up.

I think that Docker should be excluded as a way to distribute the destination server for the backup, because many providers of storage VPSes offer OpenVZ and not KVM. We would not have that issue, but many others will.

-

Don't forget about rclone.org

-

@robi

I actually don't like that the 3-2-1 is managed by the main server, because if that is compromised, your backups are compromised too. I think that if Cloudron wants to offer a better backup solution, it should have third-party software or a separate node in charge of the replication for the 2-1 part.

This will protect the backups from ransomware, or if your server is compromised.

-

Borrowing an answer from StackOverflow that may work:

- Minio Cloudron instance: using the command `mc mirror` on a cron job.

If that works, it could just be a case of documenting and maybe a GUI to make it user-friendly @girish ?
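
To make that concrete, here is a small sketch that generates the crontab line for such a job. It assumes two `mc` aliases have already been configured; `local/backups`, `friend/backups`, the 03:00 schedule and the `mirror_cron_line` helper are all placeholders, not anything Cloudron ships.

```python
import shlex


def mirror_cron_line(source: str, target: str, schedule: str = "0 3 * * *") -> str:
    """Return a crontab entry that mirrors one Minio bucket to another."""
    # --overwrite lets changed objects replace older copies on the target.
    command = ["mc", "mirror", "--overwrite", source, target]
    return f"{schedule} {shlex.join(command)}"


print(mirror_cron_line("local/backups", "friend/backups"))
# 0 3 * * * mc mirror --overwrite local/backups friend/backups
```

The sketch leaves out `--remove` on purpose, so that deletions on the source are not propagated to the friend's bucket.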

-

@marcusquinn

A full install of Cloudron wastes too many resources for many storage servers. We are talking about old CPUs (many of our storage servers have Haswell Xeons) or just 1 vCore (time4vps), and often without Docker support.

-

@moocloud_matt said in [Backups] Ability to add multiple storage provider/location:

I actually don't like that the 3-2-1 is managed by the main server, because if that is compromised you will have compromised also your backup.

That's the problem with traditional backups.

Next gen way of thinking about backups is simply having a much more resilient storage system. For example, when your data is sprinkled across 8 places and you only need 5 to restore any file/object. There are some very clever and efficient algorithms for this m of n approach which removes the need for 3x replication.

Minio can do this, and as a community we can pool resources to have 20+ places and only need 7 or so to be available at any one time. Maybe even start a coop.
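
To put numbers on the m of n idea, here is the back-of-the-envelope arithmetic as a short sketch. The helper names are made up, and it treats plain 3x replication as the "any 1 of 3" case; it says nothing about how Minio actually lays data out.

```python
from fractions import Fraction


def storage_overhead(m: int, n: int) -> Fraction:
    """Raw storage used per unit of data when any m of n pieces can restore it."""
    if not 0 < m <= n:
        raise ValueError("need 0 < m <= n")
    return Fraction(n, m)


def tolerated_losses(m: int, n: int) -> int:
    """How many of the n places can be unavailable at the same time."""
    return n - m


for label, m, n in [("3x replication", 1, 3), ("5 of 8", 5, 8), ("7 of 20", 7, 20)]:
    overhead = float(storage_overhead(m, n))
    print(f"{label}: {overhead:.2f}x storage, survives losing {tolerated_losses(m, n)} places")
```

So 5 of 8 stores 1.6x the data and survives losing 3 places, where 3x replication stores 3x and survives losing 2. The 7 of 20 pool stores about 2.86x and survives losing 13.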

-

@robi great idea. +1 for m of n approach with minio!

-

@robi said in [Backups] Ability to add multiple storage provider/location:

For example, when your data is sprinkled across 8 places and you only need 5 to restore any file/object.

True, that's why we are using Ceph, but it's not efficient (storage-wise). To protect the files we need to use snapshots or versioning in Ceph too, because if access to the bucket is compromised on the Cloudron side, all files can still be deleted/encrypted, even if they are split across multiple nodes. That reduces the whole advantage of software-defined/distributed storage to that of a normal NAS offered by the datacenter over NFS.

I would really like to better understand what pushed Proxmox to build a dedicated client for their storage server. What I have understood so far is that they want maximum protection made easy, which means that the SSH key used by their hypervisor server is not able to access the 2 and 1 copies of the backup.

I really don't care about what software/stack Cloudron will use. I just want to get out of Ceph for the backups and use a better setup that is not less safe.
