Disk space should never bring a whole server down

-

Right now I have a whole server down and because it's Saturday, can't get hold of anyone.

Running out of disk space should never bring a whole server down.

How about some safety margin that preserves 10% of disk space to keep the OS and Cloudron running?

This really isn't my area but I'm beyond words right now.

I've tried clearing out var/backups, numerous reboots - still a total fail

-

Last time we checked it was actually not easy to preserve space for the system as such, we would need support from the filesystem here.

Either way after clearing up some space, did you follow https://docs.cloudron.io/troubleshooting/#recovery-after-disk-full ?
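
To decide what to clear in the first place, it can help to see which directories are largest. A minimal sketch, assuming GNU `du`; the helper name `largest_dirs` is made up for illustration and is not part of Cloudron:

```shell
# Hypothetical helper (not a Cloudron tool): list the largest immediate
# subdirectories of a directory, sizes in KiB, biggest first.
# The first line of output is the total for the directory itself.
largest_dirs() {
    dir=$1
    count=${2:-10}
    # -x stays on one filesystem, -k reports KiB,
    # --max-depth=1 (GNU du) limits output to direct children.
    du -x -k --max-depth=1 "$dir" 2>/dev/null | sort -rn | head -n "$count"
}

# Example: largest_dirs /var 5
```

Running it on `/` and then on whichever child is biggest usually narrows things down in a few steps.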

-

@marcusquinn when you say whole server down, do you mean that the dashboard is unreachable? Is the box code running? Check `systemctl status box` and `systemctl status nginx`. If those two are up, things should be working.

AFAIK, there is no easy way to implement this feature. If a process starts writing to disk like crazy, because of a bug or intentionally, then other processes which write to disk start crashing. Unless the app itself is resilient to this, it's tricky to implement (I guess one approach is to also disk-sandbox all apps, but AFAIK that is not easy to do either).
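
The two status checks can be wrapped in a small loop. This is only a sketch: the function name `check_services` is an assumption, and it relies on nothing more than `systemctl is-active --quiet`, which exits non-zero when a unit is not active.

```shell
# Hypothetical helper: report whether each named systemd unit is active.
# Returns 1 if at least one unit is not active.
check_services() {
    status=0
    for svc in "$@"; do
        if systemctl is-active --quiet "$svc"; then
            echo "$svc: active"
        else
            echo "$svc: NOT active"
            status=1
        fi
    done
    return "$status"
}

# Example: show recent box logs only when something is down.
# check_services box nginx || journalctl -u box -n 50 --no-pager
```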

-

@nebulon Thanks - tried all of that but all was OK.

@girish yeah, the Dashboard.

I think I've found an outlier symptom but not worked out how to solve yet.

If I log in afresh with Firefox at my.example.com, I can get in again.

However, my main daily-driver "browser" for this is a WebCatalog (Electron) webapp.

So, here's the weird thing - I clear cookies and cache, and I can get to the login screen. I log in and get just a blank https://my.brandlight.org/#/apps page, with nothing showing.

All other Cloudron servers and instances working fine.

Since it's working in Firefox now, I think it's safe to say it's not so urgent. My feeling is that something went wrong when the disk filled up and the browser is now somehow confused.

Anyway, lower priority - I'll keep digging and see if I can find the cause/cure with some luck...

-

Ugh, nope, disk full again, whole server down. Makes me dislike weekends when this stuff happens.

No clue what to do, but the post title remains valid - maybe Apps need disk space limits, because I'm caught between hard server resets, brief times of access, and then lockout again.

-

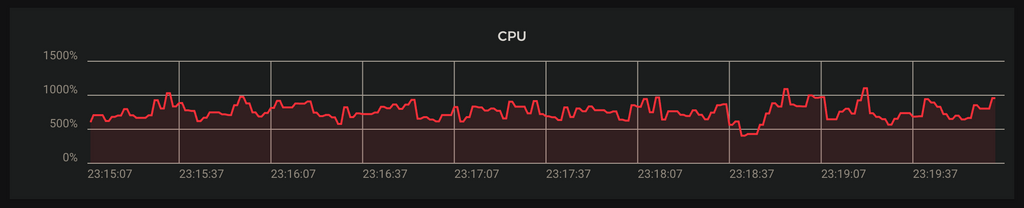

Whatever 1000% CPU is doing, it's not showing a Cloudron Dashboard:

-

    # systemctl status box
    ● box.service - Cloudron Admin
       Loaded: loaded (/etc/systemd/system/box.service; enabled; vendor preset: enabled)
       Active: activating (auto-restart) (Result: exit-code) since Sat 2021-03-06 23:22:48 UTC; 88ms ago
      Process: 311 ExecStart=/home/yellowtent/box/box.js (code=exited, status=1/FAILURE)
     Main PID: 311 (code=exited, status=1/FAILURE)

    # systemctl status nginx
    ● nginx.service - nginx - high performance web server
       Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
      Drop-In: /etc/systemd/system/nginx.service.d
               └─cloudron.conf
       Active: active (running) since Sat 2021-03-06 23:09:24 UTC; 14min ago
         Docs: http://nginx.org/en/docs/
      Process: 1431 ExecStart=/usr/sbin/nginx -c /etc/nginx/nginx.conf (code=exited, status=0/SUCCESS)
     Main PID: 1634 (nginx)
        Tasks: 17 (limit: 4915)
       CGroup: /system.slice/nginx.service
               ├─1634 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
               ├─1638 nginx: worker process
               ├─1639 nginx: worker process
               ├─1641 nginx: worker process
               ├─1642 nginx: worker process
               ├─1645 nginx: worker process
               ├─1646 nginx: worker process
               ├─1647 nginx: worker process
               ├─1648 nginx: worker process
               ├─1649 nginx: worker process
               ├─1650 nginx: worker process
               ├─1651 nginx: worker process
               ├─1652 nginx: worker process
               ├─1653 nginx: worker process
               ├─1654 nginx: worker process
               ├─1655 nginx: worker process
               └─1656 nginx: worker process

    Mar 06 23:09:23 cloudron01 systemd[1]: Starting nginx - high performance web server...
    Mar 06 23:09:24 cloudron01 systemd[1]: Started nginx - high performance web server.

Sorry, I have to work evenings and weekends, it's the only time I can concentrate on the deep work without all the emails & messages interruptions of the week days.

-

    # systemctl status unbound
    ● unbound.service - Unbound DNS Resolver
       Loaded: loaded (/etc/systemd/system/unbound.service; enabled; vendor preset: enabled)
       Active: active (running) since Sat 2021-03-06 23:26:38 UTC; 8s ago
     Main PID: 20802 (unbound)
        Tasks: 1 (limit: 4915)
       CGroup: /system.slice/unbound.service
               └─20802 /usr/sbin/unbound -d

    Mar 06 23:26:38 cloudron01 systemd[1]: Started Unbound DNS Resolver.
    Mar 06 23:26:38 cloudron01 unbound[20802]: [20802:0] notice: init module 0: subnet
    Mar 06 23:26:38 cloudron01 unbound[20802]: [20802:0] notice: init module 1: validator
    Mar 06 23:26:38 cloudron01 unbound[20802]: [20802:0] notice: init module 2: iterator
    Mar 06 23:26:38 cloudron01 unbound[20802]: [20802:0] info: start of service (unbound 1.6.7).
    Mar 06 23:26:39 cloudron01 unbound[20802]: [20802:0] error: could not fflush(/var/lib/unbound/root.key): No space left on device
    Mar 06 23:26:39 cloudron01 unbound[20802]: [20802:0] error: could not fflush(/var/lib/unbound/root.key): No space left on device

-

I just cannot get my head around how the disk can be allowed to fill to the point of a total system failure.

Slowdown, sure, I understand - but it's a total fail and I can't see why this isn't preventable.

Is loading it up with data really all one has to do to bring a Cloudron down?

There's a bunch of Apps that allow for uploads, it really wouldn't take much effort to flood those with a few GB.

-

@nebulon @girish Apps have memory & CPU allocations - any reason they can't have disk-space allocations too?

I'd rather a single app hits a wall than an entire server.

It seems all one would have to do to bring a whole Cloudron down this way would be send a lot of email attachments to the point of disk saturation.

Maybe I'm wrong and it's something else - but feel free to delete this post and move to email if it's a reproducible risk.

-

@marcusquinn said in Disk space should never bring a whole server down:

@nebulon @girish Apps have memory & CPU allocations - any reason they can't have disk-space allocations too?

Yes, the memory & CPU allocations are features of the Linux kernel cgroups. However, disk space allocation is not part of them.

I guess the issue to handle right now, at least, is that for some reason the disk is full. Running `docker image prune -a` sometimes frees up some disk space. Can you try that? Alternatively, if you drop me a mail on support, I can look into the server.

-

@girish said in Disk space should never bring a whole server down:

docker image prune -a

OK, thanks, tried that: "Total reclaimed space: 816.4MB"

Still no respondio though. Have emailed support@ but 2am here and an early start, so back online in 8h or so, by which it'll be your 2am, and appreciate it's Saturday, so just grateful for pointers and hoping I might have some other requests for assistance waking up soon too.

-

Can we add a disk space email alert, and an event where, after some critical threshold, /tmp is cleaned up and Docker images are pruned by Cloudron?

This situation is completely avoidable with a bit of that.
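
A rough sketch of what such a threshold check could look like when run from cron. Everything here is an assumption for illustration (the function names, the 90% threshold, the cleanup action); it is not existing Cloudron behaviour.

```shell
# Sketch only: names and threshold are hypothetical.

# Print the used percentage (integer, no % sign) of the filesystem holding $1.
disk_used_percent() {
    # -P forces POSIX single-line records; column 5 is the capacity in percent.
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Return 0 when usage of $1 is at or above the threshold $2 (percent).
over_threshold() {
    [ "$(disk_used_percent "$1")" -ge "$2" ]
}

# Example for a cron job:
# if over_threshold / 90; then
#     docker image prune -a -f   # -f skips the confirmation prompt
#     echo "Disk usage on / is at $(disk_used_percent /)%"   # send this by whatever alerting is available
# fi
```

A check like this only buys time: a process that writes quickly can still fill the disk between two runs.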

I'm wondering if maybe Cloudron should have its own volume by default.

A quick search on the subject, but I'm kinda tired now:

- https://www.reddit.com/r/docker/comments/loleal/how_to_limit_disk_space_for_a_docker_container/

- https://guide.blazemeter.com/hc/en-us/articles/115003812129-Overcoming-Container-Storage-Limitation-Overcoming-Container-Storage-Limitation#:~:text=In%20the%20current%20Docker%20version,be%20left%20in%20the%20container

- https://stackoverflow.com/questions/38542426/docker-container-specific-disk-quota
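
For context on what those links discuss: `docker run` accepts memory and CPU limits everywhere, but its disk limit (`--storage-opt size=...`) only works with some storage drivers. A hedged sketch; the function name and the limit values are made up, and this is not how Cloudron starts its apps:

```shell
# Sketch: start a container with memory, CPU and (driver permitting) disk limits.
# --memory and --cpus are enforced through cgroups.
# --storage-opt size=... is only honoured by some storage drivers; with
# overlay2 it needs an xfs backing filesystem mounted with the pquota option,
# otherwise docker refuses to create the container.
# The size limit covers the container's writable layer, not volumes or bind mounts.
run_limited() {
    image=$1
    docker run --detach \
        --memory 512m \
        --cpus 1.5 \
        --storage-opt size=10G \
        "$image"
}

# Example: run_limited nginx:latest
```

Because the limit does not extend to volumes, data that an app stores in a mounted volume would still be uncapped.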

-

@marcusquinn Managed to bring it up by truncating many logs. Should be coming up in a bit, hold on.

@girish Ahhhh - thank you kindly!

I have an unused 1TB volume mounted, although I'm not sure how much of the remaining free space the Move function uses. I guess that was what killed it when I triggered the move of the 16GB Jira app data to that volume?

-

@girish said in Disk space should never bring a whole server down:

Managed to bring it up by truncating many logs

Is this perhaps related to the issue I reported a little while back too, regarding the logrotate not running properly under certain circumstances?
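
For anyone landing here with the same problem, truncating oversized logs can be sketched as below. Which logs were actually truncated on this server is not stated in the thread; the helper name, the `*.log` pattern and the size are assumptions, and it relies on GNU `find` and coreutils `truncate`.

```shell
# Hypothetical helper: empty every *.log file under $1 that is larger than $2
# (a find(1) size such as 100M). Truncating keeps the file and its inode, so a
# process that still has the log open keeps writing to the same file.
truncate_large_logs() {
    # -xdev stays on one filesystem; the + form batches files per truncate call.
    find "$1" -xdev -type f -name '*.log' -size +"$2" -exec truncate -s 0 {} +
}

# Example: truncate_large_logs /var/log 100M
```

Unlike deleting an open log file, truncating releases the space immediately without restarting the process that writes to it.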

-

@d19dotca I remembered that mention, although with a fading brain I never found it or got around to looking at it. I kinda think this situation is a bit too easy to get into and hard to get out of once it's terminal-only.

-

Going to trigger a move of Confluence to the mounted volume. It's 4.5GB, with 7.5GB free space now on the main volume, so hopefully that's enough working space. But I have to zzz; problems that I don't immediately know how to solve are kinda exhausting.
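
How much working space Cloudron's move needs, and on which volume, is not stated in this thread. As a generic pre-flight check, assuming a full copy of the data is written to some volume, the comparison could be sketched like this (the helper name is hypothetical):

```shell
# Hypothetical pre-flight check: is there room on the filesystem holding $2
# for a full copy of directory $1? Sizes are compared in KiB.
has_room_for() {
    need=$(du -sk "$1" | awk '{ print $1 }')
    free=$(df -Pk "$2" | awk 'NR == 2 { print $4 }')
    echo "need ${need} KiB, free ${free} KiB"
    [ "$free" -gt "$need" ]
}

# Example: has_room_for /path/to/app/data /mnt/volume || echo "not enough space"
```

With the figures above (4.5GB of data, 7.5GB free), a single full copy would fit, but not two.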
