Here is the start of an n8n workflow.

Copy and paste all of the JSON below into your n8n instance; it will detect the JSON and create the nodes.

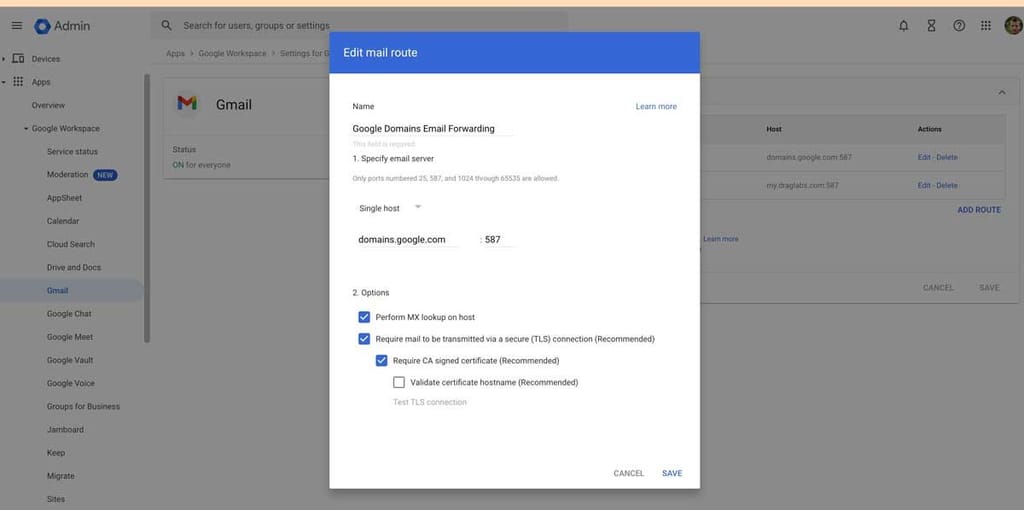

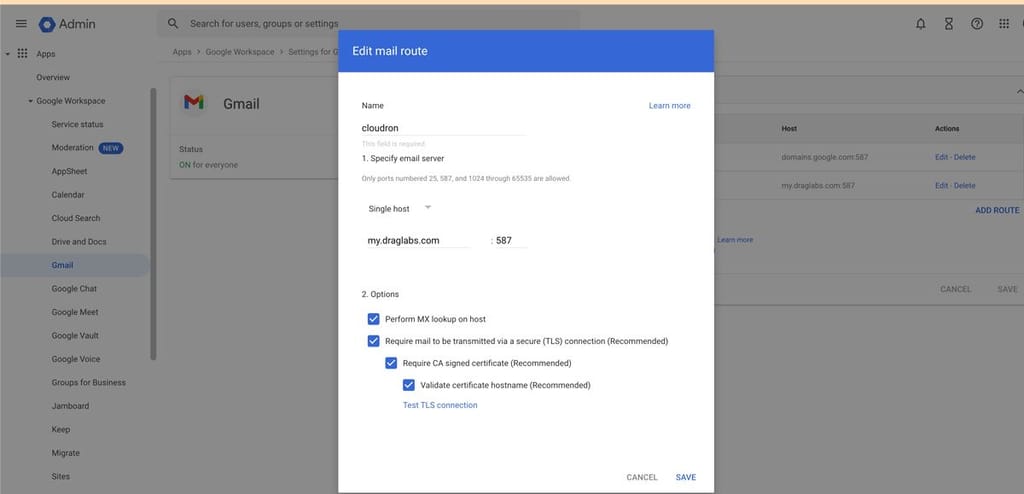

We are using query auth here because we have not figured out how to set up Bearer token auth.

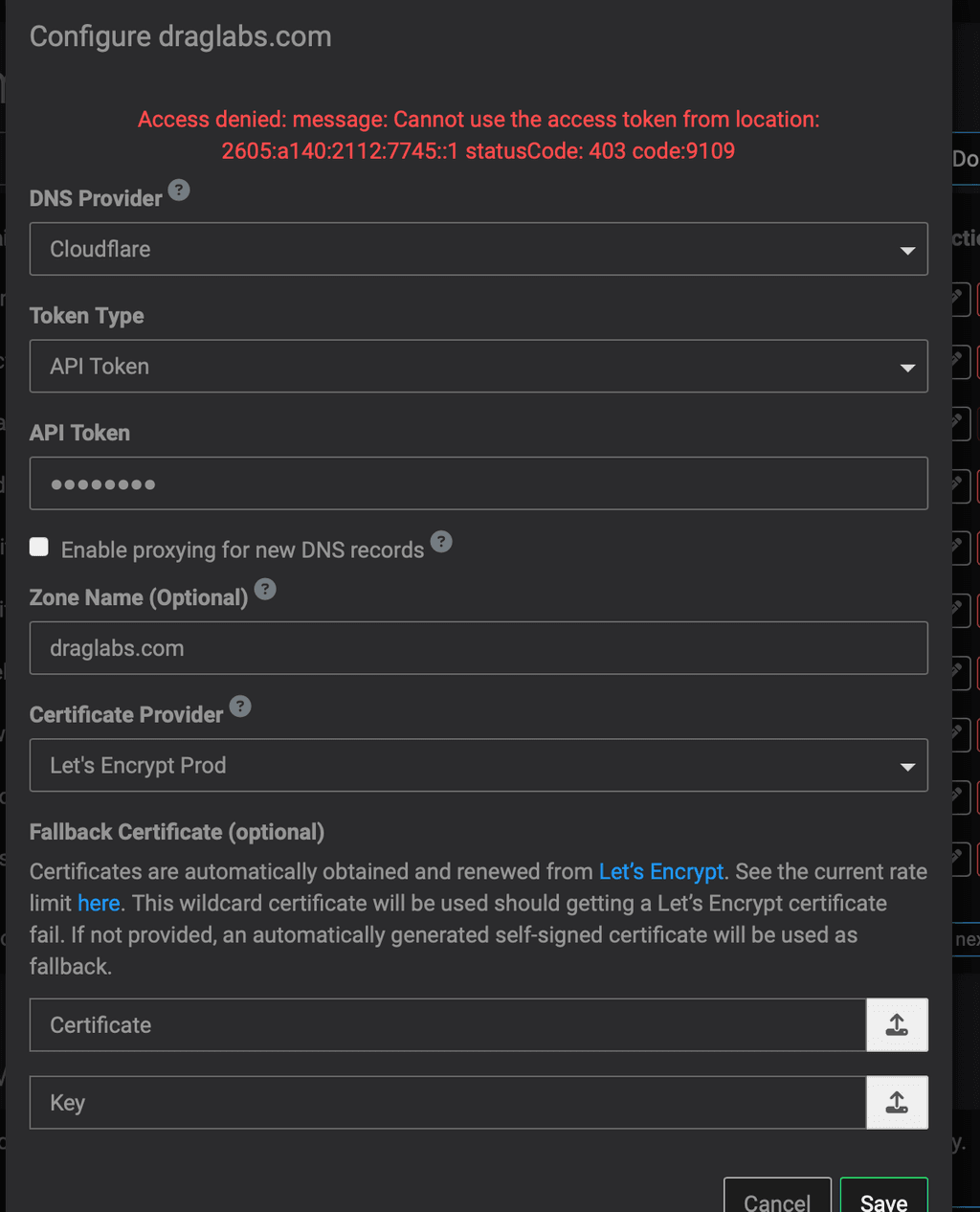

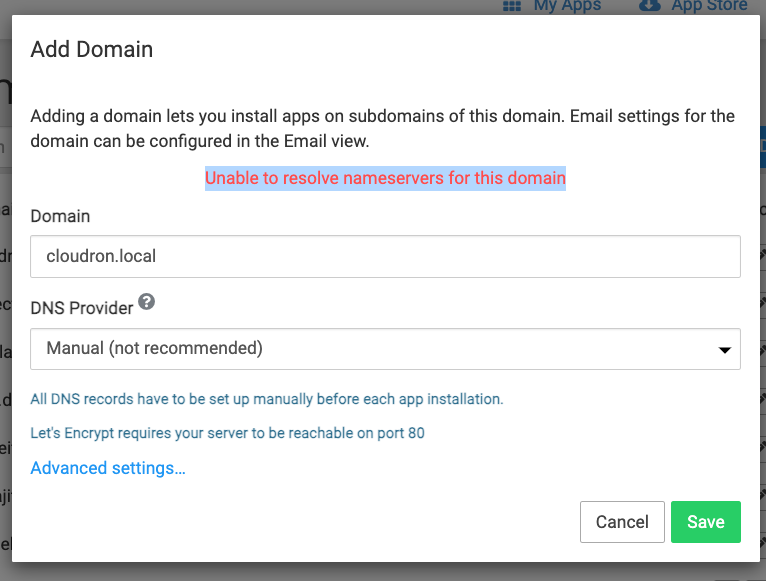
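On the Bearer token question: as far as I know, the Cloudron API also accepts the token in an `Authorization: Bearer <token>` header, so the request could be shaped roughly like the sketch below. The helper name `buildCloudronRequest` and the placeholder token are made up for illustration; they are not part of the workflow.

```javascript
// Hypothetical helper (not part of the workflow): builds the URL and headers
// for a Cloudron API call using header-based Bearer auth instead of a query parameter.
function buildCloudronRequest(baseUrl, path, token) {
  const url = new URL(path, baseUrl);
  return {
    method: 'GET',
    url: url.toString(),
    // The token goes in the Authorization header rather than in the URL.
    headers: { Authorization: `Bearer ${token}` },
  };
}

// 'YOUR_API_TOKEN' is a placeholder; substitute a real Cloudron API token.
const request = buildCloudronRequest(
  'https://my.demo.cloudron.io',
  '/api/v1/apps',
  'YOUR_API_TOKEN'
);
console.log(request.url, request.headers);
```

In n8n itself this probably maps to a Header Auth credential with the name set to `Authorization` and the value set to `Bearer YOUR_API_TOKEN`, with the HTTP Request node switched from Query Auth to Header Auth. I have not verified that against this exact workflow.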

We are also having trouble figuring out how to tell the destination Cloudron to pull a backup from a remote location.

{
  "meta": {
    "instanceId": "8d8e1b7ceae09105ea1231dc6c31045b9d36dc713f8e22970f6eacf9f1f4d996"
  },
  "nodes": [
    {
      "parameters": {},
      "id": "4be687c9-4a4b-4be3-a8fc-d1405a66fa95",
      "name": "When clicking \"Execute Workflow\"",
      "type": "n8n-nodes-base.manualTrigger",
      "typeVersion": 1,
      "position": [
        680,
        320
      ]
    },
    {
      "parameters": {
        "url": "https://my.demo.cloudron.io/api/v1/apps",
        "authentication": "genericCredentialType",
        "genericAuthType": "httpQueryAuth",
        "options": {}
      },
      "id": "33c66a41-753b-491a-bf03-8683f86b95c5",
      "name": "PullAppsFromSource",
      "type": "n8n-nodes-base.httpRequest",
      "typeVersion": 4.1,
      "position": [
        900,
        320
      ],
      "credentials": {
        "httpHeaderAuth": {
          "id": "11",
          "name": "RobCloudron-Demo"
        },
        "httpQueryAuth": {
          "id": "12",
          "name": "Query Auth account"
        }
      }
    },
    {
      "parameters": {
        "jsCode": "// Loop over input items and add a new field called 'myNewField' to the JSON of each one\nfor (const item of $input.all()) {\n item.json.myNewField = 1;\n}\n\nreturn $input.all();"
      },
      "id": "a1d203d0-4d01-421a-9d88-7299f8f515ce",
      "name": "ManipulateResponse",
      "type": "n8n-nodes-base.code",
      "typeVersion": 1,
      "position": [
        1120,
        320
      ]
    },
    {
      "parameters": {
        "url": "=https://my.demo.cloudron.io/api/v1/apps/{{ $json.apps[0].id }}/backups",
        "authentication": "genericCredentialType",
        "genericAuthType": "httpQueryAuth",
        "options": {}
      },
      "id": "9c925efa-458f-4599-8172-7d9fafc3ce23",
      "name": "PullBackupsFromSource",
      "type": "n8n-nodes-base.httpRequest",
      "typeVersion": 4.1,
      "position": [
        1320,
        320
      ],
      "credentials": {
        "httpHeaderAuth": {
          "id": "11",
          "name": "RobCloudron-Demo"
        },
        "httpQueryAuth": {
          "id": "12",
          "name": "Query Auth account"
        }
      }
    }
  ],
  "connections": {
    "When clicking \"Execute Workflow\"": {
      "main": [
        [
          {
            "node": "PullAppsFromSource",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "PullAppsFromSource": {
      "main": [
        [
          {
            "node": "ManipulateResponse",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "ManipulateResponse": {
      "main": [
        [
          {
            "node": "PullBackupsFromSource",
            "type": "main",
            "index": 0
          }
        ]
      ]
    }
  }
}
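
The ManipulateResponse node still contains the default template code, and PullBackupsFromSource only looks at `apps[0]`. A possible next step, sketched here under the assumption that each incoming item carries an `apps` array whose entries have an `id` (as the URL expression in the workflow implies), is to emit one item per app so the backups request runs once for each. The function name `splitApps` and the output field `appId` are hypothetical.

```javascript
// Sketch of logic for the ManipulateResponse Code node.
// Assumes each incoming item looks like { json: { apps: [{ id: ... }, ...] } }.
function splitApps(items) {
  const out = [];
  for (const item of items) {
    // Emit one n8n-style item per app; skip items without an apps array.
    for (const app of item.json.apps ?? []) {
      out.push({ json: { appId: app.id } });
    }
  }
  return out;
}

// Inside the n8n Code node this would be: return splitApps($input.all());
// Outside n8n, sample data stands in for the node input:
const sample = [{ json: { apps: [{ id: 'app-1' }, { id: 'app-2' }] } }];
const result = splitApps(sample);
console.log(result);
```

With items shaped like this, the PullBackupsFromSource URL would reference `{{ $json.appId }}` instead of `{{ $json.apps[0].id }}`.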