App Packaging & Development

App package development & help

292 Topics, 2.7k Posts

Subcategories


Help Wanted or Offered

Looking to collaborate? Post here if you need help or are willing to offer it.


Cloudron CLI - Updates (pinned)

App contributions hall of fame (pinned, moved)

Translators hall of fame (pinned, moved)

-

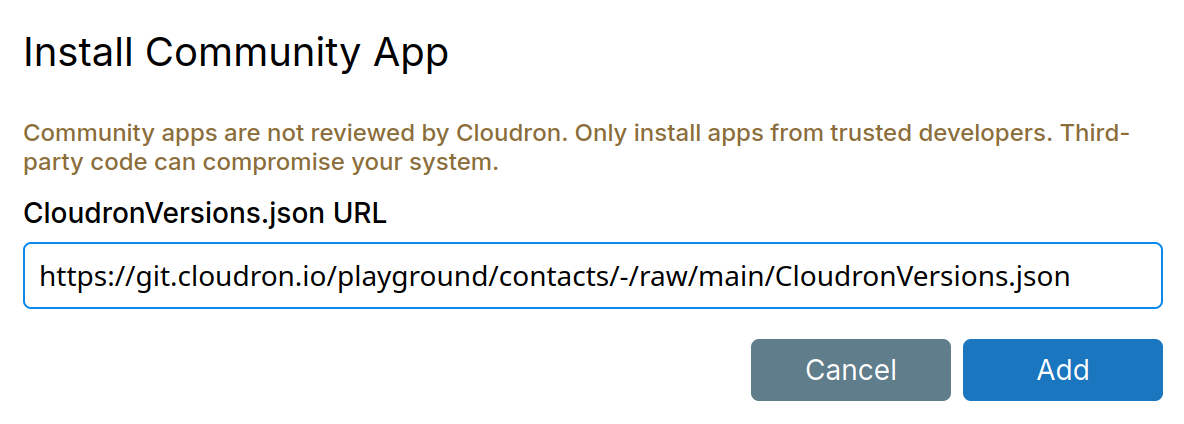

Building custom packages (pinned)
