6.3.3 a few quirks

-

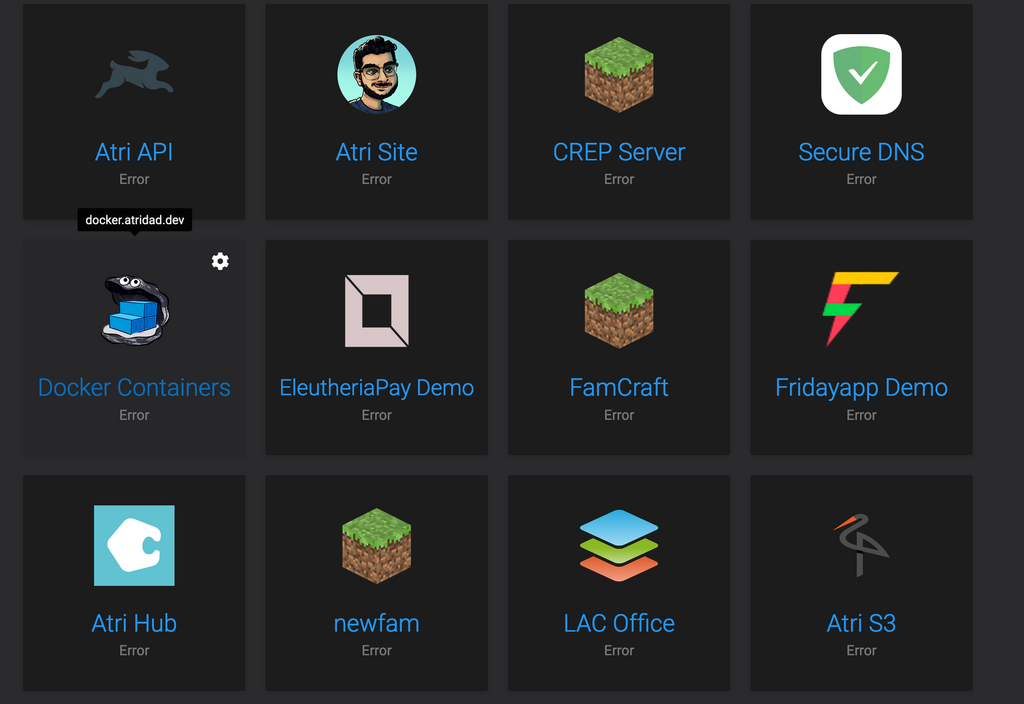

I have a similar issue where 55 apps were backed up, but then many had to reconfigure so that took 20m, and all of the apps redirect to the "you found a cloudron in the wild" page.

Restarted Nginx from services menu, no change.

Restarted box from CLI, no change. (It seems we could also use a restart button for the cloudron service.)

All my apps are offline.

Update: After 30m or so, apps are coming back online.

-

The update has to do a "rebuild" of all the containers and services because of the multi-host changes. This can take a while depending on how fast the server's CPU and disk are. It's best to follow the progress under /home/yellowtent/platformdata/logs/box.log. That said, 30m does seem quite excessive. Maybe the databases are taking a long time to come up? If you can see in the logs where the most time is spent, that would be good to know.

-

@girish Hi Girish, I think I had mentioned this a long time ago, but for what it's worth... I think it'd be helpful to include in the release notes a bit of an "info/alert" when there is to be more downtime than usual.

For me, it's alarming and jarring to see a random lengthy update when the usual update time to Cloudron is only a minute or two. So when it unexpectedly takes 10-30+ minutes (depending on performance of the server I guess) due to the containers needing to be re-created for example, it's not the best user experience.

I tend to make "30 minute" maintenance windows to sort of cover myself (and during off-peak hours of course) so the length of downtime isn't necessarily the main issue, but for me at least I was caught by surprise at the length it was taking and started to panic a little thinking something was wrong because it's so unusual for it to take that long. Thankfully I saw the containers being recreated when I looked at the system logs so knew it was okay and just needed some time, but I think having that pre-warning would be very helpful so admins at least know to expect a lengthier update instead of the usual update time.
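For anyone wanting to answer the question of where the most time is spent in box.log, here is a minimal sketch that lists the longest pauses between consecutive log lines. It assumes each line starts with an ISO 8601 timestamp (for example `2021-08-10T03:14:07.123Z`); that format is an assumption, not something confirmed in this thread, so adjust the pattern to whatever your log actually contains. The function names are made up for illustration.

```python
import re
from datetime import datetime

# Assumption: every log line starts with an ISO 8601 timestamp such as
# 2021-08-10T03:14:07.123Z followed by whitespace. Adjust if box.log differs.
TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.\d+)?Z?\s")


def parse_timestamp(line):
    """Return the leading timestamp of a log line, or None if there is none."""
    match = TIMESTAMP.match(line)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y-%m-%dT%H:%M:%S")


def largest_gaps(lines, top=5):
    """Return up to `top` (seconds, line_before_gap) pairs, longest gap first."""
    gaps = []
    prev_time, prev_line = None, None
    for line in lines:
        current = parse_timestamp(line)
        if current is None:
            continue  # skip lines without a timestamp (e.g. stack traces)
        if prev_time is not None:
            seconds = (current - prev_time).total_seconds()
            gaps.append((seconds, prev_line.rstrip("\n")))
        prev_time, prev_line = current, line
    gaps.sort(key=lambda gap: gap[0], reverse=True)
    return gaps[:top]


# Example usage (path taken from the post above):
# with open("/home/yellowtent/platformdata/logs/box.log", errors="replace") as f:
#     for seconds, line in largest_gaps(f):
#         print(f"{seconds:8.0f}s after: {line}")
```

The line printed next to each gap is the last thing logged before the pause, which is usually the step that was slow.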

-

Having the same issue.

Is this what others experienced?

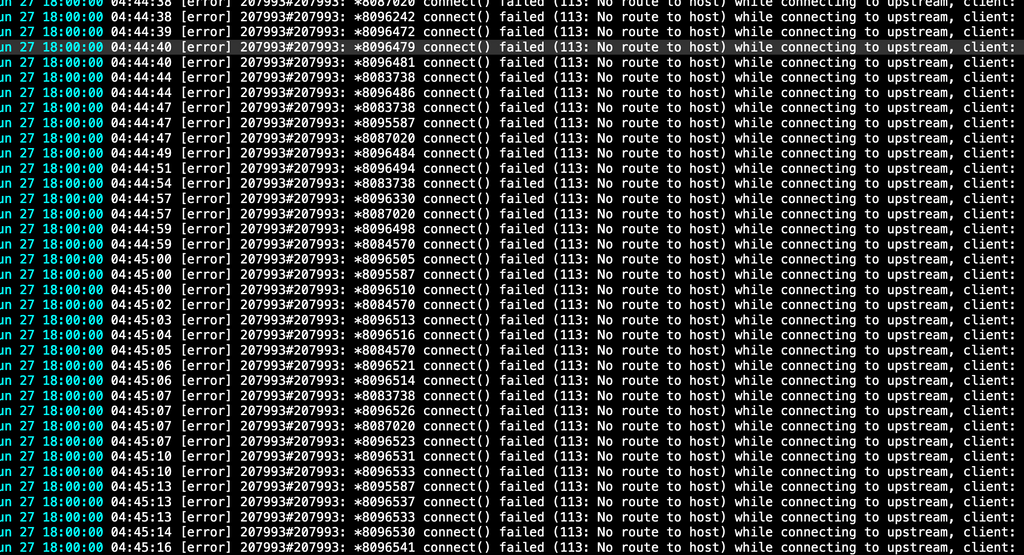

@girish This is my nginx logs... I don't have physical access to the server right now but I can drive over to take a look if you have ideas.

-

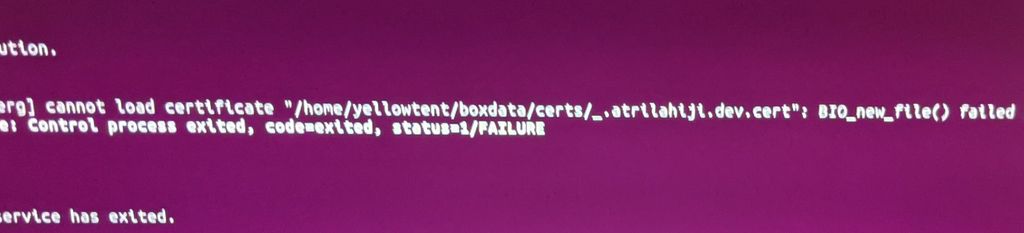

Ok so now after a restart it looks like nginx just refuses to start...

Fuck... guess I'm nuking my install, restoring from Wasabi, and praying to the deities I don't believe in that it just goes back to normal.

@staff any idea what might have gone wrong? Can I set up a time with one of you to do a supervised update?

Also I guess the contents of my volumes are fucked as well...

-

This seems to be the error...

-

@atridad That's not good, do you think I can have access to your server? If you enable SSH access, I can look into first thing tomorrow. thanks and sorry for the issue!

@girish Sure. I'll DM you

EDIT: That works. Only thing is I can't access the dashboard, which makes it hard to enable SSH access. I can make you an account if you need? I'm going to be up for the next 2 hours or so, so let me know and I can set things up.

-

@msbt said in 6.3.3 a few quirks:

cloudron-support --enable-ssh

I love you.

Seriously though thanks. I was pacing around trying to figure out how to do this lol

-

Ok, at least for @atridad the issue was that for some reason old dashboard nginx configs were still around and referencing old SSL certificates which had since been purged from the system. This made nginx fail to start up, causing the rest of the side effects.
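If you suspect the same cause, running `nginx -t` should report the first config file that fails to load. To list every stale reference at once, a rough sketch like the following scans a directory of nginx configs for `ssl_certificate` and `ssl_certificate_key` directives that point at files which no longer exist. The function name and the `config_dir` argument are hypothetical; pass whichever directory holds the nginx configs on your server. It also assumes absolute, unquoted paths in the directives.

```python
import os
import re

# Matches nginx `ssl_certificate` and `ssl_certificate_key` directives and
# captures the file path. Assumes absolute, unquoted paths.
DIRECTIVE = re.compile(r"^\s*ssl_certificate(?:_key)?\s+([^;\s]+)\s*;", re.MULTILINE)


def missing_certificates(config_dir):
    """Yield (config_file, cert_path) for each referenced file that is missing."""
    for root, _dirs, files in os.walk(config_dir):
        for name in files:
            if not name.endswith(".conf"):
                continue
            config_file = os.path.join(root, name)
            with open(config_file, errors="replace") as f:
                text = f.read()
            for cert_path in DIRECTIVE.findall(text):
                if not os.path.isfile(cert_path):
                    yield config_file, cert_path


# Example usage (the directory is a placeholder, not a confirmed Cloudron path):
# for config_file, cert_path in missing_certificates("/path/to/nginx/configs"):
#     print(f"{config_file} references missing {cert_path}")
```

This only reports the stale references; what to do with the affected config files is a separate decision, so take a backup before removing anything.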

-

@nebulon said in 6.3.3 a few quirks:

Ok, at least for @atridad the issue was that for some reason old dashboard nginx configs were still around and referencing old SSL certificates which had since been purged from the system. This made nginx fail to start up, causing the rest of the side effects.

That had been my problem as well (more or less): https://forum.cloudron.io/topic/5263/cloudron-does-not-start-after-update-to-6-3-3

-

@necrevistonnezr I just noticed your thread... whoops!

-

@girish - I noticed 6.3.4 is out now... is this one that will again need more time than usual to update, or will it be a typical real quick one with nearly no downtime needed?
