Email sending broken after updating to 8.2.x (due to IPv6 issues)

-

The PTR record is actually set by your server provider, not through Cloudron. Somehow I can't see how this would be connected to an app update, since Cloudron cannot programmatically set the PTR in any way.

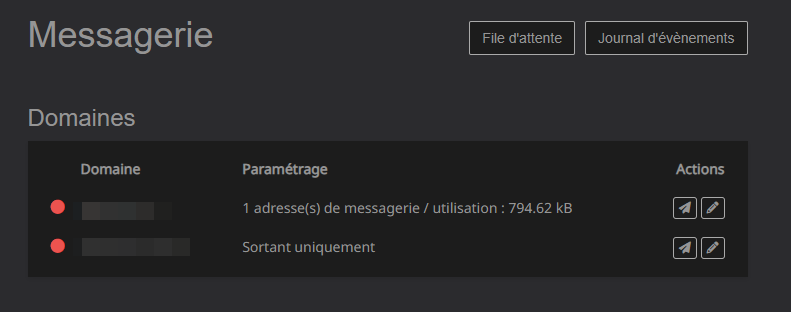

@nebulon It seems the issue is simply that Cloudron keeps reporting the PTR record as incorrect when it actually appears to be correct.

-

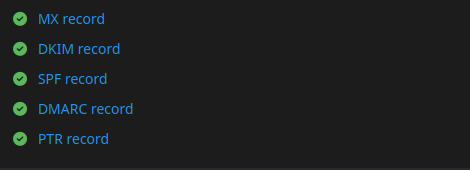

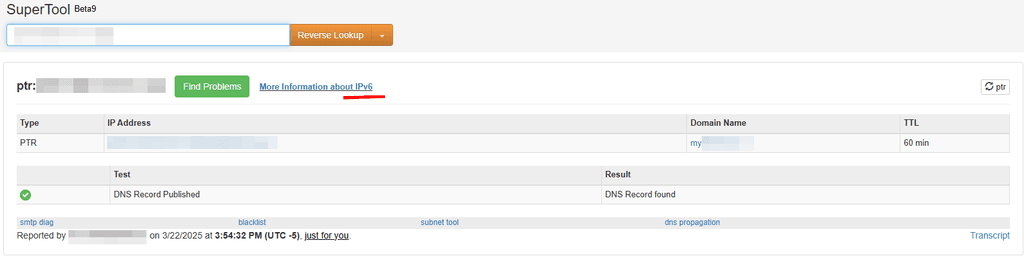

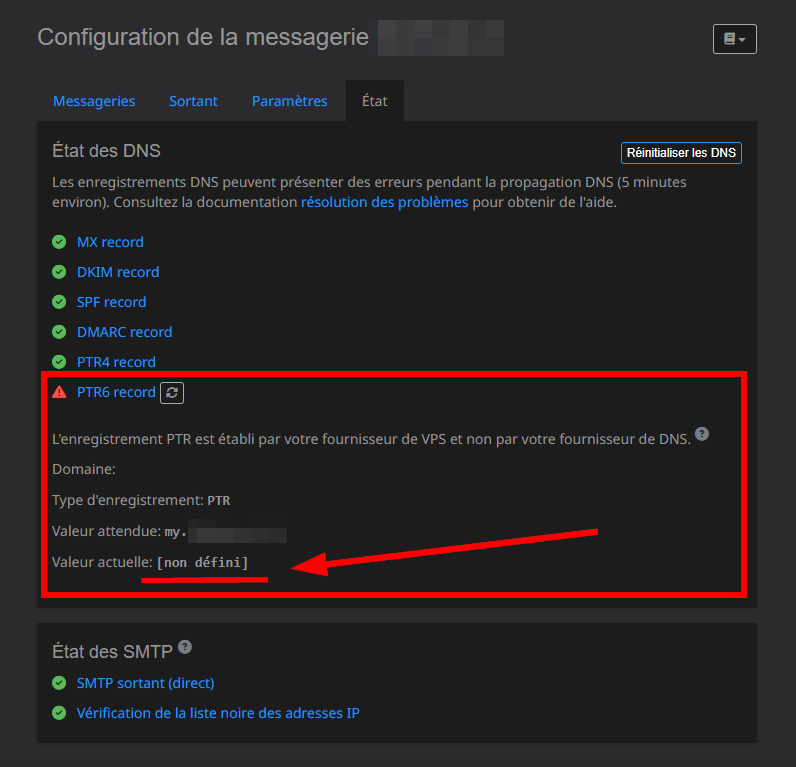

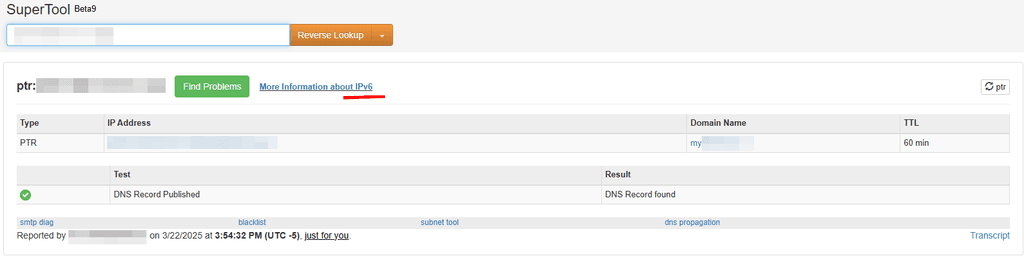

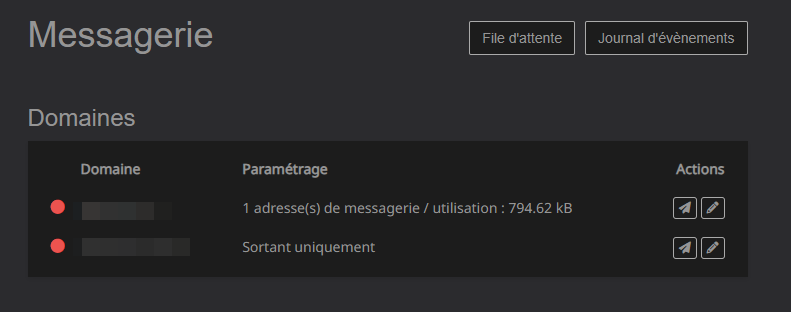

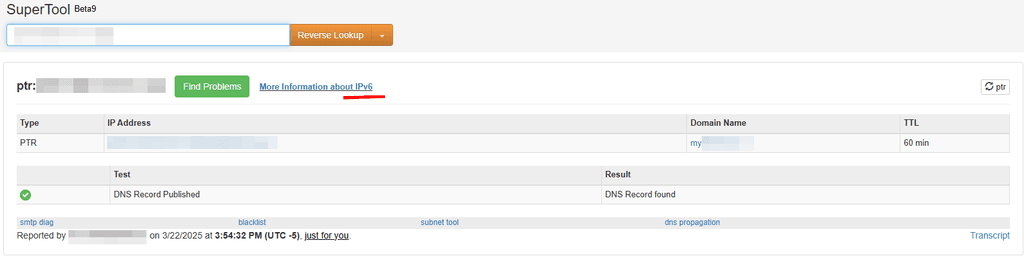

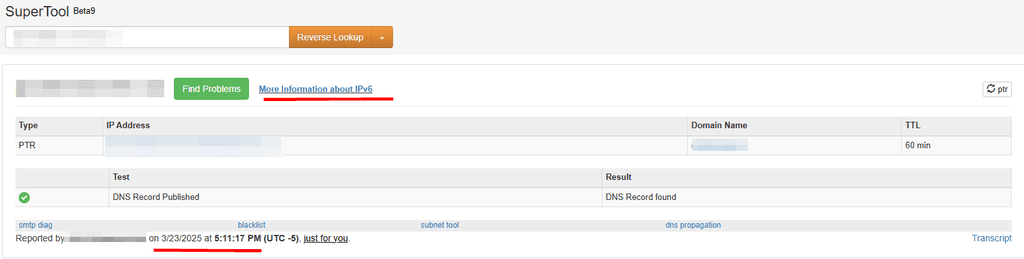

@nebulon Yeah, I’m aware the PTR6 record is set by my VPS provider, and I’ve double-checked that it’s configured correctly on their end. MXToolBox confirms everything looks good.

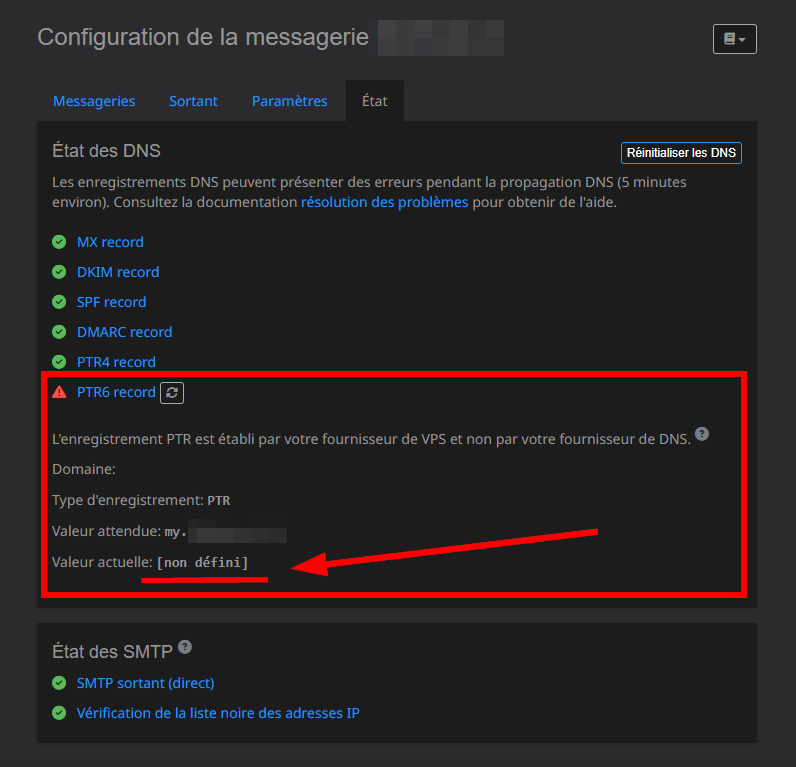

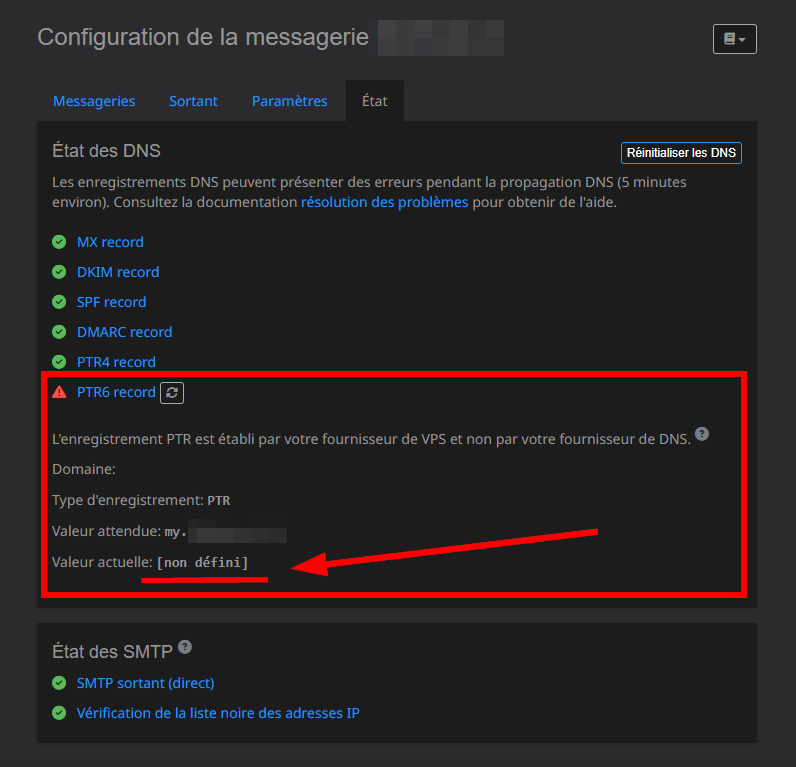

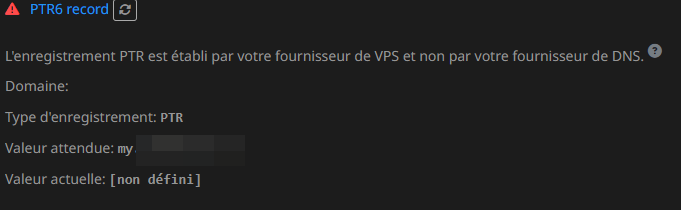

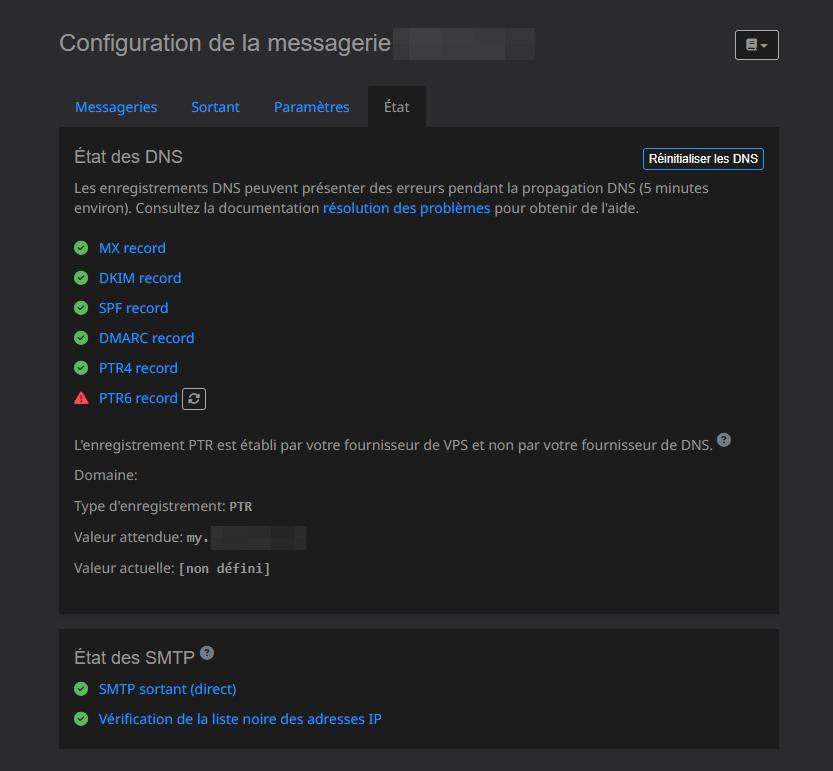

The issue seems to be on Cloudron’s side: it occasionally shows the PTR6 record as “null”. But this only happens after some time — for example, right after a full reboot, everything works fine. Then, 24 to 48 hours later, Cloudron throws an error saying the email setup isn’t correct, and it shows the PTR6 value as “null”.

What’s strange is that MXToolBox still shows the correct PTR6 value, exactly as set on my VPS provider’s side. So the record itself is definitely working.

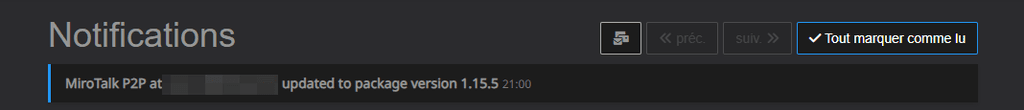

I’ve noticed this often happens after an app update. I’m not 100% sure it’s the cause, but every time the issue appears, it’s usually right after an update.

Not sure if this is a Cloudron issue or something with Ubuntu, but for some reason, only my server fails to resolve the PTR6 correctly after a while.

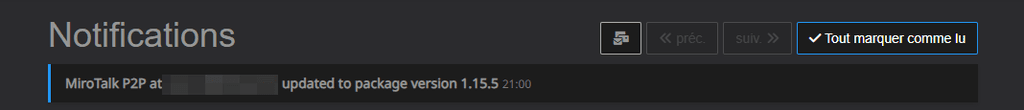

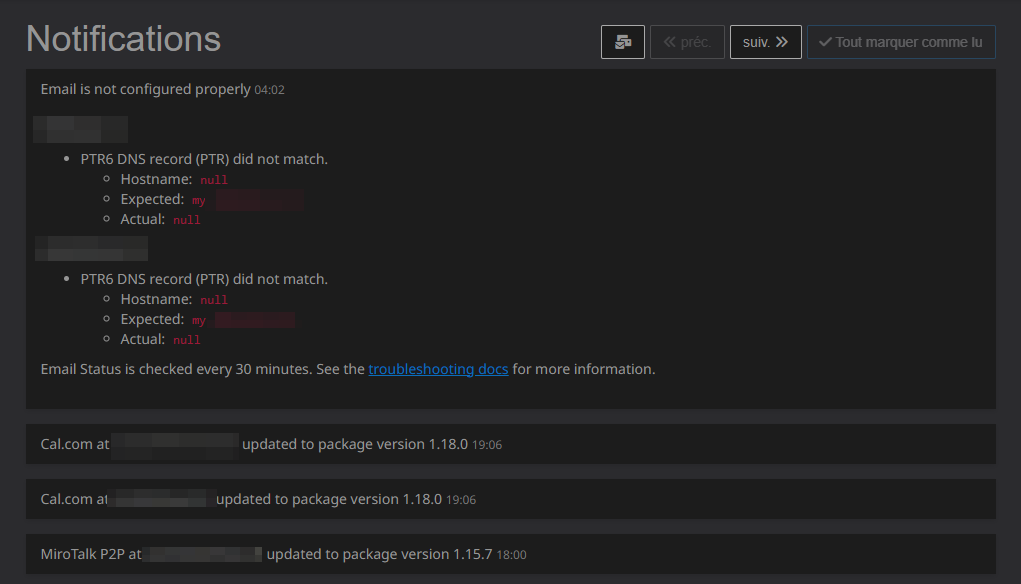

Edit @ 9:00 PM CET: MiroTalk P2P has been updated automatically at 9PM CET. And atm (9:05PM CET) my PTR6 record is still resolved correctly by Cloudron. I will monitor.

@joseph => fyi

Edit @ 9:40 PM CET : Cloudron doesn't resolve my PTR6 record anymore...

But on MXToolBox Side it's still working fine :

It's only cloudron that returns a "null" value for PTR6.

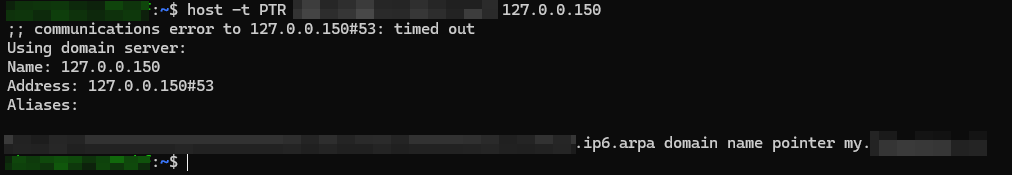

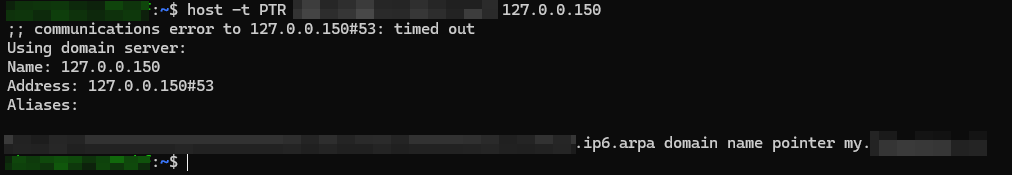

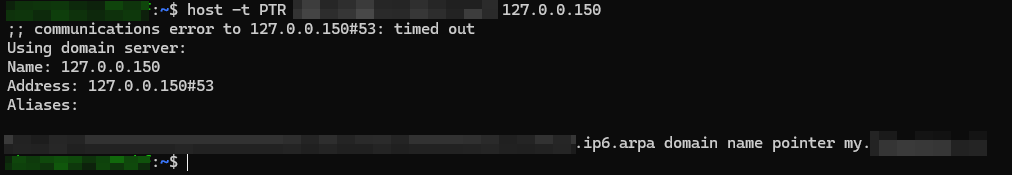

And I guess the value is null because when I do :

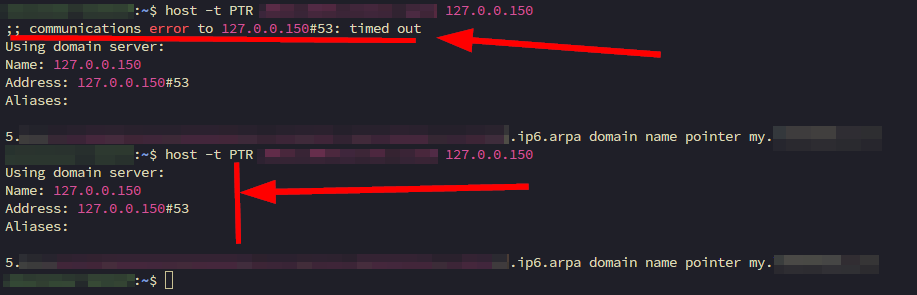

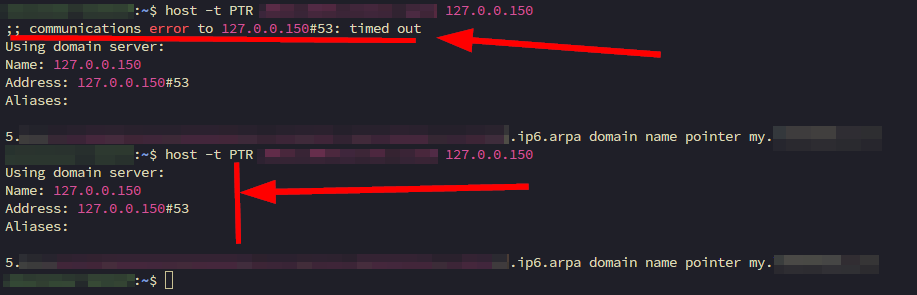

`host -t PTR <ip6> 127.0.0.150`

I get a timeout...

BTW, I use Uptime Kuma to notify me on Telegram each time there is a notification in Cloudron. And usually notifications are about automatic app updates and my Cloudron will fail to resolve my PTR6 record after this. In this example it was about 40 minutes after the update / the notification. Could also be linked to notifications, idk. But there is something with updates or notifications and PTR6 record resolving on Cloudron/Ubuntu side.
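For context, a reverse lookup such as `host -t PTR <ip6> 127.0.0.150` asks the resolver at 127.0.0.150 for the PTR record of a name under `ip6.arpa`, built from the reversed hex digits of the address. Here is a minimal Python sketch of that mapping. The address `2001:db8::1` is a documentation placeholder (an assumption, not the poster's address), and `host_command` is a hypothetical helper that only builds the argument list without running it:

```python
import ipaddress


def ptr_name(address: str) -> str:
    """Return the reverse-DNS name that a PTR lookup of `address` queries."""
    return ipaddress.ip_address(address).reverse_pointer


def host_command(address: str, resolver: str = "127.0.0.150") -> list[str]:
    """Build (but do not run) the host invocation used in the post."""
    return ["host", "-t", "PTR", address, resolver]


if __name__ == "__main__":
    # 2001:db8::1 is a documentation-only placeholder address.
    print(ptr_name("2001:db8::1"))
    print(" ".join(host_command("2001:db8::1")))
```

If the resolver cannot reach the name servers responsible for that `ip6.arpa` name in time, the lookup times out even though the record itself exists upstream.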

-

@robi said in Email sending broken after updating to 8.2.x (due to IPv6 issues):

How did you end up connecting Cloudron notifications to Uptime Kuma?

I’m using Uptime Kuma to monitor the Cloudron notifications using Cloudron API.

The API endpoint I monitor is:

`https://my.domain.tld/api/v1/notifications?acknowledged=false&page=1&per_page=2`

This returns a list of unacknowledged notifications. In Uptime Kuma, I use the “Keyword exists” monitor type and set the keyword to:

`"notifications":[]`

When this keyword is not found, it means there are new notifications waiting to be read. Uptime Kuma then considers the service as “down” and sends me an alert to a Telegram channel. Once I acknowledge the notification in the Cloudron UI, the keyword is present again, and Kuma marks the monitor as “up”.

To authenticate the API call, you need to add a custom header in Uptime Kuma:

`{ "authorization": "Bearer YOUR_CLOUDRON_API_TOKEN" }`

You can generate the API token from your Cloudron account (Account > API Tokens).

I saw this solution somewhere on the forum a while ago, but I don’t remember exactly where or who shared it.
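For anyone who wants to reproduce the check outside Uptime Kuma, here is a rough Python sketch of the same logic. The function names are made up for illustration, the response bodies in the demo are assumed shapes that merely contain or lack the keyword, and the request is only built, never sent:

```python
import urllib.request

# Keyword from the post: present only when nothing is waiting to be read.
KEYWORD = '"notifications":[]'


def build_request(base_url: str, token: str) -> urllib.request.Request:
    """Build the authenticated request for unacknowledged notifications."""
    url = f"{base_url}/api/v1/notifications?acknowledged=false&page=1&per_page=2"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def monitor_state(body: str) -> str:
    """Mimic a 'Keyword exists' monitor: up when the keyword is in the body."""
    return "up" if KEYWORD in body else "down"


if __name__ == "__main__":
    # Assumed example bodies, not captured from a real Cloudron instance.
    print(monitor_state('{"notifications":[]}'))
    print(monitor_state('{"notifications":[{"id":"1"}]}'))
```

Because this is a plain substring match, it depends on the API returning compact JSON with no whitespace between `:` and `[`, which is what the keyword in the post implies.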

-

Hey again @nebulon & @joseph ,

Just wanted to report that I had the same issue again today.

There was another automatic app update on my Cloudron instance at 9 PM CET. I checked shortly after, and PTR6 resolution was still working fine on Cloudron’s side around 10 PM. So initially everything looked okay.

But now, at 11 PM CET, Cloudron is once again returning a null value for the PTR6 record. Just like before, this is only on Cloudron’s side — when I check with MXToolBox, the PTR6 record still resolves correctly and matches what’s configured on my VPS provider's end.

This confirms it’s not a DNS issue upstream, but something on the Cloudron/Ubuntu side that breaks PTR6 resolution after a while, possibly related to app updates or maybe even internal DNS caching?

Really seems like the exact same pattern as before:

- PTR6 works right after reboot of the server

- After some time (or update?), Cloudron stops resolving it and shows null

- External tools like MXToolBox still show it correctly

- `host -t PTR <ip6>` times out locally when PTR6 doesn't resolve correctly

As mentioned in my previous post, I'm using Uptime Kuma to forward Cloudron notifications to Telegram, and again, the Cloudron PTR6 resolution broke some time after the notification of the app update. Not saying they’re directly connected, but the timing is always suspiciously close.

-

Just wanted to provide an update after some deeper investigation on my side.

I tried running the following command while everything was still marked as green in Cloudron:

`host -t PTR <ipv6> 127.0.0.150`

Even though Cloudron still showed a valid PTR6 at that moment, the command returned a timeout. That might indicate that the issue does not start with Cloudron itself, but with Unbound no longer resolving correctly via 127.0.0.150.

To address this, I modified Unbound’s configuration to use Cloudflare as the upstream DNS resolvers, instead of relying on the resolvers provided via DHCP by my VPS provider. Here's what I did:

- Created a new file "forward.conf" at this path:

  `/etc/unbound/unbound.conf.d/forward.conf`

- Edited this file using nano to add the following:

      forward-zone:
          name: "."
          forward-addr: 1.1.1.1
          forward-addr: 1.0.0.1
          forward-addr: 2606:4700:4700::1111
          forward-addr: 2606:4700:4700::1001

- Validated the configuration with the following command:

  `sudo unbound-checkconf`

- Restarted Unbound:

  `sudo systemctl restart unbound`

After this change, PTR6 resolution via

`host -t PTR <ipv6> 127.0.0.150`

works instantly and consistently. (I just had to wait about 5 minutes, and now it resolves instantly and consistently.)

I will continue monitoring over the next few days to confirm whether the issue comes back or not.

If it doesn't come back, it means there was an issue between Unbound and my VPS provider's resolvers (DNS) received through DHCP...

EDIT: Unfortunately this workaround comes with an issue... Spamhaus now considers my IP blacklisted because Unbound is forwarding to an open (public) resolver... => https://www.spamhaus.com/resource-center/successfully-accessing-spamhauss-free-block-lists-using-a-public-dns/

I thought I had a good idea by using another resolver for Unbound... But I didn't know the implications it would have for the IP blacklists of those "DNSBL" services...
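Some background on why forwarding to a public resolver can have this effect, as far as I understand Spamhaus's public documentation. A DNSBL check is itself a DNS query: the IPv4 octets are reversed and prepended to the list's zone. Spamhaus answers queries that arrive via large public resolvers with an error code instead of a real result. A mail server that treats any `127.x` answer as "listed" will then see every IP as blacklisted. The Python sketch below is illustrative only. `192.0.2.1` is a documentation placeholder, the function names are made up, and the return-code table is an assumption that should be checked against Spamhaus's current documentation:

```python
import ipaddress

# Error return codes as I understand Spamhaus's documentation (assumption:
# verify against their current docs before relying on these values).
SPAMHAUS_ERROR_CODES = {
    "127.255.255.252": "typing error in the DNSBL name",
    "127.255.255.254": "query came via a public/open resolver",
    "127.255.255.255": "excessive number of queries",
}


def dnsbl_query_name(ipv4: str, zone: str = "zen.spamhaus.org") -> str:
    """Build the DNS name looked up to check `ipv4` against a DNSBL zone."""
    octets = str(ipaddress.IPv4Address(ipv4)).split(".")
    return ".".join(reversed(octets)) + "." + zone


def interpret(answer: str) -> str:
    """Separate error return codes from genuine listings."""
    if answer in SPAMHAUS_ERROR_CODES:
        return "error: " + SPAMHAUS_ERROR_CODES[answer]
    return "listed"


if __name__ == "__main__":
    print(dnsbl_query_name("192.0.2.1"))
    print(interpret("127.255.255.254"))
```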

-

I was wrong... Each time I SSH into my server and run this for the first time:

`host -t PTR <ipv6> 127.0.0.150`

it times out.

And the second time it works perfectly and instantly...

I've rolled back Unbound to the default Cloudron configuration so that I am no longer blacklisted by https://www.spamhaus.org/ .

And

`host -t PTR <ipv6> 127.0.0.150`

works correctly... I just have to run it twice: the first time it times out, the second time it resolves instantly.

It means I am still having the exact same issue that I cannot explain, with my PTR6 value showing "null" in Cloudron only after an app update or after approximately 24-48 hours...

Any ideas @nebulon & @joseph ?

I really don't know where to look/search ...

-

@Gengar Which Ubuntu version are you on? Unbound behavior varies slightly between Ubuntu 22.04 and 24.04.

Can you please try this:

- Edit `/home/yellowtent/box/src/mail.js`. You will find a line at around line 86 like `const DNS_OPTIONS = { timeout: 10000 };`.

- Change it to `const DNS_OPTIONS = { timeout: 30000, tries: 4 };`.

- `systemctl restart box`
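The intent of a larger `timeout` and more `tries` is to give a slow first lookup more chances before the check reports a null PTR. A rough Python sketch of that retry idea follows. It is not Cloudron's code. `lookup_with_retries` and `make_flaky_lookup` are hypothetical names, and the fake lookup merely imitates the "first attempt times out, second succeeds" behaviour described earlier in the thread:

```python
from typing import Callable


def lookup_with_retries(lookup: Callable[[], str], tries: int = 4) -> tuple[str, int]:
    """Call `lookup` up to `tries` times; return (result, attempts_used)."""
    last_error = None
    for attempt in range(1, tries + 1):
        try:
            return lookup(), attempt
        except TimeoutError as error:
            last_error = error  # remember the failure and try again
    raise TimeoutError(f"lookup failed after {tries} tries") from last_error


def make_flaky_lookup(failures: int, answer: str) -> Callable[[], str]:
    """Return a fake lookup that times out `failures` times, then answers."""
    remaining = failures

    def flaky_lookup() -> str:
        nonlocal remaining
        if remaining > 0:
            remaining -= 1
            raise TimeoutError("simulated resolver timeout")
        return answer

    return flaky_lookup


if __name__ == "__main__":
    # One simulated timeout, then success on the second attempt.
    print(lookup_with_retries(make_flaky_lookup(1, "my.domain.tld.")))
```

With a single try, the same fake lookup would surface the timeout directly, which is roughly what a null PTR6 in the status check would correspond to under this guess.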

-

@girish Thank you for your help.

I am using Ubuntu 24.04.

I've made the edit you suggested.

If I understand correctly, the failure could simply be caused by a lookup timing out after 10 seconds with no retry.

Now that we changed it to 4 tries and 30 seconds, if it was a timeout issue, this should solve it by giving the lookup more chances when it's a bit slow.

Is this setting overridden by each Cloudron update?

I will monitor and let you know if it solved my issue or not.

-

@Gengar Yeah, I'm just guessing here that maybe the initial resolution is taking more than 10s. There is a default retry of 2 times. I don't know if increasing it helps. I have been looking into the Unbound docs for a way to increase the timeout in general but haven't found a good config variable yet.

-

@girish Yeah, makes sense. I will monitor and update this post with the final result. Thanks again for your help.

Would I have to set this after each Cloudron update? Or is this setting never overridden?

-

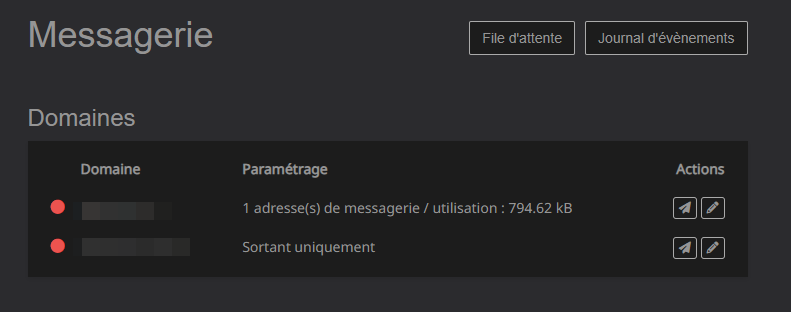

@girish Unfortunately it didn't work...

The PTR6 is again viewed as null by Cloudron (and by Cloudron only, MxToolBox works fine).

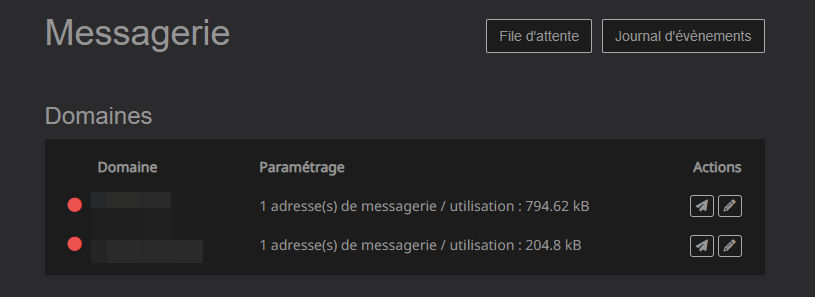

The pattern I observe is that each time I have some app updates (or some notifications ?) then after a few minutes / hours (after the update / notification) it will show the PTR6 value as if it was null.

see below :

Yesterday I did some updates, but I also restarted the server, so I didn't have the issue, because my workaround is to restart the server.

Any ideas @girish to troubleshoot further ?

Edit : added one screenshot

-

I can't really understand why each first try of :

`host -t PTR <ipv6> 127.0.0.150`

always fails instantly, and each second try works instantly.

The binding seems correct; it's listening:

I also tried to generate a trace of a dig of my IPv6 address:

`dig -x <my_ipv6> @127.0.0.150 +trace`

Here is the result:

    dig -x <my_ipv6> @127.0.0.150 +trace

    ; <<>> DiG 9.18.30-0ubuntu0.24.04.2-Ubuntu <<>> -x <my_ipv6> @127.0.0.150 +trace
    ;; global options: +cmd
    . 300 IN NS h.root-servers.net.
    . 300 IN NS i.root-servers.net.
    . 300 IN NS j.root-servers.net.
    . 300 IN NS k.root-servers.net.
    . 300 IN NS l.root-servers.net.
    . 300 IN NS m.root-servers.net.
    . 300 IN NS a.root-servers.net.
    . 300 IN NS b.root-servers.net.
    . 300 IN NS c.root-servers.net.
    . 300 IN NS d.root-servers.net.
    . 300 IN NS e.root-servers.net.
    . 300 IN NS f.root-servers.net.
    . 300 IN NS g.root-servers.net.
    . 300 IN RRSIG NS 8 0 518400 20250409050000 20250327040000 26470 . PVIijRDCpvp/usENq3aRJ+1WJb76GXi7mM85QySLrS6ixxDl5QbQo1Di Sl9wPYETpkYfZrRW4jDhOJyVAHtjit1m9ZJ2MAu9Rhcq72O7J/unHyjL K8vpXhwRQOwggasqVvFiqBDBlOh+TvKmxk++50UrFnokaFMbbJ45+H3t TAZ5CThu0VQwkNDFIGXdcupMGYO/GabVtEVjC22m12mZjMD8udjHbO1D msVclMSGFiGhi4LAjgCBDpx9sld7BeEkv/lIlZs8S/9GJ09a/+5sqe+d 2byJnEcdGUYE1kjHGA/mE6I2lncLKeyt1awD8CPhh3OGkDaiPCzDskwH pUs0aQ==
    ;; Received 525 bytes from 127.0.0.150#53(127.0.0.150) in 74 ms

    ;; communications error to 2001:500:2f::f#53: timed out
    ;; communications error to 2001:500:2f::f#53: timed out
    ;; communications error to 2001:500:2f::f#53: timed out
    ip6.arpa. 172800 IN NS f.ip6-servers.arpa.
    ip6.arpa. 172800 IN NS c.ip6-servers.arpa.
    ip6.arpa. 172800 IN NS a.ip6-servers.arpa.
    ip6.arpa. 172800 IN NS b.ip6-servers.arpa.
    ip6.arpa. 172800 IN NS d.ip6-servers.arpa.
    ip6.arpa. 172800 IN NS e.ip6-servers.arpa.
    ip6.arpa. 86400 IN DS 45094 8 2 E6B54E0A20CE1EDBFCB6879C02F5782059CECB043A31D804A04AFA51 AF01D5FB
    ip6.arpa. 86400 IN DS 13880 8 2 068554EFCB5861F42AF93EF8E79C442A86C16FC5652E6B6D2419ED52 7F344D17
    ip6.arpa. 86400 IN DS 64060 8 2 8A11501086330132BE2C23F22DEDF0634AD5FF668B4AA1988E172C6A 2A4E5F7B
    ip6.arpa. 86400 IN RRSIG DS 8 2 86400 20250409060000 20250327050000 41220 arpa. BrH6HJZNlu//lKOfi1e71GXexN7Q9Xxup1XbXiXQQ1YaxU82FszIRDtg 7OJQ8NmOuIiGhLsHAbsB/aBdIsh+8QeCYYXw7NvnT7sIHDAsXdNEs1qv cxh/4j7BIocLqPf4BMDW9ng9JuppGkg3fXrA7nOiPZMZxJ0UrsWzCtKR bDc2tgrS6Q6nwuo+1c/75C51TkTXqC5iq+mA3ouS2H/UKmbEnOOMt1y+ GaY7R2zU+vWRnJ0CQjqR8cn8wL2LkKvxEJ//wWymu4onhggPrPOiiyyK 77No8mh9CJlreLN9eIa+2qpDdfDZZvUpFoh2uJbDw0nVZgmhvO6faUdw ocrRAQ==
    ;; Received 949 bytes from 192.112.36.4#53(g.root-servers.net) in 38 ms

    6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns4.apnic.net.
    6.1.1.0.0.2.ip6.arpa. 86400 IN NS pri.authdns.ripe.net.
    6.1.1.0.0.2.ip6.arpa. 86400 IN NS rirns.arin.net.
    6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns3.afrinic.net.
    6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns3.lacnic.net.
    6.1.1.0.0.2.ip6.arpa. 86400 IN DS 3320 13 2 36869F9062FA3449B6362A3C618B7F17949E8729D355510E224789ED 165F0AD9
    6.1.1.0.0.2.ip6.arpa. 86400 IN RRSIG DS 8 8 86400 20250417050647 20250327071005 29628 ip6.arpa. oqnemTiOsFj0CxOy5DjTdN8ml4bE47lmKONe+4NMdVS+gNclSwaDWycN UDAj+wFoI2dbM20Iy8jZvcgim6icQ02M77a1p5fK9SSozGYONEjXE7De 4w2jnNBOXzX+JOur97+Vf1YyC9e1LfEp/cbhbTtRxf3/7fgKke9aj4Co LXg=
    ;; Received 483 bytes from 193.0.9.2#53(f.ip6-servers.arpa) in 16 ms

    ;; communications error to 2001:67c:e0::5#53: timed out
    ;; communications error to 2620:38:2000::53#53: timed out
    1.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns1.infomaniak.ch.
    1.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns2.infomaniak.ch.
    1.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. 3600 IN NSEC 2.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. NS RRSIG NSEC
    1.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. 3600 IN RRSIG NSEC 13 18 3600 20250409180748 20250326163748 58998 6.1.1.0.0.2.ip6.arpa. 5jdTVibPvZSZ5qdnaTmefipFkNuRMvODtFmpW/nOELP8QTKQzo9mDHxg Zk6YdI2BZiS5AQR59bLAdJTlklvl2w==
    ;; Received 396 bytes from 199.253.249.53#53(rirns.arin.net) in 90 ms

    5.REVERSED_OF_my_IPV6.2.ip6.arpa. 3600 IN PTR my.DOMAIN.XYZ.
    1.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns1.infomaniak.ch.
    1.0.1.0.3.1.0.0.0.0.6.1.1.0.0.2.ip6.arpa. 86400 IN NS ns2.infomaniak.ch.
    ;; Received 247 bytes from 84.16.67.66#53(ns2.infomaniak.ch) in 1 ms
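One detail in this trace may be worth checking: every `communications error ... timed out` line points at an IPv6 address, while every successful `Received` line comes from an IPv4 server. Here is a small Python sketch for pulling those lines out of saved `dig +trace` output and counting them by address family. The function name and the trimmed sample text are illustrative, and the regular expression assumes the message format shown in the trace:

```python
import ipaddress
import re
from collections import Counter

# Matches lines such as ";; communications error to 2001:db8::1#53: timed out".
TIMEOUT_LINE = re.compile(r";; communications error to (\S+)#\d+: timed out")


def timed_out_families(trace_output: str) -> Counter:
    """Count timed-out servers in dig output by IP version (4 or 6)."""
    families = Counter()
    for match in TIMEOUT_LINE.finditer(trace_output):
        families[ipaddress.ip_address(match.group(1)).version] += 1
    return families


# Trimmed excerpt of the trace from the post.
SAMPLE = """\
;; communications error to 2001:500:2f::f#53: timed out
;; Received 949 bytes from 192.112.36.4#53(g.root-servers.net) in 38 ms
;; communications error to 2001:67c:e0::5#53: timed out
;; communications error to 2620:38:2000::53#53: timed out
"""

if __name__ == "__main__":
    print(timed_out_families(SAMPLE))
```

If the timeouts keep landing only on IPv6 name servers, outbound IPv6 DNS traffic from the host would be a reasonable thing to test next. This is a hint rather than a diagnosis.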

-

After some digging, I understood that Cloudron uses Haraka in a Docker container as the SMTP mail server.

So I guess the whole mail server stack is running in a docker container.

Based on those facts, I checked whether IPv6 was enabled in Docker.

I saw that Docker is configured to manage IPv6 firewall rules (ip6tables), using `ps aux | grep dockerd` => `--storage-driver=overlay2 --experimental --ip6tables --userland-proxy=false`, but that containers aren't using IPv6:

`sudo docker network inspect bridge | grep -A 5 IPv6`

Result:

    "EnableIPv6": false,
    "IPAM": {
        "Driver": "default",
        "Options": null,
        "Config": [
            {

@girish, what if I create a custom config file "daemon.json" at /etc/docker/daemon.json and add this config to enable IPv6 inside containers:

`{ "ipv6": true, "fixed-cidr-v6": "fd00:dead:beef::/64" }`

Do you think it could solve the issue? Because I guess reverse DNS checks likely happen inside this mail container?

And maybe each app update resets the Docker network bridge? And then the mail container loses IPv6 connectivity and its ability to do the reverse DNS check for IPv6 correctly?

I really don't know, trying to figure things out here.
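Here is a sketch of the same check in Python. It reads the JSON that `docker network inspect bridge` prints (an array with one object per network, including the `EnableIPv6` field shown above) and sanity-checks the proposed `fixed-cidr-v6` value. The function names are made up and the sample JSON is trimmed and illustrative. Whether enabling IPv6 here is appropriate on a Cloudron host is exactly the open question in this post:

```python
import ipaddress
import json

ULA_RANGE = ipaddress.ip_network("fc00::/7")  # unique local addresses (RFC 4193)


def bridge_ipv6_enabled(inspect_output: str) -> bool:
    """Read EnableIPv6 from the first network in `docker network inspect` JSON."""
    networks = json.loads(inspect_output)
    return bool(networks[0].get("EnableIPv6", False))


def is_ula_subnet(cidr: str) -> bool:
    """Check that `cidr` is an IPv6 network inside the unique-local range."""
    network = ipaddress.ip_network(cidr)
    return network.version == 6 and network.subnet_of(ULA_RANGE)


if __name__ == "__main__":
    # Trimmed, illustrative inspect output matching the result in the post.
    sample = '[{"Name": "bridge", "EnableIPv6": false}]'
    print(bridge_ipv6_enabled(sample))
    print(is_ula_subnet("fd00:dead:beef::/64"))
```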

-

@Gengar said in Email sending broken after updating to 8.2.x (due to IPv6 issues):

Do you think it could solve the issue ? Because I guess reverse DNS checks likely happen inside this mail container ?

if that was the issue then wouldn't we all be having the strange issues you're having? Speaking personally my PTR6 status has been fine and green ever since I initially set it.

-

@jdaviescoates yeah i thought about that too and I guess you are right…

But I really have no other leads rn …