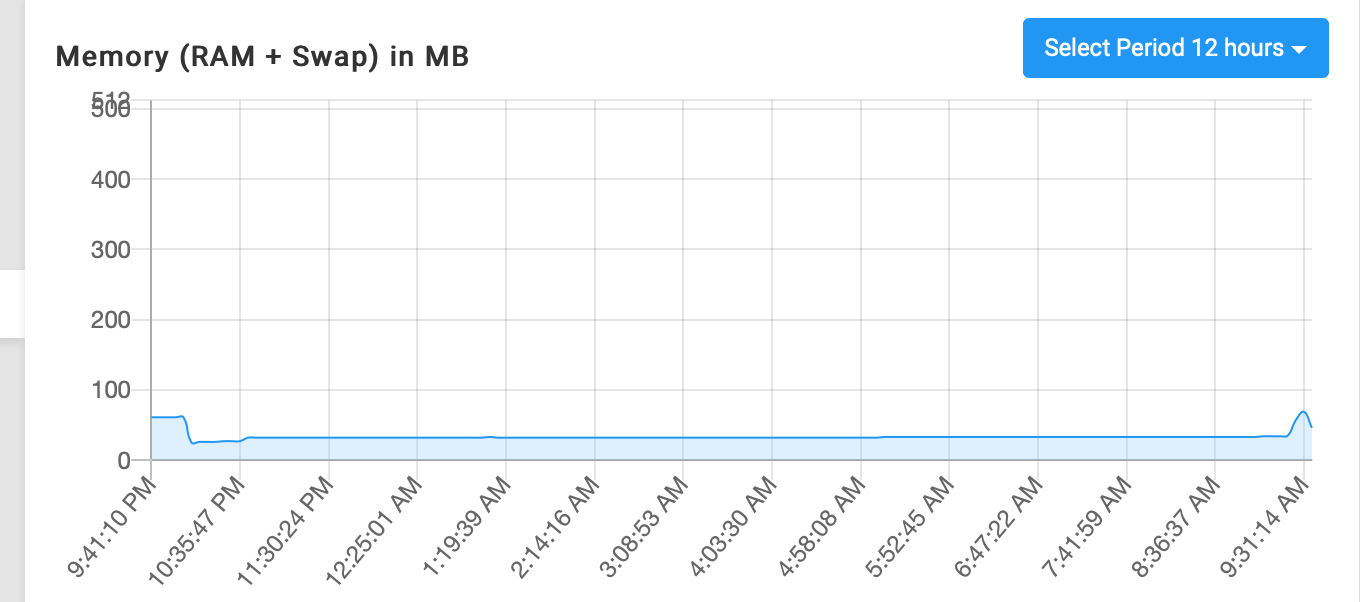

App restarted due to memory limit reached, but graph shows nothing close to memory limit

-

How is the server doing overall, memory-wise? Maybe the system itself does not have enough memory left for the app to even reach its set limit?

Also, are you aware of any large sync task into Radicale that might spike its memory usage so briefly that it never registers on the memory graph?

@nebulon & @girish - Thank you for the questions.

-

I'm unaware of any large sync task going on, no. The logs don't indicate anything. I'm the only one using the app, and I haven't been making any large changes (e.g. I haven't removed an account and recreated it on my computer, which would trigger a full resync).

-

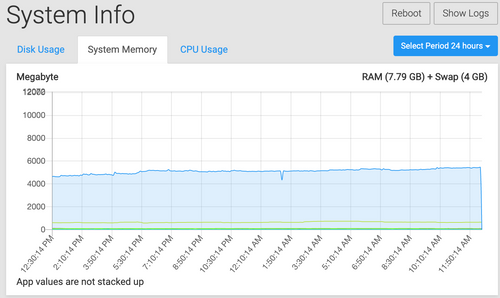

System memory seems to be more than capable right now, and has been for a long time. Here's the 24 hour review from Cloudron:

So I guess what you're saying is that since the graphs only collect snapshots every 10 minutes, if something suddenly raises the memory usage within a couple of minutes until the app crashes, that won't be recorded on the graphs at all, right? That's unfortunate if true. Is there perhaps a way to improve this so that a data point is forcibly collected just before a container is killed (e.g. as part of the Docker kill process), so we can see what the memory usage actually was?

What I did yesterday, after seeing the issue happen so frequently, was restore from an earlier morning backup taken before the out-of-memory restarts began. The app was killed once more after that, but not since. Yesterday it was happening roughly every 45-90 minutes, and according to my event log the last restart was 14 hours ago. That's strange, but whatever was going on seems to have settled for now.
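To illustrate the sampling gap described above, here is a minimal Python sketch. It is a simulation only, not how Cloudron actually collects its graphs. The 10-minute interval comes from this thread and the 50 MB baseline roughly matches what the graph shows for this app; the 256 MB limit, the spike timing, and the function names are made-up assumptions for illustration.

```python
def build_trace(duration_s, baseline_mb, spike_start_s, spike_len_s, limit_mb):
    """Per-second memory trace: flat baseline with one linear ramp up to the limit.

    After the ramp reaches the limit the container is assumed to be killed
    and restarted, so the trace drops straight back to the baseline.
    """
    trace = [float(baseline_mb)] * duration_s
    for i in range(spike_len_s):
        t = spike_start_s + i
        if t >= duration_s:
            break
        trace[t] = baseline_mb + (limit_mb - baseline_mb) * (i + 1) / spike_len_s
    return trace


def sample_trace(trace, interval_s):
    """Take one (time, value) snapshot every interval_s seconds."""
    return [(t, trace[t]) for t in range(0, len(trace), interval_s)]


# Hypothetical scenario: one hour of data, a 2-minute spike starting at t=700s.
trace = build_trace(duration_s=3600, baseline_mb=50,
                    spike_start_s=700, spike_len_s=120, limit_mb=256)
samples = sample_trace(trace, interval_s=600)  # one snapshot every 10 minutes

true_peak = max(trace)
graphed_peak = max(value for _, value in samples)
print(f"true peak: {true_peak} MB, peak visible in graph: {graphed_peak} MB")
```

In this scenario the spike falls entirely between the snapshots at 600 s and 1200 s, so the graph stays flat at the baseline even though the limit was reached.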

-

I've also had OOM for some apps without anything registered in the graphs (I was initially surprised, then concluded the data was incomplete).

If it's a random spike in memory, I don't know how to debug it. Most of the time, I just raise the app's memory limit.

-

-

One of the reasons I posted this: it could be a MySQL config issue, with MySQL not utilising all of its parallel processing capabilities if my.cnf is left at the defaults: https://forum.cloudron.io/topic/3625/mysql-tuning-with-my-cnf-settings-optimisation

-

@marcusquinn This wouldn’t cause an OOM error for the app though, would it? Radicale in this case doesn’t even use MySQL.

-

This may be related to a kernel update from Canonical or some sort of instant resource exhaustion attack sweeping the net.

I've also had many idle WP sites get OOM killed for no apparent reason.

I am not liking the 50% swap per app, as it hides the actual amount of memory assigned, and things seem to get killed halfway, which overinflates resource settings.

I agree with @d19dotca that app memory logging should increase when upper thresholds are met, and maybe even throw a notification before the app is killed, so you have a timestamp near the beginning of whatever made memory jump.

I can also recommend a log monitoring service that can catch the root cause of things by design. Ping me if interested.

-

@d19dotca certainly a misconfiguration of any DB can allow for memory exhaustion and displacing other services.

-

@marcusquinn Since this is a shared DB environment, multiple apps would then be killed, not just some of those using the same DB.

-

The addons have their own memory limits and would in this case be restarted on their own, independently of the apps. The apps have to be able to handle DB reconnects. If you saw addon crashes and app crashes together, that could indicate an issue with the reconnection logic in the app, but I have not seen that so far.

-

@nebulon Agreed. Even though I have no idea why it's suddenly been acting up the last couple of days, what I've done is bump the memory limit up to 1 GB. That's far more than it needs, given the graph clearly shows it hovering around just 50 MB most of the time. But I had forgotten that setting 1 GB as the max memory limit doesn't mean 1 GB of memory is reserved for it on the system. Once I remembered that, I realized it's not a bad thing to set the memory limit higher than the app needs. Please correct me if I've misunderstood that part.

-

@d19dotca Yes, the limits are there to protect against the noisy neighbor problem which exists when many processes are competing for the same resources and ONE uses up more than their fair share.

Technically we could have all 30 Apps be set to 1+GB on a 16GB RAM system and it would work fine until one App behaved badly. Then the system would be in trouble as the OOM killer would select a potentially critical service to kill.

With limits, the system is happy, and the killing happens in containers instead.
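The arithmetic behind that example can be sketched in a few lines of Python. This is only an illustration: the 30 apps, 1 GB limits and 16 GB of RAM come from the post above, while the 50 MB typical usage per app is an assumption borrowed from the Radicale graph in this thread (real apps will vary), and the function name is made up.

```python
def overcommit_summary(limits_mb, typical_usage_mb, physical_ram_mb):
    """Compare the sum of per-app limits and typical usage with physical RAM.

    A limit is a cap, not a reservation, so the sum of limits may exceed
    physical RAM as long as actual usage stays well below it.
    """
    total_limits = sum(limits_mb)
    total_usage = sum(typical_usage_mb)
    return {
        "total_limits_mb": total_limits,
        "total_usage_mb": total_usage,
        "limit_to_ram_ratio": total_limits / physical_ram_mb,
        "limits_exceed_ram": total_limits > physical_ram_mb,
        "usage_fits_in_ram": total_usage <= physical_ram_mb,
    }


# 30 apps capped at 1 GB each on a 16 GB host (figures from the post above).
limits = [1024] * 30
# Assumption: every app idles around 50 MB, like Radicale in this thread.
usage = [50] * 30

summary = overcommit_summary(limits, usage, physical_ram_mb=16 * 1024)
for key, value in summary.items():
    print(f"{key}: {value}")
```

The limits add up to almost twice the physical RAM, yet typical usage fits comfortably. The limits only start to matter once one app misbehaves, at which point its own container is killed rather than an arbitrary process on the host.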