Object Storage or Block Storage for backups of growing 60+ GB?

-

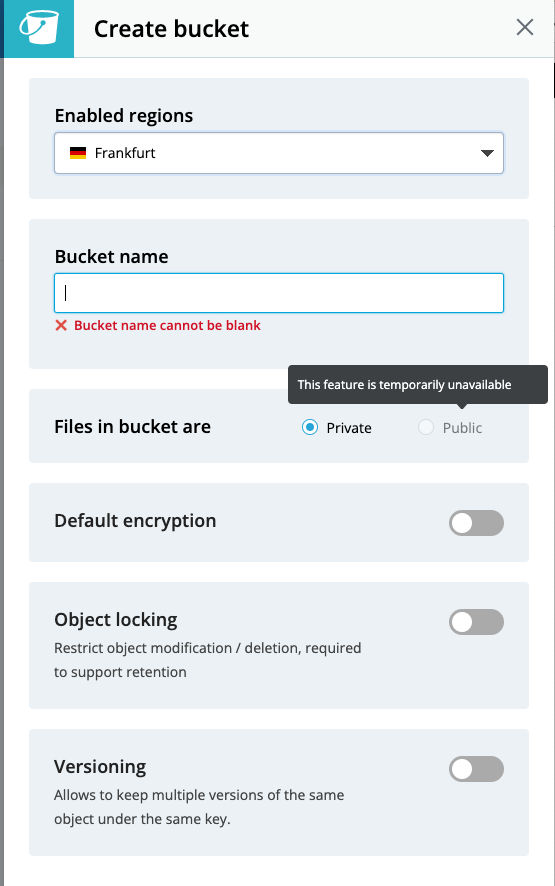

@scooke Funny story: I just migrated all my stuff to IDrive for my Mastodon instance, only to discover (whoops) that they don't appear to allow public buckets!?

@doodlemania2 I think that's a setting before you create the bucket.

-

@scooke Anyone know a contact? I'd like to stay with them, but no public buckets... lol. Maybe they can flip a bit for me?

I went with their main tech support email.

-

I’m noticing that the tgz is about 35-40 GB (that’s the size after compression; the uncompressed data is closer to 60+ GB), and the storage it consumes is nearly double that, because at least the first backup also has the snapshot directory, which duplicates the latest backup. My IDrive e2 bucket currently shows a total usage of 70 GB after one system backup.

I notice that with rsync the backup times are anywhere from about 20 to 70 minutes, whereas with tgz it’s closer to 120 minutes. That’s about the same performance as Wasabi, and even better than Backblaze in my tests so far.

The downside to rsync is that it takes forever to delete so many (tens of thousands of) individual files from S3 storage, so Cloudron cleaning up old backups, or even me purging older data manually, is a real time sink. That makes me want to use tarballs instead, but the upload rate seems much lower. I suppose it’s somewhat moot, because backups normally run in the middle of the night when time isn’t really a factor. However, it will cause about two hours of disruption during Cloudron version updates, since I would run a system backup before each update and the maintenance window would be that much longer.
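If cleanup is slow because each object is deleted with its own request, batching can help: the S3 `DeleteObjects` API accepts up to 1,000 keys per request, so tens of thousands of files become dozens of requests. A minimal sketch, assuming the provider implements `DeleteObjects` (not verified here for IDrive e2); it is not a description of how Cloudron's cleanup actually works. `delete_batch` is a hypothetical callback you would wire to your S3 client, for example boto3's `client.delete_objects(Bucket=..., Delete={"Objects": [{"Key": k} for k in batch]})`.

```python
from typing import Callable, Iterable, List

# S3 DeleteObjects accepts at most 1,000 keys per request.
MAX_KEYS_PER_REQUEST = 1000


def batch_delete(keys: Iterable[str],
                 delete_batch: Callable[[List[str]], None]) -> int:
    """Pass keys to delete_batch in chunks of up to 1,000.

    delete_batch is a hypothetical callback (e.g. a thin wrapper around an
    S3 client's multi-object delete). Returns the number of requests made.
    """
    batch: List[str] = []
    requests = 0
    for key in keys:
        batch.append(key)
        if len(batch) == MAX_KEYS_PER_REQUEST:
            delete_batch(batch)
            requests += 1
            batch = []  # start a fresh list so the callback may keep the old one
    if batch:  # flush the final partial batch
        delete_batch(batch)
        requests += 1
    return requests


if __name__ == "__main__":
    sent: List[List[str]] = []
    keys = (f"snapshot/file-{i}" for i in range(45_000))
    print(batch_delete(keys, sent.append))  # 45 requests instead of 45,000
```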

I’m curious, though: is there a way to improve tarball performance? Why is it so dang slow? I assume it’s about concurrency, but what prevents concurrent uploads when using a tarball? @staff, any suggestions here? Anything I can do, or any specific recommendations?

I should note that I’ve seen next to no change in performance whether I use a part size of 128 MB or 1 GB.
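One possible explanation, offered as a hypothesis rather than a description of Cloudron's uploader: if the multipart parts of a single tarball stream are sent one at a time, total time is roughly size divided by single-connection throughput, whatever the part size. Part size then only changes the number of parts (both 128 MB and 1 GB stay well under S3's 10,000-part limit); only keeping several parts in flight at once would change the total. The sketch below is an idealised model: `per_stream_bytes_per_s` and `concurrency` are hypothetical inputs, sizes are treated as binary units for round numbers, and provider-side limits and compression CPU cost are ignored.

```python
import math

MIB = 1024 ** 2
GIB = 1024 ** 3
S3_MAX_PARTS = 10_000  # maximum number of parts in one S3 multipart upload


def parts_needed(total_bytes: int, part_size: int) -> int:
    """Number of multipart parts for an upload of total_bytes."""
    parts = math.ceil(total_bytes / part_size)
    if parts > S3_MAX_PARTS:
        raise ValueError("part size too small: more than 10,000 parts")
    return parts


def estimated_upload_seconds(total_bytes: int, part_size: int,
                             per_stream_bytes_per_s: float,
                             concurrency: int = 1) -> float:
    """Idealised model: each connection moves per_stream_bytes_per_s and up to
    `concurrency` parts are in flight at once (capped by the part count)."""
    streams = min(concurrency, parts_needed(total_bytes, part_size))
    return total_bytes / (per_stream_bytes_per_s * streams)


if __name__ == "__main__":
    size = 40 * GIB  # roughly the tarball size from the post above
    print(parts_needed(size, 128 * MIB), parts_needed(size, GIB))  # 320 40
    rate = 8 * MIB  # hypothetical single-connection throughput
    for part in (128 * MIB, GIB):
        # One part at a time: the same total time regardless of part size.
        print(part // MIB, round(estimated_upload_seconds(size, part, rate) / 60))
    # Four parts in flight: about a quarter of the time.
    print(round(estimated_upload_seconds(size, 128 * MIB, rate, concurrency=4) / 60))
```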

-

@d19dotca said in Object Storage or Block Storage for backups of growing 60+ GB?:

I’m curious though… is there a way to improve the tarball performance? Why is it so dang slow? I assume concurrency but what prevents concurrency from taking place when using tarball? @staff, any suggestions here? Anything I can do or any specific recommendations?

One idea might be to measure uploading the same data with other tools to see how big the performance difference really is. While the tgz uploader is slow, it's not substantially slower than native tools (from what I have tested). Try streaming a `tar cvf -` of the app's data directory to the backup storage and measure how long it takes.
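To split the measurement in two, one could also time compression alone, with no network involved, and compare that with the upload timing. A minimal sketch using Python's standard `tarfile` module in streaming gzip mode (`"w|gz"`) writing into a sink that only counts bytes. It assumes Python 3.9+, it is not Cloudron's code path, and its gzip settings may differ from Cloudron's, so treat the result as a rough indicator of how much of the time is CPU rather than upload.

```python
import io
import os
import sys
import tarfile
import time


class CountingSink(io.RawIOBase):
    """Write-only stream that discards data and counts bytes.

    It stands in for the network upload so only tar + gzip time is measured.
    """

    def __init__(self) -> None:
        super().__init__()
        self.bytes_written = 0

    def writable(self) -> bool:
        return True

    def write(self, b) -> int:
        n = len(b)
        self.bytes_written += n
        return n


def time_tgz(path: str) -> tuple[int, float]:
    """Stream path through tar + gzip; return (compressed bytes, seconds)."""
    sink = CountingSink()
    start = time.perf_counter()
    with tarfile.open(fileobj=sink, mode="w|gz") as tar:
        tar.add(path, arcname=os.path.basename(os.path.normpath(path)))
    return sink.bytes_written, time.perf_counter() - start


if __name__ == "__main__" and len(sys.argv) > 1:
    size, secs = time_tgz(sys.argv[1])
    print(f"{size / 1e9:.2f} GB compressed in {secs / 60:.1f} min")
```

Usage would be something like `python time_tgz.py /path/to/app/data`; if this alone takes most of the ~120 minutes, the bottleneck is compression rather than the storage provider.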

-

@robi I checked most regions (all non-US ones and 4 US ones), and the Public radio button stayed greyed out.

-

Interesting. I decided to test IDrive e2, which has a datacentre close to my VPS (it seems to be a recent S3 competitor, from mid-2022, that promises high speeds).

Backblaze is good, although I find it quite slow (mostly because my VPS is in a datacentre very far from Backblaze's California (us-west) location). Speed isn't critical since it's just backups, but it definitely still helps.

Backblaze's pricing for API calls scares me a little; it makes me think it'll be much pricier than I'm anticipating. I may just need to test it for a while to verify.

I see what you mean about Wasabi's weird 90-day storage policy, which means even deleted files are still billed for 90 days. My current estimate is adding up quickly, so despite being initially happy with Wasabi's performance, I think I may need to abandon that provider.
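To see why the estimate grows so fast, here is a back-of-the-envelope model assuming the 90-day minimum described above (every object is billed for at least 90 days, even if deleted sooner) and a full tarball uploaded every day. The 35 GB daily size and 7-day retention are illustrative assumptions, not figures from Wasabi, and no prices are included.

```python
MIN_BILLED_DAYS = 90  # minimum storage duration per object, as described above


def steady_state_gb(daily_new_gb: float, retention_days: int,
                    min_days: int = 0) -> float:
    """Volume held (or billed) once daily full backups reach steady state.

    Each day's backup counts for max(retention_days, min_days) days, so at any
    moment that many days' worth of backups are on the meter.
    """
    return daily_new_gb * max(retention_days, min_days)


if __name__ == "__main__":
    # Hypothetical: a new 35 GB tarball every day, each deleted after 7 days.
    print(steady_state_gb(35, 7))                   # GB actually stored
    print(steady_state_gb(35, 7, MIN_BILLED_DAYS))  # GB billed under a 90-day minimum
```

With these assumptions, about 245 GB is actually stored while about 3,150 GB is billed, which is why short retention does not help much under such a policy.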

Still experimenting. I'm currently in the middle of a large 60+ GB backup to IDrive e2 (using rsync instead of tarball for now), and I have to say I'm super impressed with the speeds. Their pricing is also quite low. We'll see if they end up being the one I use.

@d19dotca Thanks for sharing your R&D on this. I'm just trialing it, and the 1-year deals save a lot of money compared to any other S3 provider, including Wasabi.

I really like the UI/UX; it's much easier for anyone to work with than Wasabi, as you don't need to create policies in JSON like you do with others.

Definitely recommended from me for all home users, and for most SME needs too!

-

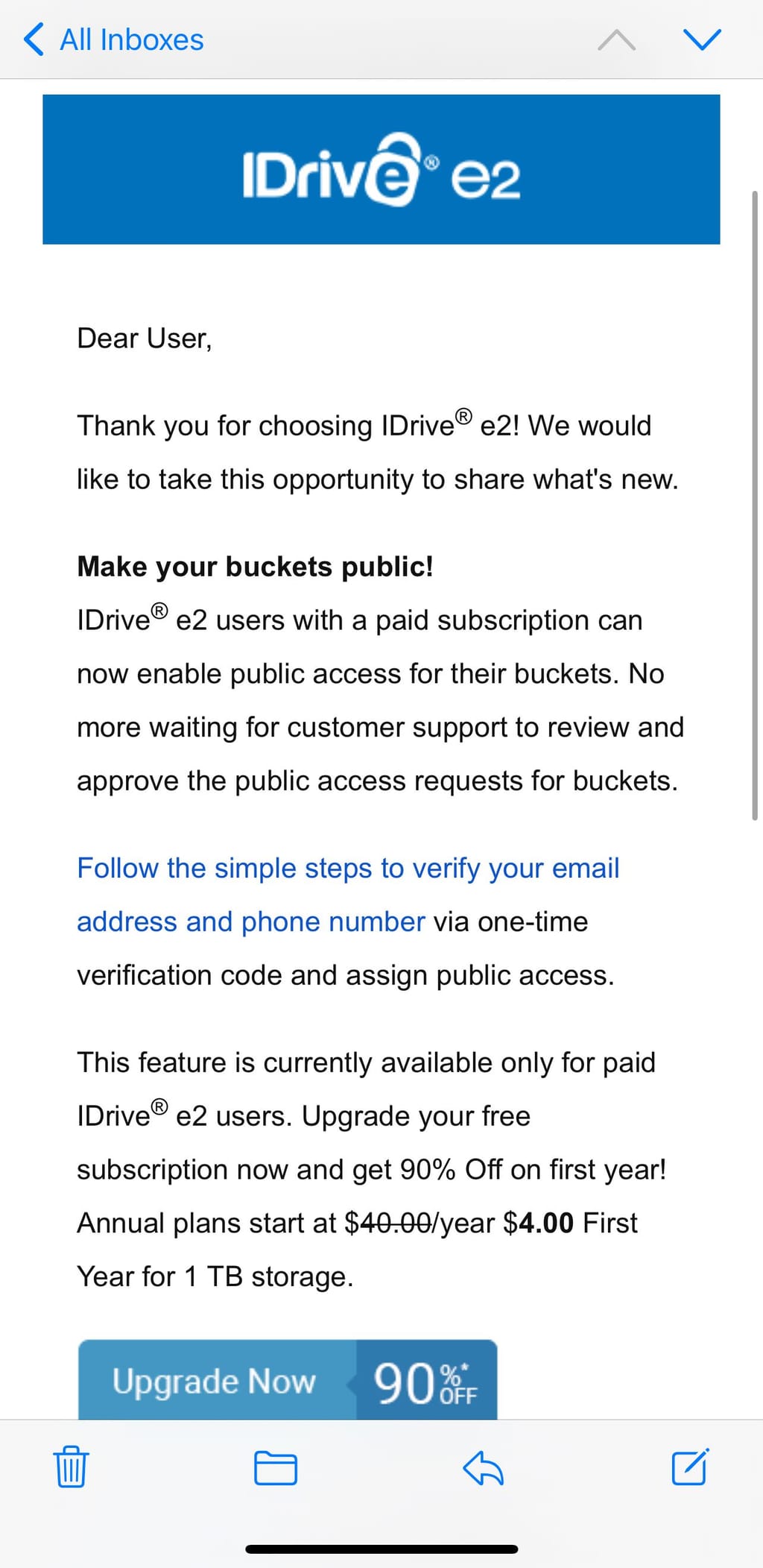

FYI, I got an email today from IDrive announcing public buckets. Mentioning it here since I know a few people were asking about that feature.

They’ve also made some serious performance tweaks. Before, a 30+ GB tarball upload took about 55-75 minutes; now it’s closer to 45-50 minutes for the same size (possibly an even larger file, in fact, since I’ve deployed a couple more apps and email keeps growing).
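As a rough conversion, treating the upload as exactly 30 GB (decimal, so 30,000 MB; the post says 30+, so these are lower bounds), the old and new times correspond to:

$$
\text{before: } \frac{30\,000\ \text{MB}}{75 \times 60\ \text{s}} \approx 6.7\ \text{MB/s}
\ \text{ to } \
\frac{30\,000\ \text{MB}}{55 \times 60\ \text{s}} \approx 9.1\ \text{MB/s}
$$

$$
\text{now: } \frac{30\,000\ \text{MB}}{50 \times 60\ \text{s}} = 10\ \text{MB/s}
\ \text{ to } \
\frac{30\,000\ \text{MB}}{45 \times 60\ \text{s}} \approx 11.1\ \text{MB/s}
$$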

-

@d19dotca said in Object Storage or Block Storage for backups of growing 60+ GB?:

I noticed before I was seeing it take about 55-75 minutes for a 30+ GB upload in tarball, and now it’s closer to 45-50 minutes for the same

It may be related to lower traffic and usage over the end of year holidays.

-

@robi said in Object Storage or Block Storage for backups of growing 60+ GB?:

It may be related to lower traffic and usage over the end of year holidays.

Entirely possible, although I was discussing the general speed of things with them in a support case, and they said they had recently implemented a change to their service that should speed things up. In my experience it does seem improved.

-

@d19dotca I also noticed an increase in performance. One thing I did notice, though: my public buckets were part of the beta and included anonymous root "viewing" of sorts, which generated an XML file listing the contents of each public bucket. A quick email to support and they had me switch the bucket to private, then back to public, to correct it. Something to check if you or someone you love may be an early public-bucket adopter there!

-

@d19dotca We're noticing some significant slowdowns with IDrive backup uploads now (we have lots and lots of small files, and over 1 TB). Just wondering if you've experienced anything that might look like throttling?

-

@marcusquinn Oh oh... too good to be true?

-

@scooke Maybe, trying their support.

-

@marcusquinn

I don't know what tech is behind their stack, but if it's just HDDs with no NVMe for caching metadata or any kind of index, it's 100% going to be slow if they grow too much.

-

We're changing strategy to tarball, and that seems to complete in a few minutes. That might just have to be the trade-off for our apps that have lots and lots of small files: more S3 storage usage, but faster backups and restores, and each backup is self-contained rather than relying on files from others.
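A rough way to see why tarballs win for many small files: each object costs at least one request round trip, whereas one tarball uploaded as a multipart upload costs only a few hundred requests. The sketch below assumes a hypothetical 100 KB average file size, 50 ms per request and 20 parallel uploads; none of these are measured values from this thread, and bandwidth time is ignored entirely.

```python
import math

KB = 1000
TB = 1000 ** 4
GIB = 1024 ** 3


def request_overhead_seconds(num_requests: int, latency_s: float,
                             concurrency: int = 1) -> float:
    """Time spent only on per-request round trips (bandwidth ignored)."""
    return math.ceil(num_requests / concurrency) * latency_s


def tarball_requests(total_bytes: int, part_size: int) -> int:
    """CreateMultipartUpload + one UploadPart per part + CompleteMultipartUpload."""
    return math.ceil(total_bytes / part_size) + 2


if __name__ == "__main__":
    files = TB // (100 * KB)  # hypothetical: 1 TB of ~100 KB files
    print(files)  # one PUT per file
    # Hours spent purely on round trips at 50 ms each, 20 in parallel.
    print(round(request_overhead_seconds(files, 0.05, 20) / 3600, 1))
    print(tarball_requests(TB, GIB))  # requests for the same bytes as 1 GiB parts
```

Under these assumptions, 10 million per-file PUTs spend roughly 7 hours on round trips alone, versus 934 requests for a single tarball.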