I'm spending a small fortune on Backblaze, it seems.

Is it possible to back up to a cheaper provider? E.g. https://www.pcloud.com/cloud-storage-pricing-plans.html?period=lifetime

What solutions / tips do you have to reduce backup costs?

-

@eddowding

Cloudron gives you plenty of protocols to choose from.

I am backing up to https://www.hetzner.com/storage/storage-box via SSHFS. Only paying €3.81 currently (for 1 TB), which is more than fair IMO.

If your VPS is not in Europe, you can also check out IDrive e2 (https://www.idrive.com/e2/pricing). It's an S3-compatible service.

-

@nichu42 said in Reducing backup costs / Backup to pCloud:

If your VPS is not in Europe, you can also check out IDrive e2 (https://www.idrive.com/e2/pricing). It's an S3 compatible service.

Hetzner also has US-based VPS and storage volumes.

I use Hetzner as my VPS provider and Backblaze B2 to back up data. As I migrate services to self-hosted options, I'm thinking of using Hetzner for both.

-

@nichu42 said in Reducing backup costs / Backup to pCloud:

If your VPS is not in Europe, you can also check out IDrive e2 (https://www.idrive.com/e2/pricing). It's an S3 compatible service.

Currently has insanely cheap 90% off prices for the first year, plus EU options. Not powered by renewables though.

I use Hetzner Storage Boxes like many others here. Hard to beat value (cheaper than iDrive e2 standard prices) and 100% renewable electricity too.

-

Also Hetzner Storage Box here, just super cheap and it just works like 95% of the time.

I use SSHFS with the Storage Box.

-

FWIW I asked pCloud:

Unfortunately, we do not support SSH but WebDAV. Here are the settings to access your pCloud account via WebDav protocol:

link: https://webdav.pcloud.com (US servers) or https://ewebdav.pcloud.com (EU servers)
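One way to sanity-check those WebDAV settings before pointing a backup at them is a `PROPFIND` request against the account root. The sketch below only builds that request with Python's standard library and does not send it. The function name, the region keys and the credentials are made up for illustration, and it assumes the server accepts plain username/password (HTTP Basic auth), which pCloud's reply does not confirm.

```python
import base64
import urllib.request

# WebDAV endpoints as quoted by pCloud support above.
PCLOUD_WEBDAV = {
    "us": "https://webdav.pcloud.com",
    "eu": "https://ewebdav.pcloud.com",
}


def build_propfind_request(region, username, password):
    """Build (but do not send) a WebDAV PROPFIND request for the account root."""
    url = PCLOUD_WEBDAV[region] + "/"
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        method="PROPFIND",
        headers={
            "Depth": "0",  # only ask about the root collection itself
            "Authorization": f"Basic {token}",  # assumption: Basic auth is accepted
        },
    )


# Hypothetical credentials; replace with your own.
request = build_propfind_request("eu", "me@example.com", "secret")
print(request.get_method(), request.full_url)

# To actually contact the server (207 Multi-Status is the usual WebDAV success code):
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(response.status)
```

If the real request succeeds, the same URL and credentials are what you would try in any WebDAV-capable backup tool.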

-

@eddowding I use Scaleway for Cloudron backups and a Hetzner Storage Box for other archives.

-

@nichu42 they seem to have sites in the EU too, which is nice. Thank you!

I'm confused about how to configure it, though, if you have any tips. I've been trying all sorts of combinations of IP / endpoint, access ID, secret, username, etc., to no avail so far.

-

@eddowding pretty sure @marcusquinn is using it for backups, so he should be able to help.

-

@eddowding said in Reducing backup costs / Backup to pCloud:

endpoints

Often it's just that you're missing an https:// or something.
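To illustrate the missing-scheme problem, here is a small sketch that tidies up an endpoint string before you paste it into a backup config. The function name and the `s3.example.com` host are placeholders, not anything IDrive or Cloudron provides; use the endpoint shown in your own provider's dashboard.

```python
from urllib.parse import urlsplit


def normalize_endpoint(raw):
    """Return an endpoint URL with an explicit scheme and no trailing slash."""
    value = raw.strip()
    if "://" not in value:
        value = "https://" + value  # the commonly forgotten part
    parts = urlsplit(value)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not a usable endpoint URL: {raw!r}")
    return value.rstrip("/")


# Placeholder host for illustration only.
print(normalize_endpoint("s3.example.com"))
```

Stray whitespace and trailing slashes are handled too, since both tend to sneak in when copying from a web page.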

-

@jdaviescoates I'm using IDrive e2 (S3 compatible) and tarball backups. Seems to be the best speed & cost ratio.

-

G girish moved this topic from Support on

-

@marcusquinn said in Reducing backup costs / Backup to pCloud:

@jdaviescoates I'm using IDrive e2 (S3 compatible) and tarball backups. Seems to be the best speed & cost ratio.

I know, that's why I tagged you, because my old chum @eddowding was looking for support in getting that set up

-

Regardless of the provider, being able to copy the backup to a WebDAV share would be very useful!

-

@eddowding Hi there, I am new to Cloudron but have it installed, and I want to use WebDAV to send my Cloudron backups to my pCloud account, as described in your post here. I have a pCloud account on the European servers with 3 TB available, so this should get me going well. I just need some help setting up WebDAV to pCloud as my backup destination. Can you please post a description of exactly how to do this? I have searched and found no more info on this, or on using WebDAV for this purpose in general.

Your help is appreciated!

-

@Mad_Mattho Check out iDrive.com e2 or Hetzner.com Storage Box, both well tested and liked by many here.

-

Enter Shadowdrive, an EU-hosted provider with 2 TB at €4.99 / month. Based on Nextcloud. Cheapest privacy-minded storage provider I've seen so far.

https://shadow.tech/en-DE/drive
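For a rough per-terabyte comparison, the two prices quoted in this thread can be put side by side. This is only a sketch using the figures as posted (€3.81 for 1 TB on a Hetzner Storage Box, €4.99 for 2 TB here); the function and variable names are made up, and current pricing may differ.

```python
def price_per_tb(monthly_price_eur, capacity_tb):
    """Monthly price in EUR divided by capacity in TB."""
    if capacity_tb <= 0:
        raise ValueError("capacity must be positive")
    return monthly_price_eur / capacity_tb


# Figures as quoted in this thread; check current pricing before relying on them.
plans = {
    "Hetzner Storage Box (1 TB)": (3.81, 1),
    "Shadowdrive (2 TB)": (4.99, 2),
}

per_tb = {name: price_per_tb(price, tb) for name, (price, tb) in plans.items()}

# Cheapest per TB first.
for name, cost in sorted(per_tb.items(), key=lambda item: item[1]):
    print(f"{name}: {cost:.3f} EUR per TB per month")
```

Per-TB price only tells part of the story: if your backups fit in under 1 TB, the smaller plan is still cheaper in absolute terms.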

"Hosted in Europe, your files are safe from prying eyes, even our own: we do not commercially exploit any data you choose to host, and it is encrypted both in transit and client-side.

Desktop and mobile clients help you keep your files synced automatically. You can also connect with third-party backup software to ensure that you recover what you need."

-

@Mad_Mattho and other pDrive customers: the Linux version of pDrive creates "a folder in your [user] directory and use(s) that as a mount point for a virtual drive" (https://www.reddit.com/r/pcloud/comments/13rkrh8/linux_cannot_write_into_pcloud_drive/). If you are on a Linux-based server, VM, etc., it sounds like this should work well. I don't know whether you would need to start over and install pDrive first, or whether you could just install it now, after installing Cloudron, without messing up Cloudron. If you give it a try, let the rest of us know how it went.

-

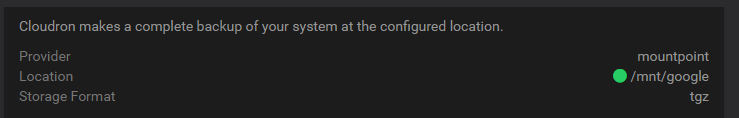

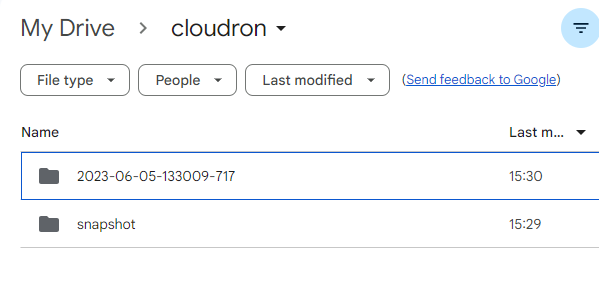

You could experiment with rclone.

Create a config for your desired remote, for example Google Drive.

Mount that via systemd and then point your backups to that location. For example:

    [Unit]
    Description=rclone Service Google Drive Mount
    Wants=network-online.target
    After=network-online.target

    [Service]
    Type=notify
    Environment=RCLONE_CONFIG=/root/.config/rclone/rclone.conf
    RestartSec=5
    ExecStart=/usr/bin/rclone mount google:cloudron /mnt/google \
    # This is for allowing users other than the user running rclone access to the mount
    --allow-other \
    # Google Drive is a polling remote so this value can be set very high and any changes are detected via polling.
    --dir-cache-time 9999h \
    # Log file location
    --log-file /root/.config/rclone/logs/rclone-google.log \
    # Set the log level
    --log-level INFO \
    # This is setting the file permission on the mount to user and group have the same access and other can read
    --umask 002 \
    # This sets up the remote control daemon so you can issue rc commands locally
    --rc \
    # This is the default port it runs on
    --rc-addr 127.0.0.1:5574 \
    # no-auth is used as no one else uses my server
    --rc-no-auth \
    # The local disk used for caching
    --cache-dir=/cache/google \
    # This is used for caching files to local disk for streaming
    --vfs-cache-mode full \
    # This limits the cache size to the value below
    --vfs-cache-max-size 50G \
    # Speed up the reading: Use fast (less accurate) fingerprints for change detection
    --vfs-fast-fingerprint \
    # Wait before uploading
    --vfs-write-back 1m \
    # This limits the age in the cache if the size is reached and it removes the oldest files first
    --vfs-cache-max-age 9999h \
    # Disable HTTP2
    #--disable-http2 \
    # Set the tpslimit
    --tpslimit 12 \
    # Set the tpslimit-burst
    --tpslimit-burst 0
    ExecStop=/bin/fusermount3 -uz /mnt/google
    ExecStartPost=/usr/bin/rclone rc vfs/refresh recursive=true --url 127.0.0.1:5574 _async=true
    Restart=on-failure
    User=root
    Group=root

    [Install]
    WantedBy=multi-user.target

    # https://github.com/animosity22/homescripts/blob/master/systemd/rclone-drive.service
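One thing to watch with this approach: if the mount is down, anything written to `/mnt/google` lands on the local disk instead. A small guard like the sketch below could run before a backup to catch that. The function names are made up for illustration, and how you hook it into your backup schedule is up to you.

```python
import os


def mount_is_ready(mount_point):
    """True if mount_point exists and is an active mount point."""
    return os.path.isdir(mount_point) and os.path.ismount(mount_point)


def require_mount(mount_point):
    """Raise RuntimeError unless mount_point is currently mounted."""
    if not mount_is_ready(mount_point):
        raise RuntimeError(f"{mount_point} is not mounted; refusing to continue")
    return mount_point


# /mnt/google is the mount path used in the unit above.
print("/mnt/google mounted:", mount_is_ready("/mnt/google"))
```

`os.path.ismount` only checks that something is mounted at that path, not that the remote is reachable, so treat it as a first line of defence rather than a full health check.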