Just got interested in this myself, seems like they use Docker Compose https://github.com/gramps-project/gramps-web/blob/main/docker-compose.yml

rosano

Posts

-

Gramps.js/Web -

What does it take to use Surfer as a “real” multidomain surfer?

@nebulon Agreed. I just use the tools I understand, so it tends to end up with this shape. For me static sites only require GET anyway. I copied headers in the previous version, maybe will add that later. Pull requests welcome if someone wants to improve it.

-

What does it take to use Surfer as a “real” multidomain surfer?

I just worked on a hack for this https://github.com/rosano/remit

It's a small separate app that maps multiple domains to folders under any public URL: so if it gets a request for `your-site-domain.com/path`, it will fetch `https://your-surfer-cloudron-domain.com/maybe_a_folder_with_some_websites/your-site-domain.com/path` and send it as a response. So you name folders with the domain and it figures out the rest.

-

Map domain to subfolder?

@nebulon Yes, and I also asked myself last year! https://forum.cloudron.io/topic/11395/multiple-static-sites-on-one-app/3

If I develop a solution, where do you recommend I share it?

-

Map domain to subfolder?

Is it possible to have different subdirectories in surfer mapped to their own domains? Like this:

- `example1.com` maps to `/public/alfa`
- `example2.com` maps to `/public/bravo`
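
To make the question concrete, here is a minimal sketch of two shapes such a mapping could take: an explicit lookup table (using the domains and folders from the example above) or a convention where the folder is simply named after the domain. All function names and the `/public` default root are hypothetical, and none of this is an existing Surfer feature.

```python
from typing import Optional

# Explicit table, copied from the example in the question.
DOMAIN_FOLDERS = {
    "example1.com": "/public/alfa",
    "example2.com": "/public/bravo",
}


def normalise_host(host: str) -> str:
    """Strip an optional port and lowercase the host (IPv6 literals not handled)."""
    return host.split(":", 1)[0].lower()


def folder_from_table(host: str) -> Optional[str]:
    """Look the domain up in the explicit table; None means 'not configured'."""
    return DOMAIN_FOLDERS.get(normalise_host(host))


def folder_from_convention(host: str, root: str = "/public") -> str:
    """Derive the folder from the domain itself, so no table is needed."""
    return f"{root.rstrip('/')}/{normalise_host(host)}"
```

The table is more flexible (folder names are free-form), while the convention needs no configuration beyond naming folders after domains.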

-

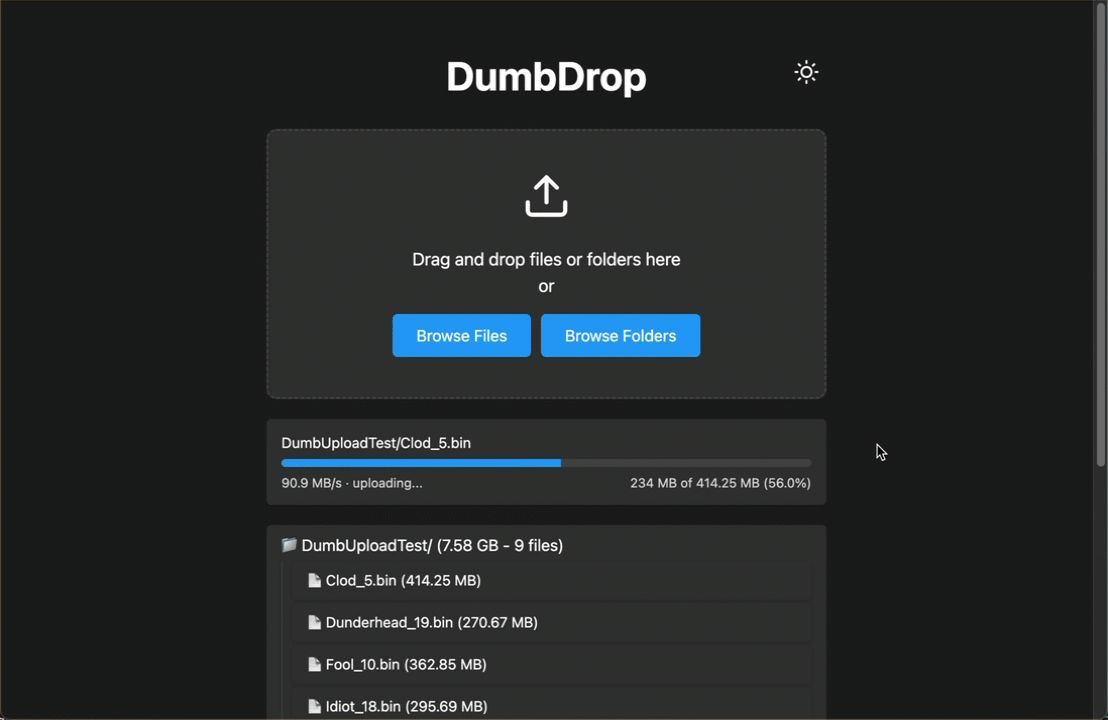

DumbDrop

- Title: DumbDrop on Cloudron - No accounts, no tracking, just drag, drop, and share.

- Main Page: https://dumbware.io/DumbDrop

- Git: https://github.com/DumbWareio/dumbdrop

- Licence: GNU GPL

- Docker: Yes

- Demo: https://dumbdrop.dumbware.io (I was able to guess the pin easily)

- Summary: end-to-end encrypted file-sharing app, maybe similar to wormhole (or filepizza but not p2p)

- Notes: they seem to make a nice suite of simple open-source software

- Alternative to / Libhunt link: e.g. https://alternativeto.net/software/dumbdrop/

- Screenshots:

-

remotestorage.io

Surprised to find this here! I've been developing apps for this since 2019. Yesterday, I finally made a Cloudron package for the Armadietto server https://github.com/rosano/armadietto-cloudron

-

database size

Deleting all executions still didn't move the database down from 500mb, but after running `VACUUM FULL execution_data;` it's now 13mb. I'll take it.

-

database size

@joseph it's the `execution_data` table but I'm not sure how to figure out where the bloat is. the LLM queries are giving me mostly table size but nothing more detailed.

-

database size

Is it normal for the database to be half a gigabyte?

I have deleted all my executions several times but it doesn't seem to change anything. Also just set `export DB_SQLITE_VACUUM_ON_STARTUP=true` in `/app/data/env.sh`, but after restarting it seems to take the same amount of space. When I run `SELECT pg_size_pretty(pg_database_size('XXXXX'))` it says

     pg_size_pretty
    ----------------
     594 MB
    (1 row)

Is there some data stuck in there that I can see and remove somehow?

-

Can't export credentials

@girish Works, thank you. I see you already updated the docs!

-

Can't export credentials

When I run the export command as per Cloudron docs:

    gosu cloudron /app/code/node_modules/.bin/n8n export:credentials --all

I get a bunch of warnings, and then it seems to not find any credentials:

    Permissions 0644 for n8n settings file /app/data/user/.n8n/config are too wide. This is ignored for now, but in the future n8n will attempt to change the permissions automatically. To automatically enforce correct permissions now set N8N_ENFORCE_SETTINGS_FILE_PERMISSIONS=true (recommended), or turn this check off set N8N_ENFORCE_SETTINGS_FILE_PERMISSIONS=false.
    User settings loaded from: /app/data/user/.n8n/config
    There is a deprecation related to your environment variables. Please take the recommended actions to update your configuration:
     - N8N_RUNNERS_ENABLED -> Running n8n without task runners is deprecated. Task runners will be turned on by default in a future version. Please set `N8N_RUNNERS_ENABLED=true` to enable task runners now and avoid potential issues in the future. Learn more: https://docs.n8n.io/hosting/configuration/task-runners/
    Error exporting credentials. See log messages for details.
    No credentials found with specified filters
    No credentials found with specified filters

Nothing appears in the logs.

Searching for "No credentials found with specified filters" brings up two topics from the n8n forum (first, second) but I'm not sure if there's something I can use there. Any advice?

I'm running version 1.85.4 / io.n8n.cloudronapp@3.76.0 and recently did a rapid series of upgrades from a 2023 version of n8n.

-

How to add this new anti bot sign-up configuration on Cloudron?

Ghost recently made a UI for this https://ghost.org/changelog/signup-spam-protection/

-

backup / snapshots retention

@jdaviescoates i didn't know about the lifecycle settings, tried it and it reduced total size by 90% after a few days

@joseph i think this was around the time i switched from aws to backblaze, but i understand it didn't transfer pre-existing backups, only starting fresh, although i could be wrong. will try deleting everything if needed, but so far the previous solution works for me

-

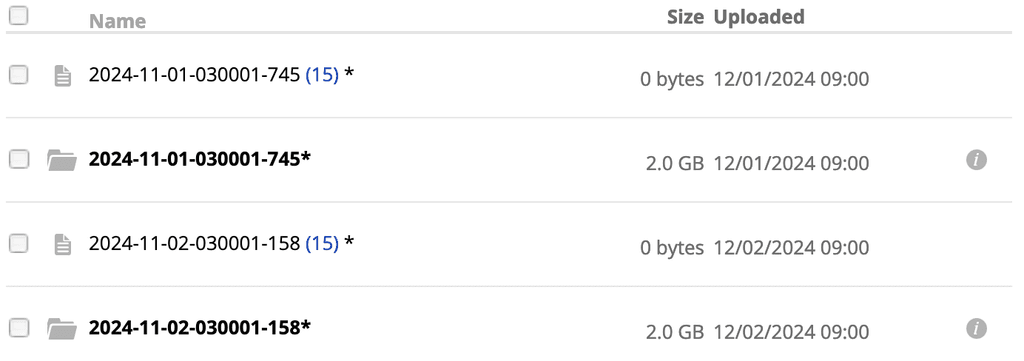

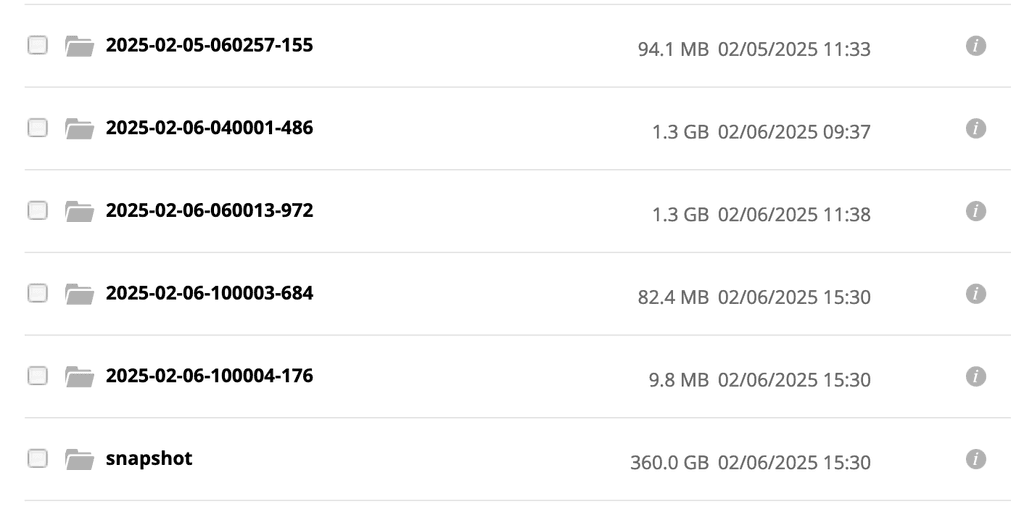

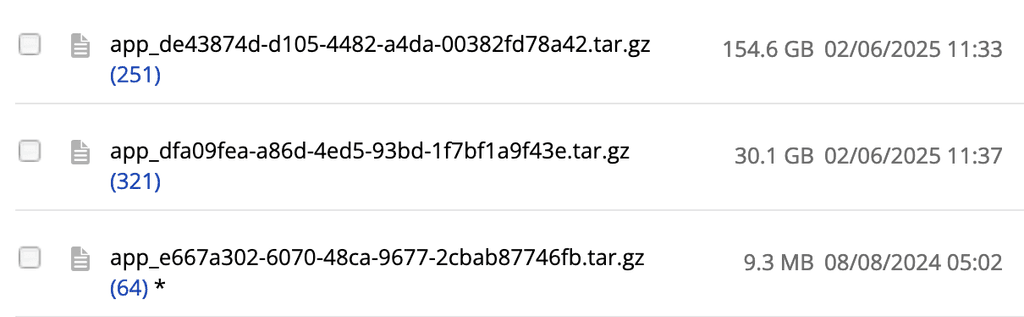

backup / snapshots retention

i gather that there may be some things left beyond the retention policy, but it seems like everything or many/most things are kept forever. some of the dates in `/snapshots` go back to june/july of last year, over 6 months, and i have maybe only ever made a special manual snapshot or said 'keep this' once or twice. i expect most of these should be deleted by now, do i have the wrong idea?

-

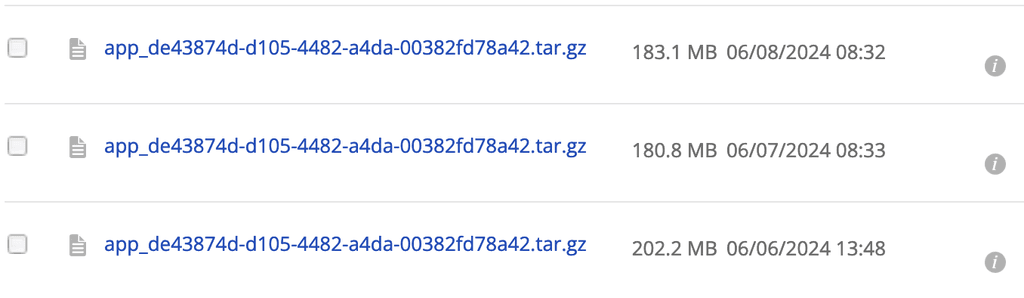

backup / snapshots retention

my backblaze storage bill seems to be increasing every month and i think it's due to cloudron backups. my retention policy is 1 month but it seems like older backups / snapshots are not getting deleted:

i'm confused also looking at this directory as the main folder seems time-based and the subfolder `/snapshot` seems app-based. this subfolder seems to also have many old versions beyond retention policy.

manually cleaning this up is very cumbersome in backblaze as their checkboxes are not html but pictures or something, so one must literally click all of them. i tried cloudron's 'cleanup backups' which seems to only consider ones within my 1 month retention policy. not sure if it's aware of the files beyond that.

    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-21-040001-622/app_XXXXX_v2.26.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-20-100002-716/app_XXXXX_v2.25.0 keep/discard: preserveSecs
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-20-040001-314/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-19-040001-285/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-18-040001-307/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-17-040001-202/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-16-040001-480/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-15-040001-449/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-14-040001-497/app_XXXXX_v2.25.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-13-100004-538/app_XXXXX_v2.24.0 keep/discard: keepWithinSecs
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-13-040001-279/app_XXXXX_v2.24.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-12-040001-348/app_XXXXX_v2.24.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-11-040001-615/app_XXXXX_v2.24.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-10-040001-315/app_XXXXX_v2.24.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-09-040001-222/app_XXXXX_v2.24.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner applyBackupRetention: 2025-01-08-040001-314/app_XXXXX_v2.24.0 keep/discard: referenced
    Feb 07 08:01:17 box:backupcleaner cleanupAppBackups: applying retention for appId 1bba7e1f-a2f5-4bd9-b2be-3a60d0153669 retention: {"keepLatest":true,"keepWithinSecs":2592000}

i didn't find any solutions in these related topics:

- Backups before retention policy not being deleted. Bug?

- Scaleway backups retained > retention policy

- Backup retention time

any suggestions?
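
For anyone wanting to check a bucket listing against the policy by hand, here is a rough sketch that flags backup folders older than the retention window. It assumes the folder names follow the `YYYY-MM-DD-HHMMSS-mmm/...` pattern seen in the log above and that those timestamps are UTC (both are guesses from the log, not documented behaviour); the function names are made up, and this is not how Cloudron's cleaner decides what to keep.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, List


def backup_time(name: str) -> datetime:
    """Parse the leading 'YYYY-MM-DD-HHMMSS' of a backup folder name (assumed UTC)."""
    stamp = name.split("/", 1)[0][:17]  # e.g. "2025-01-21-040001"
    return datetime.strptime(stamp, "%Y-%m-%d-%H%M%S").replace(tzinfo=timezone.utc)


def older_than_retention(
    names: Iterable[str], now: datetime, keep_within_secs: int = 2592000
) -> List[str]:
    """Return the names whose timestamp falls before now - keep_within_secs."""
    cutoff = now - timedelta(seconds=keep_within_secs)
    return [name for name in names if backup_time(name) < cutoff]
```

The default of `2592000` seconds is the `keepWithinSecs` value from the log (30 days). Entries flagged here may still be kept legitimately, for example when the log marks them as `referenced`.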

-

"fatal: not a git repository"

@nebulon said in "fatal: not a git repository":

Have you tried to use a subfolder in /app/data/ for this? This is where data is kept persistently in Cloudron.

Been using this folder and seems like it has had no issues for a week or two now

thanks for clarifying


-

"fatal: not a git repository"

I have been pulling an external git repository via n8n into the container's `/tmp` folder and have pushed changes successfully via an active workflow. At some point the workflow fails because of a `fatal: not a git repository` error and I'm not sure why this happens.

When navigating to the `.git` folder via the container's web terminal in Cloudron, the data inside looks like this:

    total 104
    drwxr-xr-x  8 cloudron cloudron  4096 May 14 12:00 .
    drwxr-xr-x  7 cloudron cloudron  4096 May 14 12:00 ..
    -rw-r--r--  1 cloudron cloudron     5 May 14 07:08 COMMIT_EDITMSG
    -rw-r--r--  1 cloudron cloudron    92 May 14 07:08 FETCH_HEAD
    -rw-r--r--  1 cloudron cloudron    41 May 14 07:08 ORIG_HEAD
    drwxr-xr-x  2 cloudron cloudron  4096 May  3 10:17 branches
    -rw-r--r--  1 cloudron cloudron   333 May 14 07:08 config
    drwxr-xr-x  2 cloudron cloudron  4096 May 14 12:00 hooks
    -rw-r--r--  1 cloudron cloudron 56770 May 14 07:08 index
    drwxr-xr-x  2 cloudron cloudron  4096 May 14 12:00 info
    drwxr-xr-x  3 cloudron cloudron  4096 May  3 10:18 logs
    drwxr-xr-x 52 cloudron cloudron  4096 May 14 07:08 objects
    drwxr-xr-x  5 cloudron cloudron  4096 May  3 10:18 refs

When cloning/pulling the repo fresh to a new folder, it looks slightly different but I'm not sure what would make it behave differently.

    total 116
    drwxr-xr-x  8 cloudron cloudron  4096 May 19 07:10 .
    drwxr-xr-x  7 cloudron cloudron  4096 May 19 07:10 ..
    -rw-r--r--  1 cloudron cloudron     5 May 19 07:10 COMMIT_EDITMSG
    -rw-r--r--  1 cloudron cloudron    92 May 19 07:10 FETCH_HEAD
    -rw-r--r--  1 cloudron cloudron    23 May 19 07:10 HEAD
    -rw-r--r--  1 cloudron cloudron    41 May 19 07:10 ORIG_HEAD
    drwxr-xr-x  2 cloudron cloudron  4096 May 19 07:10 branches
    -rw-r--r--  1 cloudron cloudron   333 May 19 07:10 config
    -rw-r--r--  1 cloudron cloudron    73 May 19 07:10 description
    drwxr-xr-x  2 cloudron cloudron  4096 May 19 07:10 hooks
    -rw-r--r--  1 cloudron cloudron 57218 May 19 07:10 index
    drwxr-xr-x  2 cloudron cloudron  4096 May 19 07:10 info
    drwxr-xr-x  3 cloudron cloudron  4096 May 19 07:10 logs
    drwxr-xr-x 14 cloudron cloudron  4096 May 19 07:10 objects
    -rw-r--r--  1 cloudron cloudron   114 May 19 07:10 packed-refs
    drwxr-xr-x  5 cloudron cloudron  4096 May 19 07:10 refs

I found one answer suggesting to copy `.git/ORIG_HEAD` to `.git/HEAD` in the broken repo, but that didn't change anything and creates another `fatal: detected dubious ownership in repository at XXXXX` error.

As it functions for weeks and then suddenly stops, I wonder if it's not an n8n issue and something that happens at the Cloudron or OS level. If there are any guesses about that I would appreciate it.

For now I just manually delete the folder and pull again, but this defeats the purpose of using an automation system; I can also delete as part of the workflow, but wanted to avoid pulling a large amount of data every time.
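
Comparing the two listings above, the broken `.git` folder has no `HEAD`, `description` or `packed-refs`, while the fresh clone has all three. As far as I know, git only treats a directory as a repository when it finds a `HEAD` file next to the `objects` and `refs` directories, so a missing `HEAD` alone would be enough to produce this error. A small sketch for checking that before the workflow pulls, so it re-clones only when needed (the function name is hypothetical, and this says nothing about why the files vanish):

```python
from pathlib import Path
from typing import List

# Entries git needs to recognise a directory as a repository (to my knowledge).
REQUIRED_ENTRIES = ("HEAD", "objects", "refs")


def missing_git_entries(git_dir: str) -> List[str]:
    """Return the required entries that are absent from the given .git directory."""
    base = Path(git_dir)
    return [name for name in REQUIRED_ENTRIES if not (base / name).exists()]
```

An empty list means the basic layout is intact; anything else suggests deleting the folder and cloning fresh.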

-

App Proxy set host header

@girish said in App Proxy set host header:

@rosano so your plausible is running with a different domain with https and you are proxying from cloudron with another domain name ?

they recommend setting the `Host` header to `plausible.io`, for example with nginx. I suppose that's what the App Proxy was doing before the update. I imagine it's not the direction Cloudron is going, in which case a hostable fork of the previous version would be amazing.

-

App Proxy set host header

@girish said in Cloudron 7.6 released:

app proxy: Host header is set to match the proxy domain instead of the target domain

I think this change broke my App Proxy for Plausible analytics. Would be nice to have the previous behaviour (or fork the older version).