There was a breaking change in n8n 2.0 (more info here: https://community.n8n.io/t/n8n-restrict-file-access-to-in-2-0/227750); you need to add this to your env file:

export N8N_RESTRICT_FILE_ACCESS_TO="/app/data"

Apparently the successor of the tool has (or will have) one: https://controlr.app/

New repo: https://github.com/bitbound/ControlR

What happened: https://github.com/immense/Remotely/issues/985 --> https://github.com/bitbound/ControlR/discussions/84

@andreasdueren I'm guessing the line 19 cleanup from here was a bit too optimistic; it removed the GitHub URL from the S3 package.

@davidbrrrr I don't think I actually set it up. Did you manually add another data folder in /app/data so the path /app/data/data is available?

In the "My Apps" view, finding apps by label doesn't work anymore; it did in versions <9. To reproduce, set a label that doesn't include any part of the URL and try to search for it. I searched the forums to see if this had already been mentioned, but couldn't find anything.

9.0.15

Two things:

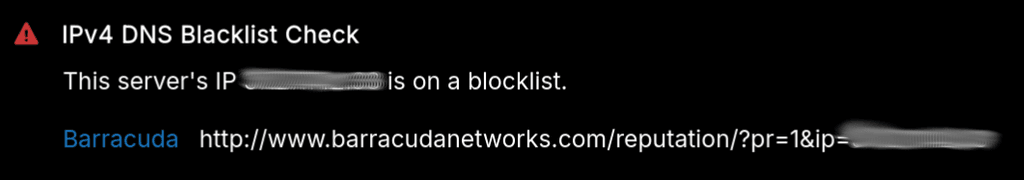

If I try to select and copy the link to barracudanetworks to check what's going on, the element collapses before I can hit the copy button. The first link goes directly to the removal request, which is good, but it would be nice if the reputation check were also a link, so I don't have to open the element inspector to copy the URL.

A customer of mine is having the same issue. It works for me because I'm not using SSO/OIDC, but they are, and they're unable to join video calls, which is a shame. If what I'm reading is true, the package needs quite a bit of adjustment to make this work.

Update: I linked the account and now I'm in all kinds of trouble. The /#/mailboxes view doesn't load anymore, and the console shows: TypeError: can't access property "label", item[column] is undefined

Side note: the /#/email-domains view takes forever to load.

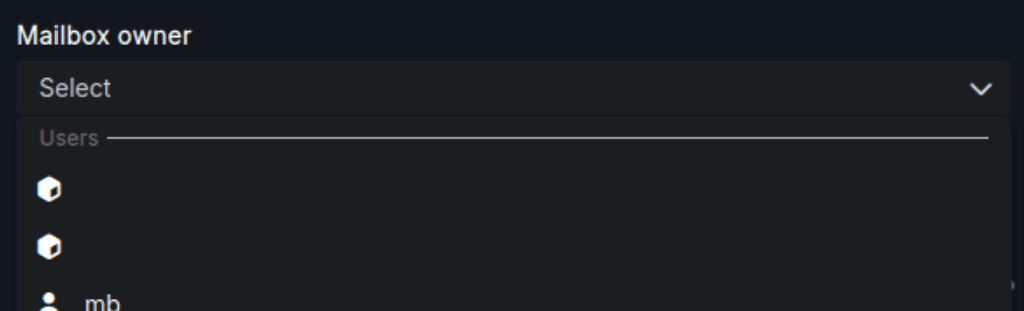

I just wanted to link a mailbox to a newly created, not yet activated user account. As far as I know, in versions <9 the email address was shown instead of the username if there was none; currently that's just an empty field.

To reproduce: create a user without a username (just the email address), go to /#/mailboxes, add a mailbox, and try to find the correct user when there is more than one such account.

Cloudron 9.0.15

@LoudLemur I haven't touched it in a while, but you're welcome to update it and test it out. Back then everything worked except email, but that was 4 years ago, so the app has probably evolved and email may work now.

@nebulon can you also add a line like client_max_body_size 20M (or something similar)? I just got a "413: Open WebUI: Server Connection Error" in Open WebUI, and the Ollama logs say: [error] 25#25: *7901 client intended to send too large body: 2460421 bytes, client: 172.18.0.1, server: _, request: "POST /api/chat HTTP/1.1", host: "lama.example.com". Apparently the 1MB default isn't enough for bigger queries (if that's what is currently set as the default).
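For reference, nginx documents the default client_max_body_size as 1m, and its size suffixes are binary (k = 1024 bytes, m = 1024 × 1024 bytes). A quick sketch of the arithmetic, assuming the Ollama package's nginx really uses that stock default (its config isn't shown here), shows why the 2460421-byte request from the log was rejected and why 20M would fit. The helper name is made up for illustration.

```python
# Compare the rejected request size from the Ollama/nginx log with body-size limits.
# Assumption: the package's nginx uses the stock default of 1m; 20m is the value
# proposed above, not a confirmed setting.

def nginx_size_to_bytes(value: str) -> int:
    """Convert an nginx size string such as '1m', '20M' or '512k' to bytes (hypothetical helper)."""
    units = {"k": 1024, "m": 1024 ** 2}
    value = value.strip().lower()
    if value and value[-1] in units:
        return int(value[:-1]) * units[value[-1]]
    return int(value)  # a plain number is already in bytes

request_bytes = 2460421  # "client intended to send too large body: 2460421 bytes"

for limit in ("1m", "20m"):
    limit_bytes = nginx_size_to_bytes(limit)
    # nginx rejects the request with 413 when the body is larger than the limit
    allowed = request_bytes <= limit_bytes
    print(f"{limit}: limit={limit_bytes} bytes, allowed={allowed}")
```

This prints "1m: limit=1048576 bytes, allowed=False" and "20m: limit=20971520 bytes, allowed=True": the request is roughly 2.3 MiB, so anything from about 3m upwards would have been enough for this particular query.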

@nebulon much obliged, works like a charm now!

Hi there! I'm playing around with Open WebUI and Ollama to host a local model, and I tried adding longer .txt files as "knowledge" and asking questions about them. Adding text to the vector DB seems to work, but when I try to query it via mistral-7b, Open WebUI stops with "504: Open WebUI: Server Connection Error" and Ollama throws this error after exactly 60 seconds:

Dec 08 11:53:19 2025/12/08 10:53:19 [error] 33#33: *41243 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 172.18.0.1, server: _, request: "POST /api/chat HTTP/1.1", upstream: "http://0.0.0.0:11434/api/chat", host: "lama.example.com"

Dec 08 11:53:19 172.18.0.1 - - [08/Dec/2025:10:53:19 +0000] "POST /api/chat HTTP/1.1" 504 176 "-" "Python/3.12 aiohttp/3.12.15"

Dec 08 11:53:19 [GIN] 2025/12/08 - 10:53:19 | 500 | 1m0s | 172.18.18.78 | POST "/api/chat"

This machine is quite beefy (20 cores, 64 GB RAM) but doesn't have a proper GPU, so it would probably work; it just takes a little longer to come up with a reply. I tried various env vars to adjust the timeout, but I'm guessing it comes from the built-in nginx. Can we set the timeout to 5 minutes or so?
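The "exactly 60 seconds" lines up with nginx's documented default proxy_read_timeout of 60s, and "upstream timed out ... while reading response header from upstream" is the message nginx logs when that timeout expires. A small sketch, assuming the timeout really comes from the package's nginx as guessed above, that converts the Go-style duration from the GIN log line (1m0s) to seconds and compares it with that default and with the proposed 5 minutes. It only handles the h/m/s/ms subset of Go's duration format, and the function name is hypothetical.

```python
import re

# Go-style duration tokens as printed in GIN logs, e.g. "1m0s", "150ms", "2.5s".
# "ms" is listed before "m" so that "150ms" is not read as minutes.
_TOKEN = re.compile(r"(\d+(?:\.\d+)?)(h|ms|m|s)")
_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 0.001}

def go_duration_seconds(text: str) -> float:
    """Convert a Go duration string (h/m/s/ms subset) to seconds (hypothetical helper)."""
    if not re.fullmatch(r"(?:\d+(?:\.\d+)?(?:h|ms|m|s))+", text):
        raise ValueError(f"unsupported duration: {text!r}")
    return sum(float(n) * _SECONDS[unit] for n, unit in _TOKEN.findall(text))

NGINX_DEFAULT_READ_TIMEOUT = 60  # nginx proxy_read_timeout default (60s)
PROPOSED_READ_TIMEOUT = 5 * 60   # the "5 minutes or so" asked for above

# From the GIN line: 500 | 1m0s | 172.18.18.78 | POST "/api/chat"
elapsed = go_duration_seconds("1m0s")
print(elapsed, elapsed >= NGINX_DEFAULT_READ_TIMEOUT, elapsed < PROPOSED_READ_TIMEOUT)
```

This prints "60.0 True True": the request died right at the 60s default, well short of a 300s limit.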

@hpz24 I have one machine that has quite a lot of special characters in the encryption password. What I do is this:

cloudron backup decrypt --password="123\$123" ...

The backslash is not part of the password; it's required to escape the $ sign (if your password contains one).
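If you script this, single quotes avoid the escaping entirely, because a POSIX shell expands nothing inside them. A minimal sketch in Python using the standard library's shlex.quote to build the --password argument (the password is the example value from above, and the rest of the command stays elided as "..."), then checking with sh that both the single-quoted form and the backslash-escaped form from the post arrive unchanged:

```python
import shlex
import subprocess

password = "123$123"  # example value from above, not a real password

# shlex.quote wraps the value in single quotes, so the shell won't expand $.
quoted = shlex.quote(password)
print(f"cloudron backup decrypt --password={quoted} ...")

# Sanity check: have sh print the quoted value back and compare.
echoed = subprocess.run(
    ["sh", "-c", f"printf '%s' {quoted}"],
    capture_output=True, text=True, check=True,
).stdout
print(echoed == password)

# The double-quoted form with \$ from the post yields the same string.
escaped = subprocess.run(
    ["sh", "-c", 'printf \'%s\' "123\\$123"'],
    capture_output=True, text=True, check=True,
).stdout
print(escaped == password)
```

The first line printed is cloudron backup decrypt --password='123$123' ..., followed by True twice. shlex.quote also handles passwords that contain single quotes, which is where hand-escaping usually goes wrong.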

Two UI things:

In the /#/mailboxes view, all accounts just show "Loading..." and nothing happens. It would be cool if it remembered the mailbox size, or at least started collecting the data again.

@brutalbirdie not sure if I can make it this evening, but if you're doing it, I'll definitely try.

For anyone having these problems, here's a quick update: after a few weeks, someone on the Postiz Discord (a terrible platform for bug reporting, by the way) suggested setting up Cloudflare R2. It took two restarts, but after that I managed to connect my LinkedIn pages; apparently it wasn't able to store the profile images, which caused the redirect above.

That said, now that I'm writing this, the bucket is still empty, but there are files in /app/data/data/2025/11/27. (The second data folder didn't exist yet; I had to create it manually, so maybe that solved it after all?)

Also, even with the working connection, this error appeared in the logs. Maybe we could point that path somewhere writable:

Nov 27 19:37:40 ⨯ Failed to write image to cache x5s7nobKRgx-CJ5Nq4z9X1PXnOjxJPxqtCDckv+9gKk= Error: ENOENT: no such file or directory, mkdir '/app/code/apps/frontend/.next/cache/images'

Nov 27 19:37:40 at async Object.mkdir (node:internal/fs/promises:860:10)

Nov 27 19:37:40 at async writeToCacheDir (/app/code/node_modules/next/dist/server/image-optimizer.js:190:5)

Nov 27 19:37:40 at async ImageOptimizerCache.set (/app/code/node_modules/next/dist/server/image-optimizer.js:574:13)

Nov 27 19:37:40 at async /app/code/node_modules/next/dist/server/response-cache/index.js:121:25

Nov 27 19:37:40 at async /app/code/node_modules/next/dist/lib/batcher.js:45:32 {

Nov 27 19:37:40 errno: -2,

Nov 27 19:37:40 code: 'ENOENT',

Nov 27 19:37:40 syscall: 'mkdir',

Nov 27 19:37:40 path: '/app/code/apps/frontend/.next/cache/images'

Nov 27 19:37:40 }

OK, never mind: I found the corresponding update, and it works again after updating to the next version.

Here's an odd one: I just updated a machine to Cloudron 9 and everything came back up except a MinIO instance. It's stuck in a restart loop with this:

Nov 23 18:32:31 ==> Changing ownership

Nov 23 18:32:31 ==> Starting minio

Nov 23 18:32:37 API: SYSTEM.iam

Nov 23 18:32:37 Time: 17:32:37 UTC 11/23/2025

Nov 23 18:32:37 DeploymentID: xxx-xxx-xxx-xxx-xxx

Nov 23 18:32:37 Error: Unable to initialize OpenID: Unknown JWK key type OKP (*fmt.wrapError)

Nov 23 18:32:37 6: internal/logger/logger.go:268:logger.LogIf()

Nov 23 18:32:37 5: cmd/logging.go:29:cmd.iamLogIf()

Nov 23 18:32:37 4: cmd/iam.go:281:cmd.(*IAMSys).Init()

Nov 23 18:32:37 3: cmd/server-main.go:1004:cmd.serverMain.func15.1()

Nov 23 18:32:37 2: cmd/server-main.go:564:cmd.bootstrapTrace()

Nov 23 18:32:37 1: cmd/server-main.go:1003:cmd.serverMain.func15()

Nov 23 18:32:37 2025-11-23T17:32:37Z

(This loops and repeats.)

It's a rather old release, probably the last one before they removed the GUI (RELEASE.2025-04-22T22-12-26Z). Any idea if this is fixed in a later version? I didn't change anything in between, so I'm a bit lost.
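"OKP" is the JWK key type for octet key pairs such as Ed25519 (RFC 8037). One plausible explanation, and it is only an assumption, is that the OpenID provider this MinIO instance points at now publishes an Ed25519 key in its JWKS that this MinIO release can't parse. A small sketch to check which key types a provider publishes, using standard OpenID Connect discovery (/.well-known/openid-configuration, then its jwks_uri). The function names are hypothetical, and the sample JWKS at the bottom is made up for illustration so the snippet runs offline.

```python
import json
import urllib.request

def key_types(jwks: dict) -> list[str]:
    """Return the 'kty' of each key in a JWKS document (e.g. 'RSA', 'EC', 'OKP')."""
    return [key.get("kty", "?") for key in jwks.get("keys", [])]

def fetch_json(url: str) -> dict:
    """GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)

def provider_key_types(issuer: str) -> list[str]:
    """Resolve the provider's jwks_uri via OpenID discovery and list each key's kty."""
    config = fetch_json(issuer.rstrip("/") + "/.well-known/openid-configuration")
    return key_types(fetch_json(config["jwks_uri"]))

# Offline illustration with a made-up JWKS (not taken from any real provider):
sample = {"keys": [{"kty": "RSA", "kid": "k1"}, {"kty": "OKP", "crv": "Ed25519", "kid": "k2"}]}
print(key_types(sample))           # ['RSA', 'OKP']
print("OKP" in key_types(sample))  # True
```

Against a live server you would call provider_key_types() with your provider's issuer URL (for example a hypothetical https://my.example.com/openid). If "OKP" shows up, that would support the theory, and the next step would be checking newer MinIO release notes for Ed25519/OKP support.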

@nebulon Nice! I would be interested in both the vector and the raster tile server.