- Enter the Flow editor

- Go to Settings on the top panel

- Go to Metadata on the left panel

You'll find the necessary options there.

shrey

Posts

-

Typebot Chat invitation links appear on social media with an image - Where is that setting?

-

Why does the backup process block administrative actions?

The blocking of all administrative actions (installing, resizing, restarting, etc. of any app) while the backup process is ongoing is, in my experience, a rather big nuisance.

I take backups 4 times a day, each of which lasts at least an hour. During this time, I can't execute any of the administrative tasks listed above.
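Taking those figures at face value, and assuming the four backup runs don't overlap, the minimum daily window in which administrative actions are blocked works out to:

$$
4 \times 1\,\text{h} = 4\,\text{h per day}, \qquad \frac{4\,\text{h}}{24\,\text{h}} \approx 16.7\,\%
$$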

This forces downtime on me, which isn't really acceptable. How do I avoid this scenario?

And can something be done about this on a permanent basis?

Thanks

-

Issue with uploading images

@james Hi. Well, I've only tried it with Instagram (Standalone) so far, and that is currently not working due to a separate upstream issue.

Although I wasn't able to complete the full (image-related) pipeline, I was at least able to upload and preview the images within Postiz, which worked fine.

-

Capability to tweak CORS config of an app

Some apps, such as n8n, depend on custom reverse proxy config for their core functioning. See:

https://forum.cloudron.io/topic/9011/bug-cors-error

https://community.n8n.io/t/cors-error-in-scenario-where-reverse-proxy-manipulation-is-not-available/25054

As Cloudron currently does not provide that facility, hosting the app on Cloudron becomes pointless for use cases where such config is essential.

Thus, we need that functionality within Cloudron.

-

Inventree - Open Source Inventory Management System

- Title: Inventree on Cloudron

- Main Page: https://inventree.org/

- Git: https://github.com/inventree/InvenTree

- Licence: MIT

- Docker: Yes

- Demo: https://docs.inventree.org/en/stable/demo/?h=demo

-

Summary: InvenTree is an open-source inventory management system which provides intuitive parts management and stock control. InvenTree is designed to be extensible, and provides multiple options for integration with external applications or addition of custom plugins.

-

Notes: Still trying it out for inventory management and, partly, Manufacturing Execution System (MES) requirements, but it looks promising so far.

- Alternative to / Libhunt link: https://alternativeto.net/software/inventree/

- Screenshots:

-

Peertube, I tried it today...

I also started using PeerTube a few days ago, and have been continuously uploading new videos to it.

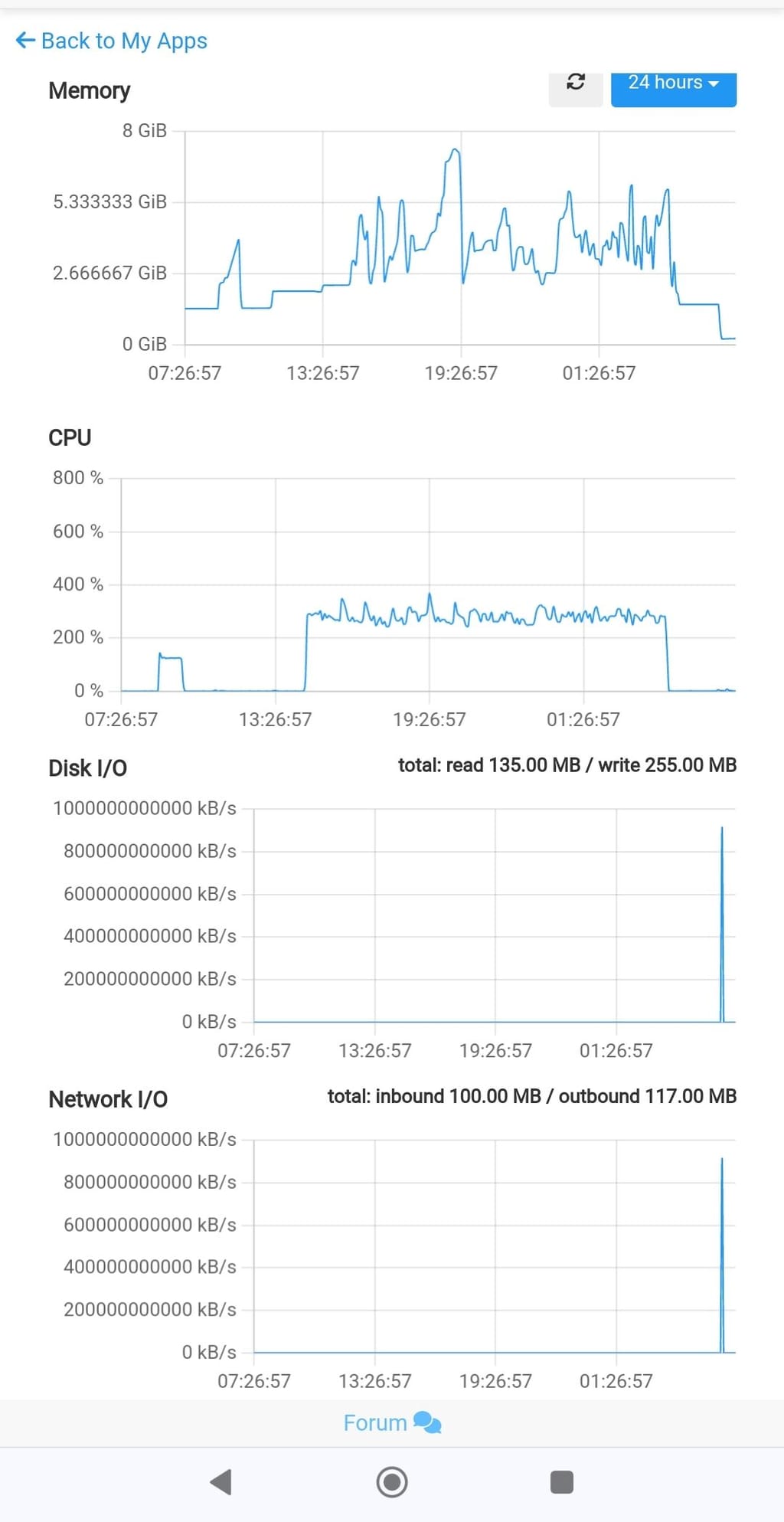

It crashed initially, but not since I increased the app's resource limits as well as the server resources. Here's the usage graph now:

-

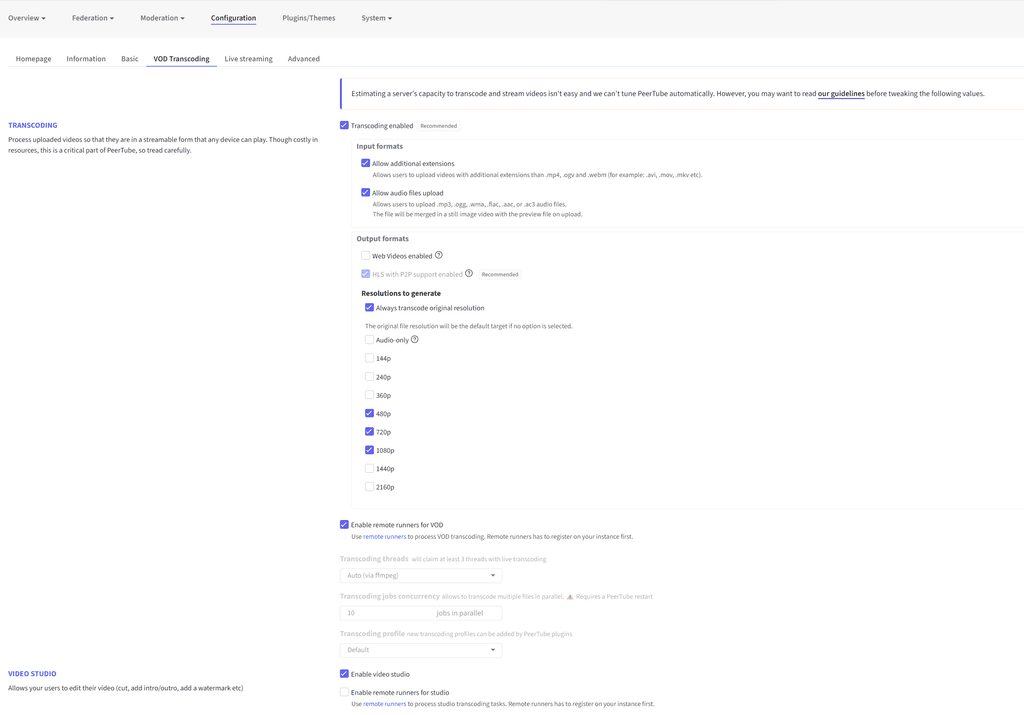

Professional PeerTube Installation

Sure.

- My data is stored in 3 places:

a. local storage (Only this is included in the 'App backups')

b. mounted volume (for certain options that are temporarily space-intensive, like `tmp` & `streaming_playlists`)

c. object storage (primary/permanent storage for the video files)

The config in `production.yaml`:

```yaml
storage:
  tmp: '/media/my-mounted-volume/my-project/storage/tmp/' # Use to download data (imports etc), store uploaded files before processing...
  avatars: '/app/data/storage/avatars/'
  streaming_playlists: '/media/my-mounted-volume/my-project/storage/streaming-playlists/'
  redundancy: '/app/data/storage/redundancy/'
  logs: '/app/data/storage/logs/'
  previews: '/app/data/storage/previews/'
  thumbnails: '/app/data/storage/thumbnails/'
  torrents: '/app/data/storage/torrents/'
  captions: '/app/data/storage/captions/'
  cache: '/app/data/storage/cache/'
  plugins: '/app/data/storage/plugins/'
  client_overrides: '/app/data/storage/client-overrides/'
  bin: /app/data/storage/bin/
  well_known: /app/data/storage/well_known/
  tmp_persistent: /app/data/storage/tmp_persistent/
  # Use two different buckets for Web videos and HLS videos on AWS S3
  storyboards: /app/data/storage/storyboards/
  web_videos: /app/data/storage/web-videos/

object_storage:
  enabled: true
  # Example AWS endpoint in the us-east-1 region
  endpoint: 'region.my-s3-domain'
  # Needs to be set to the bucket region when using AWS S3
  region: 'region'
  web_videos:
    bucket_name: 'my-bucket-name'
    prefix: 'direct/'
  streaming_playlists:
    bucket_name: 'my-bucket-name'
    prefix: 'playlist/'
  AWS_ACCESS_KEY_ID: 'my-key-ID'
  AWS_SECRET_ACCESS_KEY: 'my-access-key'
  credentials:
    aws_access_key_id: 'my-key-ID'
    aws_secret_access_key: 'my-access-key'
    access_key_id: 'my-key-ID'
    secret_access_key: 'my-access-key'
  max_upload_part: '1GB'
```

- For Remote Runners:

a.

b.

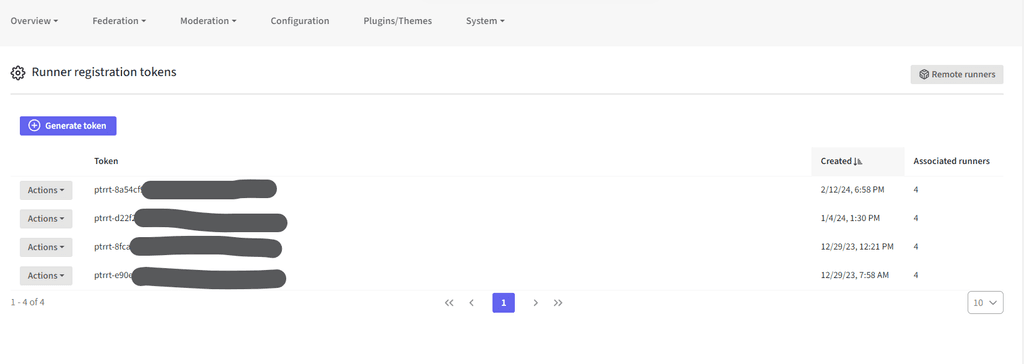

c. Set up remote machine(s) using the PeerTube CLI to connect to your app, using the Runner registration tokens: https://docs.joinpeertube.org/maintain/tools#peertube-runner

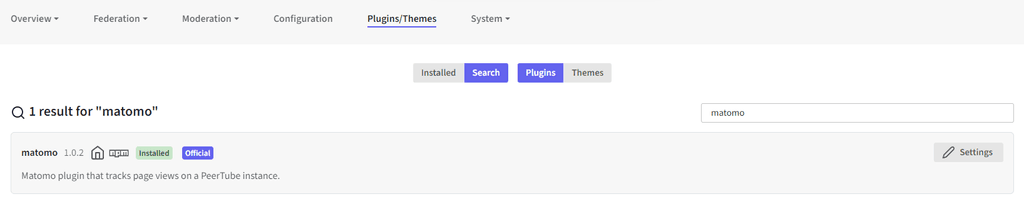

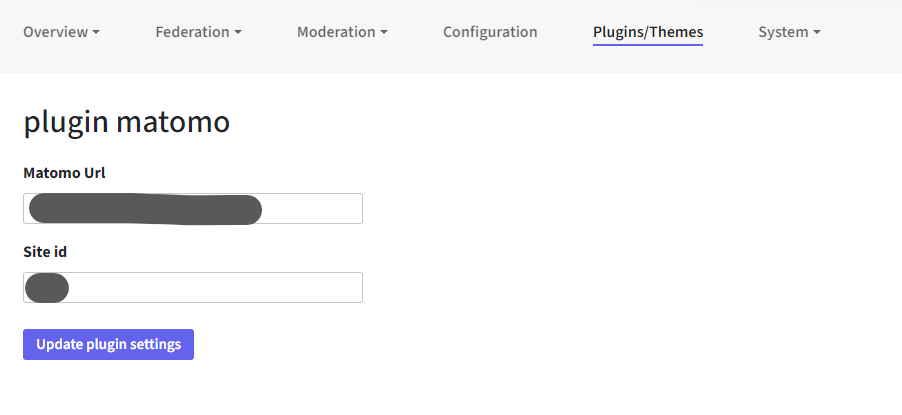

- For Matomo, I'm using a plugin: https://www.npmjs.com/package/peertube-plugin-matomo
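With the storage split described in this post (only local storage under `/app/data` is included in the app backups, while `tmp` and `streaming_playlists` live on the mounted volume), it can help to double-check which `storage:` paths from `production.yaml` will actually end up in a backup. A minimal sketch, assuming the `storage:` mapping has already been loaded into a Python dict (parsing the YAML itself would need a third-party library, left out here) and that `/app/data` is the only backed-up root; `classify_storage` is a hypothetical helper name:

```python
from pathlib import PurePosixPath

# Assumption: on Cloudron, only files under /app/data are part of the app backup.
BACKED_UP_ROOT = PurePosixPath("/app/data")


def classify_storage(storage: dict[str, str]) -> dict[str, list[str]]:
    """Split PeerTube `storage:` keys into backed-up and not-backed-up groups."""
    result: dict[str, list[str]] = {"backed_up": [], "not_backed_up": []}
    for key, path in storage.items():
        p = PurePosixPath(path)
        # is_relative_to() (Python 3.9+) compares whole path components,
        # so e.g. /app/database is NOT treated as being inside /app/data.
        group = "backed_up" if p.is_relative_to(BACKED_UP_ROOT) else "not_backed_up"
        result[group].append(key)
    return result


if __name__ == "__main__":
    # A subset of the storage paths from the production.yaml config in this post
    storage = {
        "tmp": "/media/my-mounted-volume/my-project/storage/tmp/",
        "streaming_playlists": "/media/my-mounted-volume/my-project/storage/streaming-playlists/",
        "avatars": "/app/data/storage/avatars/",
        "logs": "/app/data/storage/logs/",
        "web_videos": "/app/data/storage/web-videos/",
    }
    groups = classify_storage(storage)
    print("Included in app backups:", ", ".join(groups["backed_up"]))
    print("Not in app backups:", ", ".join(groups["not_backed_up"]))
```

Comparing path components rather than string prefixes is the main design choice here; a plain `startswith("/app/data")` check would wrongly match sibling directories such as `/app/database`.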


-

Unable to do a fresh installation

I followed these steps: https://docs.cloudron.io/storage/#swap, which increased the swap size.

This seems to have resolved the above issue, as the app has now installed successfully.

-

How to use custom nodes?

Figured it out.

I just uploaded my node's folder to the `custom-extensions` folder and restarted n8n.

-

Storage management in Immich?

What's the optimum way to manage the data (app code + uploaded files) and backups (of the app code)?

Currently, I've set the app to use an external Volume as the primary 'Data Directory'.

But as the Data Directory also includes the uploaded files (which could amount to hundreds of GBs), it seems prudent to store the uploaded files in a location that isn't part of the regular backups.

Initially, I did try mounting a volume and configuring `immich.json`, but that didn't seem to work.

`immich.json`:

```json
{
  "UPLOAD_LOCATION": "/media/<volume name>/data/immich_files",
  "THUMB_LOCATION": "/media/<volume name>/data/thumbs",
  "ENCODED_VIDEO_LOCATION": "/media/<volume name>/data/encoded-video",
  "PROFILE_LOCATION": "/media/<volume name>/data/profile"
}
```

Error log:

```
WARN [Api:DuplicateService] Unknown keys found: { "UPLOAD_LOCATION": "/media/<volume name>/data/immich_files", "THUMB_LOCATION": "/media/<volume name>/data/thumbs", "ENCODED_VIDEO_LOCATION": "/media/<volume name>/data/encoded-video", "PROFILE_LOCATION": "/media/<volume name>/data/profile" }
```

Immich references:

https://immich.app/docs/guides/custom-locations

https://immich.app/docs/install/docker-compose#step-2---populate-the-env-file-with-custom-values

-

MiroTalk SFU: Recording not possible?

@james said in MiroTalk SFU: Recording not possible?:

Change `AWS_S3_BUCKET_NAME` to `AWS_S3_BUCKET`, restart, and it should be working.

Thanks!

That did get it working.

@mirotalk-57bab571 Just one more kink remaining:

When I 'disconnect' the call without stopping the recording, the recording seems to get saved only in the local directory, and not in S3. The S3 upload seems to be triggered only when an ongoing recording is stopped explicitly.

-

Authentication error

@girish Indeed, that was the case! Thanks!

I'd installed the plugins, but hadn't checked them yet.

The plugin had a placeholder (invalid) value set as the default.

-

MiroTalk SFU: Recording not possible?

@james

Current behaviour:

1. I start a meeting as Host.

2. I start the Recording (only the Host is allowed to record).

3. Once the meeting is over, I end the meeting by clicking on "Leave Room".

4. The recording is available in `/app/data/rec` but not in S3.

If, in Step 3, I first stop the Recording and then end the meeting, only then does the Recording get uploaded to S3.

Expectation:

The Recording should get uploaded to S3 regardless of how the meeting is ended, even without explicitly stopping the recording.

-

Can I access a postgres database of an app from outside?

Hey @devtron.

I was curious about it too, so I just tried it using DBeaver.

I was able to successfully access Directus' Postgres database by:

- configuring an SSH connection to the server that Cloudron is hosted on

- using:

  - host = value obtained on the host server by running `docker inspect postgresql | grep IPAddress`

  - database = value obtained from Directus' 'terminal' (`echo $CLOUDRON_POSTGRESQL_DATABASE`)

  - username = value obtained from Directus' 'terminal' (`echo $CLOUDRON_POSTGRESQL_USERNAME`)

  - password = value obtained from Directus' 'terminal' (`echo $CLOUDRON_POSTGRESQL_PASSWORD`)
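For scripted access instead of DBeaver, the same values can be assembled into a single libpq-style connection URI. A minimal sketch, assuming the `CLOUDRON_POSTGRESQL_*` variables shown above are available (e.g. in the app's terminal), that the database listens on PostgreSQL's default port 5432, and that the resulting URI is used from the server itself or through an SSH tunnel like the DBeaver setup. The host is passed in separately because, as above, it comes from `docker inspect` on the host server; `build_postgres_uri` and the sample values are hypothetical:

```python
import os
from collections.abc import Mapping
from typing import Optional
from urllib.parse import quote


def build_postgres_uri(host: str, port: int = 5432,
                       env: Optional[Mapping[str, str]] = None) -> str:
    """Build a postgresql:// URI from the CLOUDRON_POSTGRESQL_* variables."""
    env = os.environ if env is None else env
    # Percent-encode every part so characters like '@' or ':' in a
    # generated password don't break the URI structure.
    user = quote(env["CLOUDRON_POSTGRESQL_USERNAME"], safe="")
    password = quote(env["CLOUDRON_POSTGRESQL_PASSWORD"], safe="")
    database = quote(env["CLOUDRON_POSTGRESQL_DATABASE"], safe="")
    return f"postgresql://{user}:{password}@{host}:{port}/{database}"


if __name__ == "__main__":
    # Hypothetical values for illustration only
    sample_env = {
        "CLOUDRON_POSTGRESQL_USERNAME": "user_abc",
        "CLOUDRON_POSTGRESQL_PASSWORD": "p@ss:word",
        "CLOUDRON_POSTGRESQL_DATABASE": "db_abc",
    }
    print(build_postgres_uri("172.18.0.2", env=sample_env))
    # postgresql://user_abc:p%40ss%3Aword@172.18.0.2:5432/db_abc
```

The `env` parameter defaults to the real process environment but can be overridden, which keeps the function easy to try out without touching the actual credentials.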

-

Unable to connect to a DigitalOcean managed database

@girish said in Unable to connect to a DigitalOcean managed database:

None of the feature like backup/restore will work

So far, they've been working fine, because all that needs to be backed up in such cases is the app code. Of course, as the database is external, it's understood that its backup system is external to and independent of Cloudron.

@girish said in Unable to connect to a DigitalOcean managed database:

Not an expert on the apps and I don't know if it's the main purpose. But they work just fine with fresh databases as well where you use it like a nocode/locode app.

Yes, pairing up with external databases is indeed one of the primary features of these apps. Using a fresh database is just one of the features, and not always the preferred one.

You might want to state clearly and prominently, particularly in the cases of such apps, that external connections are not allowed/recommended.

-

ActivePieces - nocode alternative to Zapier, Make, n8n etc

Does anyone here have any links to a good comparison between Activepieces and n8n?

Or any insights from first-hand experience with both?

-

Since update to v8.2.1 backups fail with "Too many empty directories"

I seem to have finally resolved the issue, for now, by deleting those empty directories using `find . -type d -empty -delete`. However, the app is already steadily creating new empty directories!
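Since the app keeps creating new empty directories, the cleanup may need to be repeated. As a rough Python counterpart to the `find . -type d -empty -delete` command above, e.g. for a scheduled cleanup, here is a minimal sketch; `remove_empty_dirs` is a hypothetical name, it walks the tree bottom-up so that directories which only contained empty directories are removed as well, and it deliberately leaves the starting directory itself in place:

```python
import os
import tempfile


def remove_empty_dirs(root: str) -> list[str]:
    """Delete empty directories below `root`, deepest first; return removed paths."""
    removed: list[str] = []
    # topdown=False yields children before their parents, so a parent that only
    # held empty subdirectories is already empty by the time it is visited.
    for dirpath, _dirnames, _filenames in os.walk(root, topdown=False):
        if dirpath == root:
            continue  # leave the starting directory itself in place
        if not os.listdir(dirpath):
            os.rmdir(dirpath)
            removed.append(dirpath)
    return removed


if __name__ == "__main__":
    # Throwaway demo tree: a/b/c is a chain of empty dirs, keep/ holds a file
    with tempfile.TemporaryDirectory() as tmp:
        os.makedirs(os.path.join(tmp, "a", "b", "c"))
        os.makedirs(os.path.join(tmp, "keep"))
        open(os.path.join(tmp, "keep", "file.txt"), "w").close()
        print(len(remove_empty_dirs(tmp)))  # 3: c, then b, then a
```

Checking `os.listdir()` at visit time (rather than relying on the directory lists `os.walk` collected earlier) is what lets a parent be removed after its empty children are gone.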

-

ETA for latest release package?

Hi all. When can we expect the updated package for the latest release of this software?

Thanks.

-

Mintlify - open-source documentation alternative to mkdocs, gitbook and readme.com

@marcusquinn

An important point to consider: they seem to be completely non-OSS.

PS: The GitHub link above is also non-existent.

-

SendGrid is over, what to use instead?

SMTP2GO is pretty underrated, imo.

I've been using their $15/month plan for the past few years to handle a variety of emails for a large list of domains/subdomains, without any issues. And the support is pretty decent as well!