Hi @girish, sorry for the delay.

I don't think `-D` necessarily affects performance. My first test after removing `-D` was much quicker, but after adding it back the run was just as quick, so there seems to be some kind of filesystem caching at play. I may analyse the differences at some point, but I suspect changing this flag doesn't have much impact on performance.

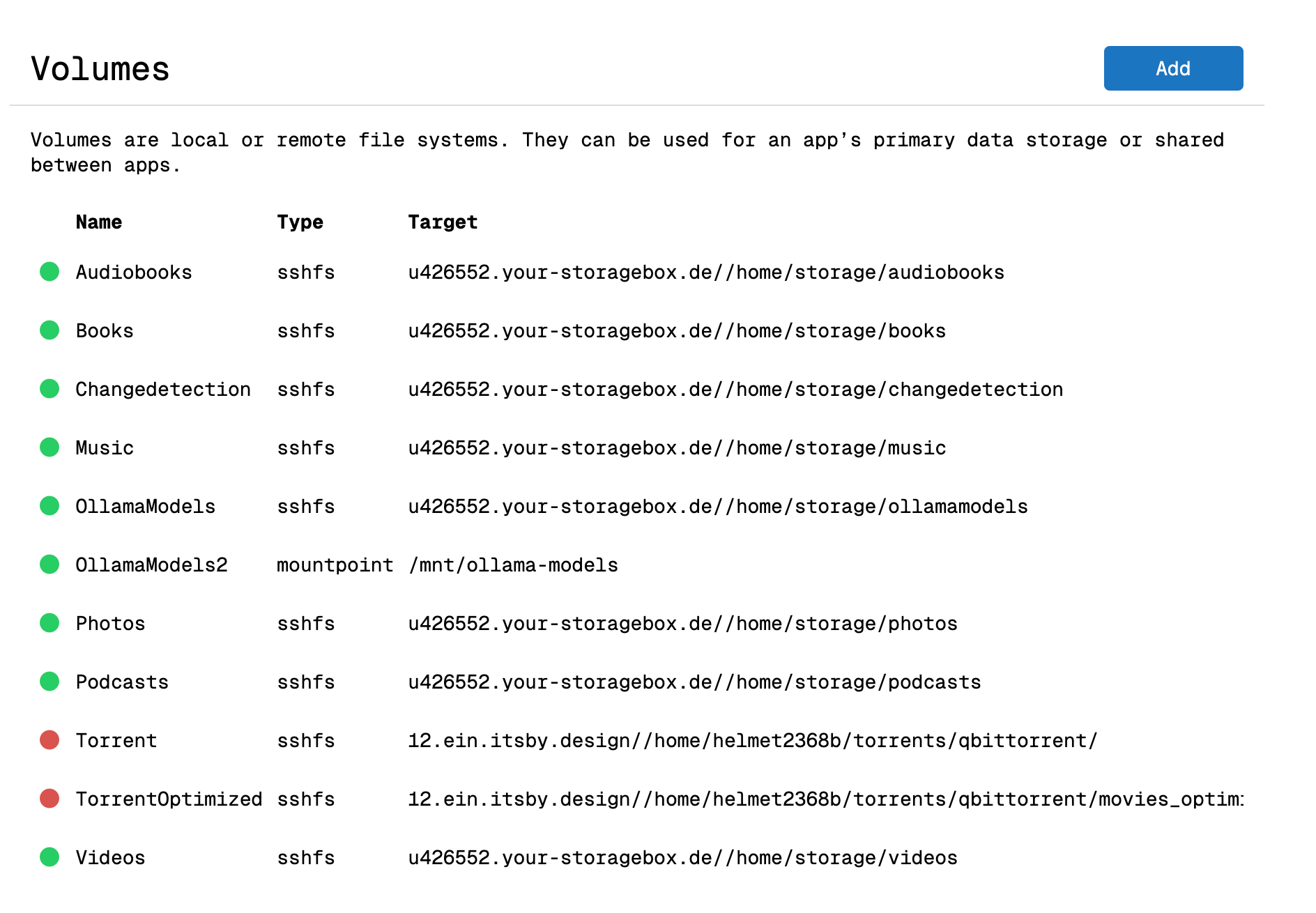

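What does affect performance a lot is the `-x` flag. However, as far as I've seen, it only stops `du` from traversing the remote (s3fs) filesystem when `du` is started on a parent directory such as `/mnt`, not when it is started on the mount point itself (`/mnt/s3fs`). That makes sense, since `-x` skips directories on filesystems other than the one the starting directory is on.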
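To make the `-x` behaviour concrete, here is a rough Python sketch of a `du -x`-style walk. It is not how `du` or the Disk Usage feature is actually implemented, and `disk_usage` is a hypothetical helper. It records the device ID (`st_dev`) of the starting directory and skips anything on a different device, which is why starting at `/mnt` skips a FUSE mount at `/mnt/s3fs`, while starting at `/mnt/s3fs` itself walks the whole bucket.

```python
import os
import stat


def disk_usage(root: str, one_file_system: bool = True) -> int:
    """Roughly emulate `du -sx root`: total allocated bytes under root.

    Hypothetical helper for illustration only. st_blocks counts
    512-byte units on Linux and most other POSIX systems.
    """
    root_dev = os.lstat(root).st_dev  # device of the starting directory
    seen = set()  # (st_dev, st_ino) pairs so hard links are counted once
    total = 0
    stack = [root]
    while stack:
        path = stack.pop()
        try:
            st = os.lstat(path)  # do not follow symlinks
        except OSError:
            continue  # entry vanished or is unreadable
        if one_file_system and st.st_dev != root_dev:
            continue  # another filesystem, e.g. a FUSE mount below root
        key = (st.st_dev, st.st_ino)
        if key in seen:
            continue
        seen.add(key)
        total += st.st_blocks * 512
        if stat.S_ISDIR(st.st_mode):
            try:
                with os.scandir(path) as entries:
                    stack.extend(entry.path for entry in entries)
            except OSError:
                continue  # permission denied, I/O error on a remote mount, etc.
    return total
```

With this sketch, `disk_usage("/mnt")` would skip `/mnt/s3fs` if it is a separate mount, whereas `disk_usage("/mnt/s3fs")` would not, because the starting directory's own device is already the s3fs one.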

It would be a lifesaver if you could add this, but I understand if it can't be done. In that case, a way to exclude certain volumes from the disk usage calculation would work too, for example an option in the Disk Usage UI to include or exclude specific volumes before the calculation actually runs.
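One way such an include/exclude option could work, sketched in Python under the assumption that the host is Linux and exposes its mount table in `/proc/self/mounts`: list the mount points whose filesystem type matches (s3fs mounts usually show up as `fuse.s3fs`, but check this on your own system), offer those in the UI, and skip any path at or below an excluded mount point. `mounts_of_type` and `is_excluded` are hypothetical names for illustration, not an existing API.

```python
import os
import re

# /proc/self/mounts encodes space, tab, newline and backslash as octal escapes.
_OCTAL_ESCAPE = re.compile(r"\\([0-7]{3})")


def _unescape(field: str) -> str:
    """Decode escapes such as '\\040' (space) in a mount-table field."""
    return _OCTAL_ESCAPE.sub(lambda m: chr(int(m.group(1), 8)), field)


def mounts_of_type(mounts_text: str, fstypes: set[str]) -> list[str]:
    """Return mount points whose filesystem type is in fstypes.

    Assumes the /proc/self/mounts line format:
    device mountpoint fstype options dump pass
    """
    result = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        if fields[2] in fstypes:
            result.append(_unescape(fields[1]))
    return result


def is_excluded(path: str, excluded: list[str]) -> bool:
    """True if path is an excluded mount point or lies below one."""
    path = os.path.normpath(path)
    for mount in excluded:
        mount = os.path.normpath(mount)
        prefix = mount.rstrip("/") + "/"
        if path == mount or path.startswith(prefix):
            return True
    return False
```

Reading `/proc/self/mounts` and passing its contents to `mounts_of_type` with `{"fuse.s3fs"}` would give the candidate volumes to show in the UI; the walk would then call `is_excluded` before descending into each directory. Matching on the path prefix plus a trailing `/` keeps `/mnt/s3fs` from accidentally excluding a sibling such as `/mnt/s3fs-backup`.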