@robi For the first log viewer mismatch: the first time shown comes from the log service itself and is converted to your browser's local time, whereas the second comes from the app itself and is treated as a plain string, so it is never converted. Apps normally run in UTC.

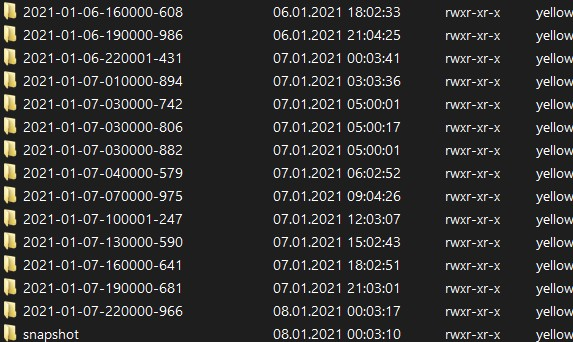
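
To illustrate the difference, here is a minimal Python sketch (not the actual log viewer code). The event time, the UTC+2 browser offset, and the function names `viewer_column` and `app_message` are all made up for the example. It only shows why the same instant can appear as two different times on one line.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical event time, recorded in UTC by both the log service and the app.
event_utc = datetime(2024, 3, 5, 14, 30, 0, tzinfo=timezone.utc)

# Assumed browser time zone for illustration: a fixed UTC+2 offset.
browser_tz = timezone(timedelta(hours=2))

def viewer_column(ts_utc, local_tz):
    """Timestamp from the log service: converted to the viewer's local time."""
    return ts_utc.astimezone(local_tz).strftime("%Y-%m-%d %H:%M:%S")

def app_message(ts_utc):
    """Timestamp written by the app: formatted as text once, never converted later."""
    return ts_utc.strftime("%Y-%m-%d %H:%M:%S")

shown_by_viewer = viewer_column(event_utc, browser_tz)
shown_in_message = app_message(event_utc)

# prints: 2024-03-05 16:30:00 | 2024-03-05 14:30:00
print(shown_by_viewer, "|", shown_in_message)
```

The two values differ by exactly the browser's UTC offset, which is the kind of gap you would expect to see in the screenshot.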

For the WinSCP part, I am not sure how WinSCP translates timestamps. The folder names are set from the server time.

I don't think any of this has anything to do with DB reconnection. If you have an issue there, please make a new entry in the TS forum section with some more info.